nvJPEG

A GPU-accelerated JPEG codec library.

1. Introduction

1.1. nvJPEG Decoder

The nvJPEG library provides high-performance, GPU-accelerated JPEG decoding for image formats commonly used in deep learning and hyperscale multimedia applications. The library offers single and batched JPEG decoding that efficiently uses the available GPU resources for optimal performance, and it gives users the flexibility to manage the memory allocation needed for decoding.

The nvJPEG library lets you pass the JPEG image data stream as input, retrieve the width and height of the image from the data stream, and use this information to manage the GPU memory allocation and the decoding. A dedicated API is provided for retrieving the image information from the raw JPEG image data stream.

Note

Throughout this document, the terms “CPU” and “Host” are used synonymously. Similarly, the terms “GPU” and “Device” are synonymous.

The nvJPEG library supports the following:

JPEG options:

Baseline and Progressive JPEG decoding/encoding

8 bits per pixel

Huffman bitstream decoding

Up to 4-channel JPEG bitstreams

8- and 16-bit quantization tables

The following chroma subsamplings for the three color channels Y, Cb, Cr (Y, U, V):

4:4:4

4:2:2

4:2:0

4:4:0

4:1:1

4:1:0

Features:

Hybrid decoding using both the CPU (i.e., host) and the GPU (i.e., device).

Hardware acceleration for baseline JPEG decode on supported GPUs and platforms (see Hardware Acceleration).

Input to the library is in the host memory, and the output is in the GPU memory.

Single image and batched image decoding.

Single-phase and multi-phase decoding.

Color space conversion.

User-provided memory manager for the device and pinned host memory allocations.

1.2. nvJPEG Encoder

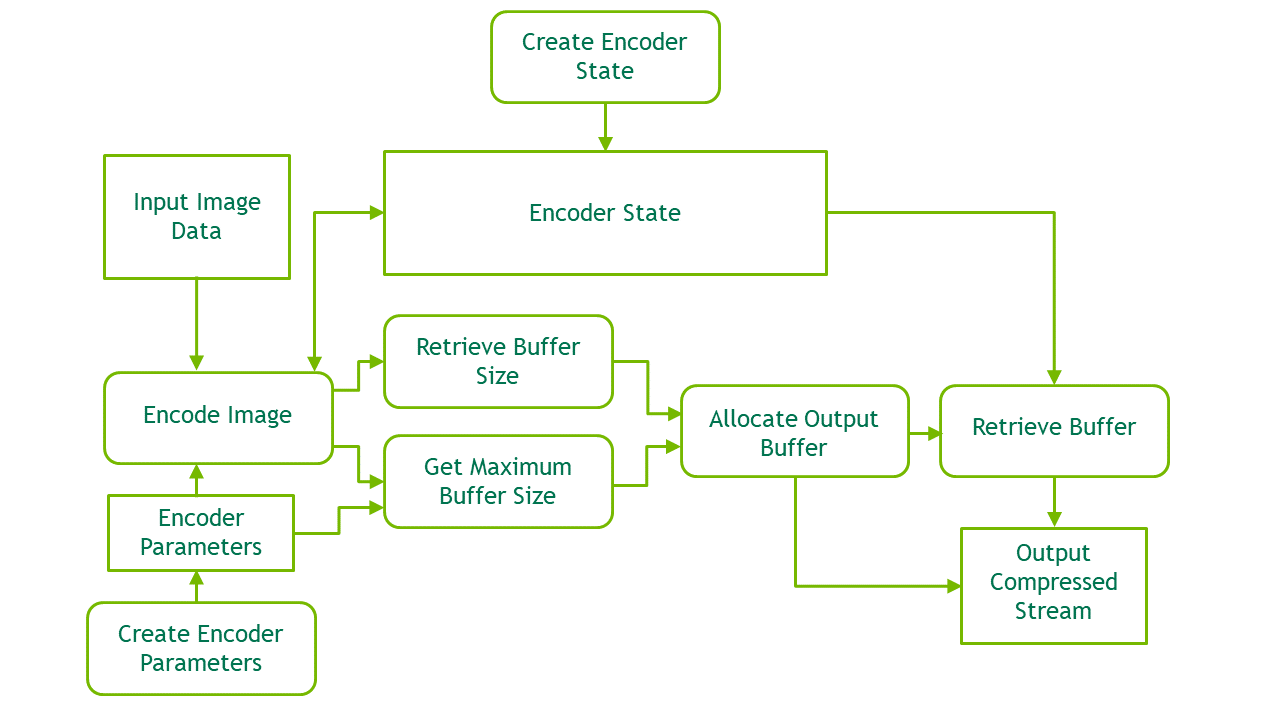

The encoding functions of the nvJPEG library perform GPU-accelerated compression of the user's image data into a JPEG bitstream. Users can provide input data in a number of formats and color spaces, and control the encoding process with parameters. The encoding functions allocate temporary buffers using the user-provided memory allocator.

Before calling the encoding functions, the user should perform a few prerequisite steps using the helper functions described in nvJPEG Encoder Helper API Reference.

1.3. Thread Safety

Not all nvJPEG types are thread safe.

When using decoder APIs across multiple threads, the following decoder types should be instantiated separately for each thread:

nvJPEG Bitstream Handle

nvJPEG Opaque JPEG Decoding State Handle

nvJPEG Decode Device Buffer Handle

nvJPEG Decode Pinned Buffer Handle

When using encoder APIs across multiple threads, nvjpegEncoderState_t should be instantiated separately for each thread.

For user-provided allocators (inputs to nvjpegCreateEx()), the user needs to ensure thread safety.

1.4. Multi-GPU support

The nvJPEG states and handles are bound to the device that was set as current during their creation. Using these states and handles while another device is set as current results in undefined behavior. The user is responsible for keeping track of the current device.

1.5. Hardware Acceleration

Hardware-accelerated JPEG decode is available on the following GPU architectures:

Ampere (A100, A30)

Hopper

Ada

Blackwell

Platforms that support hardware-accelerated JPEG decode:

Windows

Linux (x86_64, PowerPC, ARM64)

Hardware-accelerated JPEG encode is available on Jetson Thor (Blackwell), running Linux ARM64.

2. JPEG Decoding

2.1. Using JPEG Decoding

The nvJPEG library provides functions for both the decoding of a single image, and batched decoding of multiple images.

2.1.1. Single Image Decoding

For single-image decoding, you provide the data size and a pointer to the file data, and the decoded image is placed in the output buffer.

To use the nvJPEG library, start by calling the helper functions for initialization.

Create the nvJPEG library handle with one of the helper functions nvjpegCreateSimple() or nvjpegCreateEx().

Create the JPEG state with the helper function nvjpegJpegStateCreate(). See nvJPEG Type Declarations and nvjpegJpegStateCreate().

The following helper functions are available in the nvJPEG library:

nvjpegStatus_t nvjpegGetProperty(libraryPropertyType type, int *value);

[DEPRECATED] nvjpegStatus_t nvjpegCreate(nvjpegBackend_t backend, nvjpegDevAllocator_t *allocator, nvjpegHandle_t *handle);

nvjpegStatus_t nvjpegCreateSimple(nvjpegHandle_t *handle);

nvjpegStatus_t nvjpegCreateEx(nvjpegBackend_t backend, nvjpegDevAllocator_t *dev_allocator, nvjpegPinnedAllocator_t *pinned_allocator, unsigned int flags, nvjpegHandle_t *handle);

nvjpegStatus_t nvjpegDestroy(nvjpegHandle_t handle);

nvjpegStatus_t nvjpegJpegStateCreate(nvjpegHandle_t handle, nvjpegJpegState_t *jpeg_handle);

nvjpegStatus_t nvjpegJpegStateDestroy(nvjpegJpegState_t handle);

Other helper functions such as nvjpegSet*() and nvjpegGet*() can be used to configure the library functionality on a per-handle basis. Refer to the nvJPEG Helper API Reference for more details.

Retrieve the width and height information from the JPEG-encoded image by using the nvjpegGetImageInfo() function. Below is the signature of the nvjpegGetImageInfo() function:

nvjpegStatus_t nvjpegGetImageInfo(nvjpegHandle_t handle, const unsigned char *data, size_t length, int *nComponents, nvjpegChromaSubsampling_t *subsampling, int *widths, int *heights);

For each image to be decoded, pass the JPEG data pointer and data length to the above function. The nvjpegGetImageInfo() function is thread safe. One of its outputs is of type nvjpegChromaSubsampling_t, an enum that reports the chroma subsampling retrieved from the JPEG image. See nvJPEG Chroma Subsampling.

Use the nvjpegDecode() function in the nvJPEG library to decode this single JPEG image. The signature of this function is:

nvjpegStatus_t nvjpegDecode(nvjpegHandle_t handle, nvjpegJpegState_t jpeg_handle, const unsigned char *data, size_t length, nvjpegOutputFormat_t output_format, nvjpegImage_t *destination, cudaStream_t stream);

In the above nvjpegDecode() function, the parameters of type nvjpegOutputFormat_t, nvjpegImage_t, and cudaStream_t set the output behavior of the function. You provide the cudaStream_t parameter to indicate the stream to which your asynchronous tasks are submitted.

The nvjpegOutputFormat_t parameter:

The nvjpegOutputFormat_t parameter can be set to one of the output_format settings below:

| output_format | Meaning |
|---|---|
| NVJPEG_OUTPUT_UNCHANGED | Return the decoded image in planar format. |
| NVJPEG_OUTPUT_RGB | Convert to planar RGB. |
| NVJPEG_OUTPUT_BGR | Convert to planar BGR. |
| NVJPEG_OUTPUT_RGBI | Convert to interleaved RGB. |
| NVJPEG_OUTPUT_BGRI | Convert to interleaved BGR. |
| NVJPEG_OUTPUT_Y | Return the Y component only. |
| NVJPEG_OUTPUT_YUV | Return in the YUV planar format. |
| NVJPEG_OUTPUT_NV12 | Return in the NV12 format (separate Y and interleaved UV). Requires chroma subsampling 4:2:0. |
| NVJPEG_OUTPUT_YUY2 | Return in the YUY2 format (interleaved YUYV). Requires chroma subsampling 4:2:2. |
| NVJPEG_OUTPUT_UNCHANGEDI_U16 | Return the decoded image in interleaved format. |

For example, if output_format is set to NVJPEG_OUTPUT_Y, NVJPEG_OUTPUT_RGBI, or NVJPEG_OUTPUT_BGRI, then the output is written only to channel[0] of nvjpegImage_t, and the other channels are not touched. Alternatively, in the case of planar output, the data is written to the corresponding channels of the nvjpegImage_t destination structure. Finally, in the case of grayscale JPEG and RGB output, the luminance is used to create the grayscale RGB.

The table below shows which output formats the library supports for each number of channels in the bitstream.

| Output format | 1 channel | 2 channels | 3 channels | 4 channels |
|---|---|---|---|---|
| NVJPEG_OUTPUT_UNCHANGED | Yes | Yes | Yes | Yes |
| NVJPEG_OUTPUT_YUV | Only the first channel of the output is populated | No | Yes | No |
| NVJPEG_OUTPUT_NV12 | No | No | Yes | No |
| NVJPEG_OUTPUT_YUY2 | No | No | Yes | No |
| NVJPEG_OUTPUT_Y | Yes | No | Yes | Yes(a) |
| NVJPEG_OUTPUT_RGB | Yes(b) | No | Yes | Yes(a) |
| NVJPEG_OUTPUT_BGR | Yes(b) | No | Yes | Yes(a) |
| NVJPEG_OUTPUT_RGBI | Yes(b) | No | Yes | Yes(a) |
| NVJPEG_OUTPUT_BGRI | Yes(b) | No | Yes | Yes(a) |
| NVJPEG_OUTPUT_UNCHANGEDI_U16 | Yes(c) | Yes | No | No |

NOTES:

(b) The luminance is used to create the grayscale RGB output, as described above.
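The support matrix above can also be expressed as a lookup table, for example to reject an unsupported output format before calling the decoder. The following is a minimal sketch: demo_format is a hypothetical local enum that mirrors the NVJPEG_OUTPUT_* names but not the library's numeric values, and entries with a footnote marker are recorded as supported even though the notes may attach extra conditions to them.

```c
#include <stdbool.h>

/* Hypothetical stand-in for nvjpegOutputFormat_t; the order is local to
   this sketch and does not reproduce the library's enum values. */
typedef enum {
    DEMO_OUTPUT_UNCHANGED,
    DEMO_OUTPUT_YUV,
    DEMO_OUTPUT_NV12,
    DEMO_OUTPUT_YUY2,
    DEMO_OUTPUT_Y,
    DEMO_OUTPUT_RGB,
    DEMO_OUTPUT_BGR,
    DEMO_OUTPUT_RGBI,
    DEMO_OUTPUT_BGRI,
    DEMO_OUTPUT_UNCHANGEDI_U16,
    DEMO_OUTPUT_COUNT
} demo_format;

/* One row per output format, one column per channel count (1..4).
   Footnoted "Yes" entries are recorded as supported, and so is the
   YUV/1-channel case ("only the first channel is populated"). */
static const bool kSupported[DEMO_OUTPUT_COUNT][4] = {
    [DEMO_OUTPUT_UNCHANGED]      = {true,  true,  true,  true },
    [DEMO_OUTPUT_YUV]            = {true,  false, true,  false},
    [DEMO_OUTPUT_NV12]           = {false, false, true,  false},
    [DEMO_OUTPUT_YUY2]           = {false, false, true,  false},
    [DEMO_OUTPUT_Y]              = {true,  false, true,  true },
    [DEMO_OUTPUT_RGB]            = {true,  false, true,  true },
    [DEMO_OUTPUT_BGR]            = {true,  false, true,  true },
    [DEMO_OUTPUT_RGBI]           = {true,  false, true,  true },
    [DEMO_OUTPUT_BGRI]           = {true,  false, true,  true },
    [DEMO_OUTPUT_UNCHANGEDI_U16] = {true,  true,  false, false},
};

/* True if the table lists fmt as usable for a bitstream with n_channels
   channels (1..4); out-of-range inputs return false. */
bool demo_format_supported(demo_format fmt, int n_channels)
{
    if ((int)fmt < 0 || fmt >= DEMO_OUTPUT_COUNT) return false;
    if (n_channels < 1 || n_channels > 4) return false;
    return kSupported[fmt][n_channels - 1];
}
```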

As mentioned above, an important benefit of the nvjpegGetImageInfo() function is the ability to use the image information retrieved from the input JPEG image to allocate appropriately sized GPU memory for your decoding operation. The nvjpegGetImageInfo() function returns the widths, heights, and nComponents parameters:

nvjpegStatus_t nvjpegGetImageInfo(nvjpegHandle_t handle, const unsigned char *data, size_t length, int *nComponents, nvjpegChromaSubsampling_t *subsampling, int *widths, int *heights);

You can use the retrieved parameters, widths, heights, and nComponents, to calculate the required size of the output buffers, either for a single decoded JPEG or for every decoded JPEG in a batch. To optimally set the destination parameter for the nvjpegDecode() function, use the following guidelines:

| output_format | destination.pitch[c] should be at least | destination.channel[c] should be at least of size |
|---|---|---|
| NVJPEG_OUTPUT_Y | width[0] | destination.pitch[0]*height[0] |
| NVJPEG_OUTPUT_YUV | width[c] for c = 0, 1, 2 | destination.pitch[c]*height[c] for c = 0, 1, 2 |
| NVJPEG_OUTPUT_NV12 | width[c] for c = 0, and width[c]*2 for c = 1 | destination.pitch[c]*height[c] for c = 0, 1 |
| NVJPEG_OUTPUT_YUY2 | width[1]*4 for c = 0 | destination.pitch[0]*height[0] |
| NVJPEG_OUTPUT_RGB and NVJPEG_OUTPUT_BGR | width[0] for c = 0, 1, 2 | destination.pitch[0]*height[0] for c = 0, 1, 2 |
| NVJPEG_OUTPUT_RGBI and NVJPEG_OUTPUT_BGRI | width[0]*3 | destination.pitch[0]*height[0] |
| NVJPEG_OUTPUT_UNCHANGED | width[c] for c = [0, nComponents - 1] | destination.pitch[c]*height[c] for c = [0, nComponents - 1] |
| NVJPEG_OUTPUT_UNCHANGEDI_U16 | width[c]*nComponents*sizeof(unsigned short) | destination.pitch[c]*height[c] for c = [0, nComponents - 1] |
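The guidelines above reduce to simple arithmetic on the widths and heights returned by nvjpegGetImageInfo(). The sketch below computes the minimum pitch and plane size for a subset of the output formats. The demo_fmt enum, demo_plane struct, and demo_min_layout() function are hypothetical helpers for illustration; real code would store the pitches in nvjpegImage_t and allocate each plane in device memory.

```c
#include <stddef.h>

/* Hypothetical subset of output formats covered by this sketch. */
typedef enum { FMT_Y, FMT_YUV, FMT_NV12, FMT_YUY2, FMT_RGB, FMT_RGBI } demo_fmt;

typedef struct {
    size_t pitch; /* minimum bytes per row */
    size_t size;  /* minimum bytes for the whole plane */
} demo_plane;

/* Fills out[] with the minimum pitch/size of each output plane, following
   the sizing table. widths/heights are per-component values (index 0 = Y,
   1 = Cb, 2 = Cr). Returns the number of planes written. */
int demo_min_layout(demo_fmt fmt, const int *widths, const int *heights,
                    demo_plane out[3])
{
    switch (fmt) {
    case FMT_Y:
        out[0].pitch = (size_t)widths[0];
        out[0].size = out[0].pitch * (size_t)heights[0];
        return 1;
    case FMT_YUV: /* one plane per component, each at its own resolution */
        for (int c = 0; c < 3; ++c) {
            out[c].pitch = (size_t)widths[c];
            out[c].size = out[c].pitch * (size_t)heights[c];
        }
        return 3;
    case FMT_NV12: /* Y plane, then one interleaved UV plane */
        out[0].pitch = (size_t)widths[0];
        out[0].size = out[0].pitch * (size_t)heights[0];
        out[1].pitch = (size_t)widths[1] * 2;
        out[1].size = out[1].pitch * (size_t)heights[1];
        return 2;
    case FMT_YUY2: /* single interleaved YUYV plane */
        out[0].pitch = (size_t)widths[1] * 4;
        out[0].size = out[0].pitch * (size_t)heights[0];
        return 1;
    case FMT_RGB: /* three planes, each sized like the luma plane */
        for (int c = 0; c < 3; ++c) {
            out[c].pitch = (size_t)widths[0];
            out[c].size = out[c].pitch * (size_t)heights[0];
        }
        return 3;
    case FMT_RGBI: /* one interleaved plane, 3 bytes per pixel */
        out[0].pitch = (size_t)widths[0] * 3;
        out[0].size = out[0].pitch * (size_t)heights[0];
        return 1;
    }
    return 0;
}
```

For a 640x480 4:2:0 image (widths {640, 320, 320}, heights {480, 240, 240}), NV12 output needs a 640-byte-pitch luma plane of 480 rows and a 640-byte-pitch interleaved chroma plane of 240 rows.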

Ensure that the nvJPEG Image structure (or structures, in the case of batched decode) is filled with the pointers and pitches of the allocated buffers. The nvjpegImage_t structure that holds the output pointers is defined as follows:

typedef struct {
    unsigned char * channel[NVJPEG_MAX_COMPONENT];
    size_t pitch[NVJPEG_MAX_COMPONENT];
} nvjpegImage_t;

NVJPEG_MAX_COMPONENT is the maximum number of color components the nvJPEG library supports in the current release. For generic images, this is the maximum number of encoded channels that the library is able to decompress.

Finally, when you call the nvjpegDecode() function with the parameters described above, the nvjpegDecode() function fills the output buffers with the decoded data.
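As an illustration of the structure setup described above, the sketch below fills an nvjpegImage_t-shaped structure for planar RGB output by carving three planes out of a single allocation. To compile without nvJPEG or CUDA, it uses a local mirror of the structure (demo_image), assumes a component limit of 4 in place of NVJPEG_MAX_COMPONENT, and calls host malloc() where real code would allocate device memory, for example with cudaMalloc().

```c
#include <stdlib.h>
#include <string.h>

#define DEMO_MAX_COMPONENT 4 /* assumed stand-in for NVJPEG_MAX_COMPONENT */

/* Local mirror of the nvjpegImage_t layout shown above. */
typedef struct {
    unsigned char *channel[DEMO_MAX_COMPONENT];
    size_t pitch[DEMO_MAX_COMPONENT];
} demo_image;

/* Points channel[0..2] at three consecutive width*height planes inside one
   allocation and sets each pitch to width, the minimum the sizing table
   allows for planar RGB/BGR. Unused channels are left NULL with pitch 0.
   Returns the base pointer (free it once), or NULL on failure. */
unsigned char *demo_alloc_planar_rgb(demo_image *img, int width, int height)
{
    size_t pitch = (size_t)width;
    size_t plane = pitch * (size_t)height;
    unsigned char *base = malloc(plane * 3); /* stand-in for cudaMalloc() */
    if (base == NULL) return NULL;

    memset(img, 0, sizeof *img);
    for (int c = 0; c < 3; ++c) {
        img->channel[c] = base + (size_t)c * plane;
        img->pitch[c] = pitch;
    }
    return base;
}
```

Using one allocation keeps the three planes contiguous and means a single free (or cudaFree() in real code) releases all of them.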

2.1.2. Decode using Decoupled Phases

The nvJPEG library allows further separation of the host and device phases of the decode process. The host phase of the decoding does not need access to device resources.

A few examples of decoupled APIs can be found under Decode API—Decoupled Decoding.

Below is the sequence of API calls to decode a single image.

Initialize all the items that are used in the decoding process:

Create the library handle using one of the library handle initialization routines.

Choose the decoder implementation nvjpegBackend_t, and create the decoder using nvjpegDecoderCreate().

Create the JPEG decoder state using nvjpegDecoderStateCreate().

Create the JPEG stream using nvjpegJpegStreamCreate().

Create the pinned and device buffers used by the decoder, using the APIs below, respectively. These buffers store intermediate decoding results.

nvjpegBufferPinnedCreate()

nvjpegBufferDeviceCreate()

Link the buffers to the JPEG state using the following APIs, respectively:

nvjpegStateAttachPinnedBuffer()

nvjpegStateAttachDeviceBuffer()

Create the decode parameters using the API below. They are used to set the output format and to enable ROI decode:

nvjpegDecodeParamsCreate()

Perform decoding:

Parse the JPEG bitstream using nvjpegJpegStreamParse().

Encoded bitstream information, such as channel dimensions, can be retrieved using the APIs below. This information is used to allocate the output pointers in nvjpegImage_t.

nvjpegJpegStreamGetComponentsNum()

nvjpegJpegStreamGetComponentDimensions()

Note

Ensure that nvjpegJpegStreamParse() returns success before calling the nvjpegDecodeJpeg* APIs in the next step.

Call the decode APIs in the sequence below to decode the image. All the APIs in this sequence must return NVJPEG_STATUS_SUCCESS to ensure correct decoding:

nvjpegDecodeJpegHost()

nvjpegDecodeJpegTransferToDevice()

nvjpegDecodeJpegDevice()
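The rule that each call must succeed before the next one runs is commonly enforced with a status-checking macro. In the sketch below, demo_status is a local stand-in for nvjpegStatus_t, and parse_stream(), decode_host(), transfer_to_device(), and decode_device() are hypothetical stubs standing in for nvjpegJpegStreamParse(), nvjpegDecodeJpegHost(), nvjpegDecodeJpegTransferToDevice(), and nvjpegDecodeJpegDevice(); the g_fail_at global exists only to simulate a failing phase.

```c
#include <stdio.h>

/* Local stand-in for nvjpegStatus_t: 0 means success. */
typedef int demo_status;
#define DEMO_SUCCESS 0

/* Simulation controls: index of the phase that should fail (-1 = none)
   and a count of how many phases actually ran. */
static int g_fail_at = -1;
static int g_calls = 0;

static demo_status phase(int index)
{
    ++g_calls;
    return index == g_fail_at ? 1 : DEMO_SUCCESS;
}

/* Hypothetical stubs; real code calls the nvJPEG function named alongside. */
static demo_status parse_stream(void)       { return phase(0); } /* nvjpegJpegStreamParse() */
static demo_status decode_host(void)        { return phase(1); } /* nvjpegDecodeJpegHost() */
static demo_status transfer_to_device(void) { return phase(2); } /* nvjpegDecodeJpegTransferToDevice() */
static demo_status decode_device(void)      { return phase(3); } /* nvjpegDecodeJpegDevice() */

/* Stop at the first failing call so later phases never run on bad state. */
#define DEMO_CHECK(call)                                              \
    do {                                                              \
        demo_status s_ = (call);                                      \
        if (s_ != DEMO_SUCCESS) {                                     \
            fprintf(stderr, "%s failed with status %d\n", #call, s_); \
            return s_;                                                \
        }                                                             \
    } while (0)

demo_status demo_decode_decoupled(void)
{
    DEMO_CHECK(parse_stream());
    DEMO_CHECK(decode_host());
    DEMO_CHECK(transfer_to_device());
    DEMO_CHECK(decode_device());
    return DEMO_SUCCESS;
}
```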

2.1.3. Batched Image Decoding

For batched image decoding, you provide pointers to multiple file data buffers in memory, along with the size of each buffer. The nvJPEG library decodes these images and places the decoded data in the output buffers that you specify in the parameters.

2.1.3.1. Single Phase

For batched image decoding in a single phase, follow these steps:

Call the nvjpegDecodeBatchedInitialize() function to initialize the batched decoder. Specify the batch size in the batch_size parameter. See nvjpegDecodeBatchedInitialize().

Next, call nvjpegDecodeBatched() for each new batch. Make sure to pass the parameters that are correct for the specific batch of images. If the size of the batch changes, or if the batch decoding fails, call the nvjpegDecodeBatchedInitialize() function again.
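The re-initialization rule matters whenever the number of images is not a multiple of the batch size, because the last batch is smaller. The sketch below plans fixed-size batches and counts how many times nvjpegDecodeBatchedInitialize() would be called under that rule. demo_plan_batches() is a hypothetical helper, and it does not model the extra re-initialization required after a failed batch.

```c
#include <stddef.h>

/* Splits n_images into consecutive batches of at most max_batch images and
   writes each batch size to sizes[] (capacity max_batches). Returns the
   number of batches, or 0 if the inputs are invalid or sizes[] is too small.
   *n_inits receives how many times the batched decoder would be
   (re)initialized: once for the first batch, plus once whenever the batch
   size differs from the previous one. */
size_t demo_plan_batches(size_t n_images, size_t max_batch,
                         size_t *sizes, size_t max_batches, size_t *n_inits)
{
    if (max_batch == 0 || n_images == 0) return 0;
    size_t n_batches = (n_images + max_batch - 1) / max_batch;
    if (n_batches > max_batches) return 0;

    size_t inits = 0, prev = 0;
    for (size_t b = 0; b < n_batches; ++b) {
        size_t remaining = n_images - b * max_batch;
        sizes[b] = remaining < max_batch ? remaining : max_batch;
        if (sizes[b] != prev) ++inits; /* batch size changed: re-initialize */
        prev = sizes[b];
    }
    *n_inits = inits;
    return n_batches;
}
```

For 10 images with a batch size of 4, the plan is 4, 4, 2, which needs two initializations: one at the start and one for the final, smaller batch.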

2.2. nvJPEG Type Declarations

2.2.1. nvJPEG Backend

typedef enum {

NVJPEG_BACKEND_DEFAULT = 0,

NVJPEG_BACKEND_HYBRID = 1,

NVJPEG_BACKEND_GPU_HYBRID = 2,

NVJPEG_BACKEND_HARDWARE = 3,

NVJPEG_BACKEND_GPU_HYBRID_DEVICE = 4,

NVJPEG_BACKEND_HARDWARE_DEVICE = 5,

NVJPEG_BACKEND_LOSSLESS_JPEG = 6

} nvjpegBackend_t;

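The nvjpegBackend_t enum selects the decoding back-end: the library can choose one internally (the default), or the user can explicitly select, for example, CPU Huffman decoding, GPU Huffman decoding, or hardware-accelerated decoding of baseline JPEG images.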

| Member | Description |
|---|---|
| NVJPEG_BACKEND_DEFAULT | Back-end is selected internally. |
| NVJPEG_BACKEND_HYBRID | Uses the CPU for Huffman decoding. |
| NVJPEG_BACKEND_GPU_HYBRID | Uses the GPU for Huffman decoding. |
| NVJPEG_BACKEND_HARDWARE | Uses Hardware Acceleration for decode. Supports baseline JPEG images with a single scan and 1 or 3 channels. The 4:1:0 and 4:1:1 chroma subsamplings are not supported. |
| NVJPEG_BACKEND_GPU_HYBRID_DEVICE | Supports input bitstreams in device memory. Can be used only with the batched decode APIs, for baseline JPEG images without restart intervals. |
| NVJPEG_BACKEND_HARDWARE_DEVICE | Supports input bitstreams in device memory. Can be used only with the batched decode APIs. Uses Hardware Acceleration for decode. Supports baseline JPEG images with a single scan and 1 or 3 channels. The 4:1:0 and 4:1:1 chroma subsamplings are not supported. |
| NVJPEG_BACKEND_LOSSLESS_JPEG | Supports lossless JPEG bitstreams as defined in the JPEG 92 standard. Bitstreams with up to 2 channels and prediction mode 1 are supported. |
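The NVJPEG_BACKEND_HARDWARE constraints in the table can be checked up front before choosing that back-end. The following sketch encodes only the constraints listed above: demo_subsampling and the is_single_scan input are hypothetical, the encoding argument is assumed to hold one of the nvjpegJpegEncoding_t marker values, and GPU or platform support (see Hardware Acceleration) is not checked.

```c
#include <stdbool.h>

/* Marker value listed for NVJPEG_ENCODING_BASELINE_DCT. */
#define DEMO_ENCODING_BASELINE_DCT 0xc0

/* Hypothetical subsampling labels local to this sketch. */
typedef enum {
    DEMO_CSS_444, DEMO_CSS_422, DEMO_CSS_420, DEMO_CSS_440,
    DEMO_CSS_411, DEMO_CSS_410, DEMO_CSS_GRAY
} demo_subsampling;

/* True if the image meets the NVJPEG_BACKEND_HARDWARE constraints from the
   table: baseline, single scan, 1 or 3 channels, not 4:1:0 or 4:1:1. */
bool demo_hw_backend_eligible(int encoding, bool is_single_scan,
                              int n_components, demo_subsampling css)
{
    if (encoding != DEMO_ENCODING_BASELINE_DCT) return false;
    if (!is_single_scan) return false;
    if (n_components != 1 && n_components != 3) return false;
    if (css == DEMO_CSS_410 || css == DEMO_CSS_411) return false;
    return true;
}
```

An application could use such a check to route ineligible images to one of the hybrid back-ends instead.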

2.2.2. nvJPEG Bitstream Handle

struct nvjpegJpegStream;

typedef struct nvjpegJpegStream* nvjpegJpegStream_t;

This handle stores the bitstream parameters on the host. It is used to retrieve bitstream metadata with the APIs defined in nvJPEG Stream API.

2.2.3. nvJPEG Decode Device Buffer Handle

struct nvjpegBufferDevice;

typedef struct nvjpegBufferDevice* nvjpegBufferDevice_t;

This nvjpegBufferDevice_t is used by decoder states to store the intermediate information in device memory.

2.2.4. nvJPEG Decode Parameter Handle

struct nvjpegDecodeParams;

typedef struct nvjpegDecodeParams* nvjpegDecodeParams_t;

This decoder parameter handle stores parameters such as the output format and the ROI decode parameters, which are set using the decode parameter APIs (for example, nvjpegDecodeParamsSetOutputFormat() and nvjpegDecodeParamsSetROI()).

2.2.5. nvJPEG Decode Pinned Buffer Handle

struct nvjpegBufferPinned;

typedef struct nvjpegBufferPinned* nvjpegBufferPinned_t;

This nvjpegBufferPinned_t handle is used by decoder states to store the intermediate information on pinned memory.

2.2.6. nvJPEG Decoder Handle

struct nvjpegJpegDecoder;

typedef struct nvjpegJpegDecoder* nvjpegJpegDecoder_t;

This decoder handle stores the intermediate decoder data, which is shared across the decoding stages. This decoder handle is initialized for a given nvjpegBackend_t. It is used as input to the Decode API—Decoupled Decoding.

2.2.7. nvJPEG Host Pinned Memory Allocator Interface

typedef int (*tPinnedMalloc)(void**, size_t, unsigned int flags);

typedef int (*tPinnedFree)(void*);

typedef struct {

tPinnedMalloc pinned_malloc;

tPinnedFree pinned_free;

} nvjpegPinnedAllocator_t;

When the nvjpegPinnedAllocator_t *pinned_allocator parameter of the nvjpegCreateEx() function is set to a pointer to the above nvjpegPinnedAllocator_t structure, this structure is used for allocating and releasing host pinned memory for copying data to and from the device. The function prototypes for the memory allocation and memory freeing functions are similar to those of cudaHostAlloc() and cudaFreeHost(). They should return 0 on success and non-zero otherwise.

However, if the nvjpegPinnedAllocator_t *pinned_allocator parameter of the nvjpegCreateEx() function is set to NULL, the default memory allocation functions cudaHostAlloc() and cudaFreeHost() are used. When the library handle is created with nvjpegCreate() or nvjpegCreateSimple(), the default host pinned memory allocator is used.
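A custom pinned allocator consists of two functions matching tPinnedMalloc and tPinnedFree. The sketch below counts live allocations, which can help track down leaks. So that it compiles without CUDA, it redeclares the interface locally (demo_pinned_allocator) and uses host malloc()/free() as stand-ins for cudaHostAlloc()/cudaFreeHost(); in real code the two functions would go into an nvjpegPinnedAllocator_t passed to nvjpegCreateEx(). The global counter is not thread safe, and as noted in Thread Safety, that responsibility lies with the user.

```c
#include <stdlib.h>

/* Local copies of the documented function-pointer types. */
typedef int (*demo_tPinnedMalloc)(void **, size_t, unsigned int flags);
typedef int (*demo_tPinnedFree)(void *);

typedef struct {
    demo_tPinnedMalloc pinned_malloc;
    demo_tPinnedFree pinned_free;
} demo_pinned_allocator;

static int g_live_pinned = 0; /* not thread safe: illustration only */

/* Same contract as the documented interface: 0 on success, non-zero
   otherwise. Real code would forward flags to cudaHostAlloc(). */
static int counting_pinned_malloc(void **ptr, size_t size, unsigned int flags)
{
    (void)flags;
    *ptr = malloc(size); /* stand-in for cudaHostAlloc(ptr, size, flags) */
    if (*ptr == NULL) return 1;
    ++g_live_pinned;
    return 0;
}

static int counting_pinned_free(void *ptr)
{
    if (ptr != NULL) --g_live_pinned; /* freeing NULL is a no-op */
    free(ptr);                        /* stand-in for cudaFreeHost(ptr) */
    return 0;
}

demo_pinned_allocator demo_make_pinned_allocator(void)
{
    demo_pinned_allocator a = { counting_pinned_malloc, counting_pinned_free };
    return a;
}
```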

2.2.8. nvJPEG Extended Host Pinned Memory Allocator Interface

typedef int (*tPinnedMallocV2)(void* ctx, void **ptr, size_t size, cudaStream_t stream);

typedef int (*tPinnedFreeV2)(void* ctx, void *ptr, size_t size, cudaStream_t stream);

typedef struct

{

tPinnedMallocV2 pinned_malloc;

tPinnedFreeV2 pinned_free;

void *pinned_ctx;

} nvjpegPinnedAllocatorV2_t;

Extended pinned allocators support stream-ordered allocations along with the user-defined context information pinned_ctx. When invoking the allocators, nvJPEG passes pinned_ctx as input to the extended pinned allocators.

2.2.9. nvJPEG Image

typedef struct {

unsigned char * channel[NVJPEG_MAX_COMPONENT];

size_t pitch[NVJPEG_MAX_COMPONENT];

} nvjpegImage_t;

The nvjpegImage_t structure (or structures, in the case of batched decode) is filled with the pointers and pitches of the allocated output buffers.

| Member | Description |
|---|---|
| NVJPEG_MAX_COMPONENT | Maximum number of color components the nvJPEG library supports. For generic images, this is the maximum number of encoded channels that the library is able to decompress. |

2.2.10. nvJPEG Device Memory Allocator Interface

typedef int (*tDevMalloc)(void**, size_t);

typedef int (*tDevFree)(void*);

typedef struct {

tDevMalloc dev_malloc;

tDevFree dev_free;

} nvjpegDevAllocator_t;

Users can tell the library to use their own device memory allocator. When the nvjpegDevAllocator_t *allocator parameter of the nvjpegCreate() or nvjpegCreateEx() function is set to a pointer to a properly filled nvjpegDevAllocator_t structure, that structure is used for allocating and releasing device memory. The function prototypes for the memory allocation and memory freeing functions are similar to those of cudaMalloc() and cudaFree(). They should return 0 on success and non-zero otherwise.

If the nvjpegDevAllocator_t *allocator parameter of the nvjpegCreate() or nvjpegCreateEx() function is set to NULL, the default memory allocation functions cudaMalloc() and cudaFree() are used. When the library handle is created with nvjpegCreateSimple(), the default device memory allocator is used.

2.2.11. nvJPEG Extended Device Memory Allocator Interface

typedef int (*tDevMallocV2)(void* ctx, void **ptr, size_t size, cudaStream_t stream);

typedef int (*tDevFreeV2)(void* ctx, void *ptr, size_t size, cudaStream_t stream);

typedef struct

{

tDevMallocV2 dev_malloc;

tDevFreeV2 dev_free;

void *dev_ctx;

} nvjpegDevAllocatorV2_t;

Extended device allocators support stream-ordered allocations along with the user-defined context information dev_ctx. When invoking the allocators, nvJPEG passes dev_ctx as input to the extended device allocators.
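The context pointer lets an extended allocator carry state without globals. The sketch below passes a statistics structure through dev_ctx and tracks the bytes in use and the peak. It is an illustration under several assumptions: the interface is redeclared locally (demo_dev_allocator_v2), cudaStream_t is replaced by an opaque pointer, host malloc()/free() stand in for stream-ordered calls such as cudaMallocAsync()/cudaFreeAsync(), and the size passed to dev_free is assumed to match the size originally allocated.

```c
#include <stdlib.h>

typedef void *demo_stream_t; /* stand-in for cudaStream_t */

/* Local copies of the documented extended allocator types. */
typedef int (*demo_tDevMallocV2)(void *ctx, void **ptr, size_t size, demo_stream_t stream);
typedef int (*demo_tDevFreeV2)(void *ctx, void *ptr, size_t size, demo_stream_t stream);

typedef struct {
    demo_tDevMallocV2 dev_malloc;
    demo_tDevFreeV2 dev_free;
    void *dev_ctx;
} demo_dev_allocator_v2;

/* User-defined context handed back on every call through dev_ctx. */
typedef struct {
    size_t in_use;
    size_t peak;
} demo_alloc_stats;

static int stats_malloc(void *ctx, void **ptr, size_t size, demo_stream_t stream)
{
    (void)stream; /* real code: cudaMallocAsync(ptr, size, stream) */
    demo_alloc_stats *st = ctx;
    *ptr = malloc(size);
    if (*ptr == NULL) return 1;
    st->in_use += size;
    if (st->in_use > st->peak) st->peak = st->in_use;
    return 0;
}

/* Assumes size equals the size that was allocated for ptr. */
static int stats_free(void *ctx, void *ptr, size_t size, demo_stream_t stream)
{
    (void)stream; /* real code: cudaFreeAsync(ptr, stream) */
    demo_alloc_stats *st = ctx;
    if (ptr != NULL) st->in_use -= size;
    free(ptr);
    return 0;
}

demo_dev_allocator_v2 demo_make_stats_allocator(demo_alloc_stats *stats)
{
    demo_dev_allocator_v2 a = { stats_malloc, stats_free, stats };
    return a;
}
```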

2.2.12. nvJPEG Opaque JPEG Decoding State Handle

struct nvjpegJpegState;

typedef struct nvjpegJpegState* nvjpegJpegState_t;

The nvjpegJpegState structure stores temporary JPEG information. It should be initialized before any use. The JPEG state handle can be reused after it has been used in another decoding. The same JPEG state handle should be used across the decoding phases for the same image or batch. Multiple threads may share the JPEG state handle only when processing the same batch during the first phase (nvjpegDecodePhaseOne).

2.2.13. nvJPEG Opaque Library Handle Struct

struct nvjpegHandle;

typedef struct nvjpegHandle* nvjpegHandle_t;

The library handle is used in all subsequent nvJPEG library calls, and should be initialized first.

The library handle is thread safe, and can be used by multiple threads simultaneously.

2.2.14. nvJPEG Output Pointer Struct

typedef struct {

unsigned char * channel[NVJPEG_MAX_COMPONENT];

size_t pitch[NVJPEG_MAX_COMPONENT];

} nvjpegImage_t;

The nvjpegImage_t struct holds the pointers to the output buffers, and the corresponding strides (pitches) of those buffers, for image decoding.

Refer to Single Image Decoding on how to set up the nvjpegImage_t struct.

2.2.15. nvJPEG Jpeg Encoding

typedef enum {

NVJPEG_ENCODING_UNKNOWN = 0x0,

NVJPEG_ENCODING_BASELINE_DCT = 0xc0,

NVJPEG_ENCODING_EXTENDED_SEQUENTIAL_DCT_HUFFMAN = 0xc1,

NVJPEG_ENCODING_PROGRESSIVE_DCT_HUFFMAN = 0xc2,

NVJPEG_ENCODING_LOSSLESS_HUFFMAN = 0xc3

} nvjpegJpegEncoding_t;

The nvjpegJpegEncoding_t enum lists the JPEG encoding types that are supported by the nvJPEG library. The enum values are based on the markers defined in the JPEG specification.

| Member | Description |
|---|---|
| NVJPEG_ENCODING_UNKNOWN | This value is returned for all the JPEG markers not supported by the nvJPEG library. |
| NVJPEG_ENCODING_BASELINE_DCT | Corresponds to the JPEG marker 0xc0; refer to the JPEG spec for more details. |
| NVJPEG_ENCODING_EXTENDED_SEQUENTIAL_DCT_HUFFMAN | Corresponds to the JPEG marker 0xc1; refer to the JPEG spec for more details. |
| NVJPEG_ENCODING_PROGRESSIVE_DCT_HUFFMAN | Corresponds to the JPEG marker 0xc2; refer to the JPEG spec for more details. |
| NVJPEG_ENCODING_LOSSLESS_HUFFMAN | Corresponds to the JPEG marker 0xc3; refer to the JPEG spec for more details. |
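Because the enum values equal the start-of-frame (SOFn) marker codes, the first frame header in a stream maps directly onto these members. The sketch below is a simplified marker walker written for illustration, not nvJPEG's parser: demo_jpeg_encoding() is hypothetical, assumes a well-formed stream, and returns 0 (the value of NVJPEG_ENCODING_UNKNOWN) when no SOF0 to SOF3 marker appears before the start of scan.

```c
#include <stddef.h>

/* Returns the SOFn marker code (0xc0..0xc3) of the first frame header,
   or 0 if no such marker precedes SOS/EOI or the data is malformed. */
int demo_jpeg_encoding(const unsigned char *data, size_t len)
{
    if (len < 4 || data[0] != 0xFF || data[1] != 0xD8) return 0; /* need SOI */
    size_t pos = 2;
    while (pos + 4 <= len) {
        if (data[pos] != 0xFF) return 0;         /* expected a marker */
        unsigned char marker = data[pos + 1];
        if (marker == 0xFF) { ++pos; continue; } /* fill byte before a marker */
        if (marker >= 0xC0 && marker <= 0xC3) return marker;
        if (marker == 0xDA || marker == 0xD9) return 0; /* SOS or EOI reached */
        /* Any other segment: skip it using its big-endian length field,
           which counts the two length bytes themselves. */
        size_t seg_len = ((size_t)data[pos + 2] << 8) | data[pos + 3];
        if (seg_len < 2) return 0;
        pos += 2 + seg_len;
    }
    return 0;
}
```

For example, a stream consisting of SOI, a short APP0 segment, and an SOF2 header yields 0xc2, which matches NVJPEG_ENCODING_PROGRESSIVE_DCT_HUFFMAN.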

2.2.16. nvJPEG Scale Factor

typedef enum {

NVJPEG_SCALE_NONE = 0,

NVJPEG_SCALE_1_BY_2 = 1,

NVJPEG_SCALE_1_BY_4 = 2,

NVJPEG_SCALE_1_BY_8 = 3

} nvjpegScaleFactor_t;

The nvjpegScaleFactor_t enum lists all the scale factors supported by the library. This feature is supported when nvJPEG handles are instantiated using NVJPEG_BACKEND_HARDWARE.

| Member | Description |
|---|---|
| NVJPEG_SCALE_NONE | Decoded output is not scaled. |
| NVJPEG_SCALE_1_BY_2 | Decoded output width and height are scaled by a factor of 1/2. |
| NVJPEG_SCALE_1_BY_4 | Decoded output width and height are scaled by a factor of 1/4. |
| NVJPEG_SCALE_1_BY_8 | Decoded output width and height are scaled by a factor of 1/8. |
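Since the enum value n corresponds to a scale of 1/2^n, the output size can be computed with a shift. This section does not say how dimensions that are not evenly divisible are rounded; the sketch assumes rounding up, which never allocates less than rounding down would, so check the actual output dimensions before relying on exact values. demo_scaled_extent() is a hypothetical helper.

```c
/* Values mirror nvjpegScaleFactor_t as listed above. */
typedef enum {
    DEMO_SCALE_NONE = 0,
    DEMO_SCALE_1_BY_2 = 1,
    DEMO_SCALE_1_BY_4 = 2,
    DEMO_SCALE_1_BY_8 = 3
} demo_scale;

/* Scaled extent for one dimension with divisor 2^factor.
   Rounding up is an assumption of this sketch, not documented behavior. */
int demo_scaled_extent(int extent, demo_scale factor)
{
    int divisor = 1 << (int)factor;
    return (extent + divisor - 1) / divisor;
}
```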

2.2.17. nvJPEG Flags

#define NVJPEG_FLAGS_DEFAULT 0

#define NVJPEG_FLAGS_HW_DECODE_NO_PIPELINE 1

#define NVJPEG_FLAGS_ENABLE_MEMORY_POOLS 2

#define NVJPEG_FLAGS_BITSTREAM_STRICT 4

#define NVJPEG_FLAGS_REDUCED_MEMORY_DECODE 8

#define NVJPEG_FLAGS_REDUCED_MEMORY_DECODE_ZERO_COPY 16

#define NVJPEG_FLAGS_UPSAMPLING_WITH_INTERPOLATION 32

nvJPEG flags provide additional controls when initializing the library using nvjpegCreateEx() or nvjpegCreateExV2(). Because the flags are bit fields, they can be combined with a bitwise OR.

| Member | Description |
|---|---|
| NVJPEG_FLAGS_DEFAULT | Corresponds to default library behavior. |
| NVJPEG_FLAGS_HW_DECODE_NO_PIPELINE | To be used when the library is initialized with NVJPEG_BACKEND_HARDWARE; it is ignored for other back-ends. In batched decode mode, nvJPEG buffers additional images to achieve optimal performance. Use this flag to disable buffering of additional images. |
| NVJPEG_FLAGS_ENABLE_MEMORY_POOLS [Deprecated] | Starting with CUDA 11.1, this flag is ignored. |
| NVJPEG_FLAGS_BITSTREAM_STRICT | By default, the nvJPEG library tries to decode a bitstream even if it does not strictly follow the JPEG specification. With this flag, an error is returned in such cases. |
| NVJPEG_FLAGS_REDUCED_MEMORY_DECODE | When using |
| NVJPEG_FLAGS_REDUCED_MEMORY_DECODE_ZERO_COPY | Using this flag enables zero-copy memory when feasible on supported platforms. |
| NVJPEG_FLAGS_UPSAMPLING_WITH_INTERPOLATION | Using this flag enables the decoder to use interpolation when performing chroma upsampling during the YCbCr to RGB conversion stage. |
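Flags are combined with bitwise OR and tested with bitwise AND. The sketch below defines the documented values only if nvjpeg.h has not already defined them, so it also compiles on its own; the particular combination in demo_create_flags() is an arbitrary example of a value that could be passed as the flags argument of nvjpegCreateEx().

```c
/* Documented flag values; skipped if nvjpeg.h has already defined them. */
#ifndef NVJPEG_FLAGS_DEFAULT
#define NVJPEG_FLAGS_DEFAULT 0
#define NVJPEG_FLAGS_HW_DECODE_NO_PIPELINE 1
#define NVJPEG_FLAGS_ENABLE_MEMORY_POOLS 2
#define NVJPEG_FLAGS_BITSTREAM_STRICT 4
#define NVJPEG_FLAGS_REDUCED_MEMORY_DECODE 8
#define NVJPEG_FLAGS_REDUCED_MEMORY_DECODE_ZERO_COPY 16
#define NVJPEG_FLAGS_UPSAMPLING_WITH_INTERPOLATION 32
#endif

/* Example combination: no extra HW pipelining, strict bitstream checks. */
unsigned int demo_create_flags(void)
{
    return NVJPEG_FLAGS_DEFAULT
         | NVJPEG_FLAGS_HW_DECODE_NO_PIPELINE
         | NVJPEG_FLAGS_BITSTREAM_STRICT;
}

/* Nonzero if every bit of flag is set in flags. */
int demo_has_flag(unsigned int flags, unsigned int flag)
{
    return (flags & flag) == flag;
}
```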

2.2.18. nvJPEG Exif Orientation

typedef enum {

NVJPEG_ORIENTATION_UNKNOWN = 0,

NVJPEG_ORIENTATION_NORMAL = 1,

NVJPEG_ORIENTATION_FLIP_HORIZONTAL = 2,

NVJPEG_ORIENTATION_ROTATE_180 = 3,

NVJPEG_ORIENTATION_FLIP_VERTICAL = 4,

NVJPEG_ORIENTATION_TRANSPOSE = 5,

NVJPEG_ORIENTATION_ROTATE_90 = 6,

NVJPEG_ORIENTATION_TRANSVERSE = 7,

NVJPEG_ORIENTATION_ROTATE_270 = 8

} nvjpegExifOrientation_t;

The nvjpegExifOrientation_t enum represents the Exif orientation in a JFIF (JPEG) file. Exif orientation information is typically used to denote the digital camera sensor orientation at the time of image capture.

| Member | Description |
|---|---|
| NVJPEG_ORIENTATION_UNKNOWN | Exif orientation information is not available in the bitstream. |
| NVJPEG_ORIENTATION_NORMAL | Decode output remains unchanged. |
| NVJPEG_ORIENTATION_FLIP_HORIZONTAL | Decoded output should be mirrored/flipped horizontally. |
| NVJPEG_ORIENTATION_ROTATE_180 | Decoded output should be rotated 180 degrees. |
| NVJPEG_ORIENTATION_FLIP_VERTICAL | Decoded output should be mirrored/flipped vertically. |
| NVJPEG_ORIENTATION_TRANSPOSE | Decoded output should be flipped/mirrored horizontally followed by a 90-degree counter-clockwise rotation. |
| NVJPEG_ORIENTATION_ROTATE_90 | Decoded output should be rotated 90 degrees counter-clockwise. |
| NVJPEG_ORIENTATION_TRANSVERSE | Decoded output should be flipped/mirrored horizontally followed by a 270-degree counter-clockwise rotation. |
| NVJPEG_ORIENTATION_ROTATE_270 | Decoded output should be rotated 270 degrees counter-clockwise. |
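When sizing buffers for a re-oriented image, note that the four orientations with a quarter-turn rotation (TRANSPOSE, ROTATE_90, TRANSVERSE, and ROTATE_270, values 5 to 8) swap width and height, while the other orientations keep them. The sketch below encodes that rule with hypothetical helpers; it computes the displayed size only and does not rotate any pixels.

```c
#include <stdbool.h>

/* True for orientation values 5..8 (TRANSPOSE, ROTATE_90, TRANSVERSE,
   ROTATE_270), which all include a quarter-turn rotation. */
bool demo_orientation_swaps_dims(int exif_orientation)
{
    return exif_orientation >= 5 && exif_orientation <= 8;
}

/* Computes the width/height after applying the orientation. */
void demo_oriented_size(int exif_orientation, int width, int height,
                        int *out_width, int *out_height)
{
    bool swap = demo_orientation_swaps_dims(exif_orientation);
    *out_width = swap ? height : width;
    *out_height = swap ? width : height;
}
```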

2.3. nvJPEG API Reference

This section describes the nvJPEG decoder API.

2.3.1. nvJPEG Helper API Reference

2.3.1.1. nvjpegGetProperty()

Gets the numeric value for the major or minor version, or the patch level, of the nvJPEG library.

Signature:

nvjpegStatus_t nvjpegGetProperty(

libraryPropertyType type,

int *value);

Parameters:

| Parameter | Input / Output | Memory | Description |
|---|---|---|---|
| type | Input | Host | One of the supported libraryPropertyType values. |
| value | Output | Host | The numeric value corresponding to the specific libraryPropertyType requested. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.2. nvjpegGetCudartProperty()

Gets the numeric value for the major version, minor version, or patch level of the CUDA toolkit that was used to build the nvJPEG library. For the same information on the nvJPEG library itself, see nvjpegGetProperty().

Signature:

nvjpegStatus_t nvjpegGetCudartProperty(

libraryPropertyType type,

int *value);

Parameters:

| Parameter | Input / Output | Memory | Description |
|---|---|---|---|
| type | Input | Host | One of the supported libraryPropertyType values. |
| value | Output | Host | The numeric value corresponding to the specific libraryPropertyType requested. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.3. nvjpegCreate() [DEPRECATED]

Allocates and initializes the library handle.

Note

This function is deprecated. Use either nvjpegCreateSimple() or nvjpegCreateEx() functions to create the library handle.

Signature:

nvjpegStatus_t nvjpegCreate(

nvjpegBackend_t backend,

nvjpegDevAllocator_t *allocator,

nvjpegHandle_t *handle);

Parameters:

| Parameter | Input / Output | Memory | Description |
|---|---|---|---|
| backend | Input | Host | Backend parameter for nvjpegDecodeBatched() API. If this is set to DEFAULT, then it automatically chooses one of the underlying algorithms. |
| allocator | Input | Host | Device memory allocator. See nvJPEG Device Memory Allocator Interface structure description. If NULL is provided, then the default CUDA runtime cudaMalloc() and cudaFree() functions are used. |
| handle | Input/Output | Host | The library handle. |

The nvjpegBackend_t parameter is an enum type with the following enumerated values:

typedef enum {

NVJPEG_BACKEND_DEFAULT = 0,

NVJPEG_BACKEND_HYBRID = 1,

} nvjpegBackend_t;

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.4. nvjpegCreateSimple()

Allocates and initializes the library handle, with the default codec implementation selected by the library and the default memory allocators.

Signature:

nvjpegStatus_t nvjpegCreateSimple(nvjpegHandle_t *handle);

Parameters:

| Parameter | Input / Output | Memory | Description |
|---|---|---|---|
| handle | Input/Output | Host | The library handle. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.5. nvjpegCreateEx()

Allocates and initializes the library handle using the provided arguments.

Signature:

nvjpegStatus_t nvjpegCreateEx(nvjpegBackend_t backend,

nvjpegDevAllocator_t *dev_allocator,

nvjpegPinnedAllocator_t *pinned_allocator,

unsigned int flags,

nvjpegHandle_t *handle);

Parameters:

| Parameter | Input / Output | Memory | Description |
|---|---|---|---|
| backend | Input | Host | Backend parameter for nvjpegDecodeBatched() API. If this is set to DEFAULT, then it automatically chooses one of the underlying algorithms. |
| dev_allocator | Input | Host | Device memory allocator. See nvJPEG Device Memory Allocator Interface. |
| pinned_allocator | Input | Host | Pinned host memory allocator. See nvJPEG Host Pinned Memory Allocator Interface. |
| flags | Input | Host | Refer to nvJPEG Flags for details. |
| handle | Input/Output | Host | The library handle. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.6. nvjpegCreateExV2()

Allocates and initializes the library handle using the provided arguments.

Signature:

nvjpegStatus_t nvjpegCreateExV2(nvjpegBackend_t backend,

nvjpegDevAllocatorV2_t *dev_allocator,

nvjpegPinnedAllocatorV2_t *pinned_allocator,

unsigned int flags,

nvjpegHandle_t *handle);

Parameters:

| Parameter | Input / Output | Memory | Description |
|---|---|---|---|
| backend | Input | Host | Backend parameter for nvjpegDecodeBatched() API. If this is set to DEFAULT, then it automatically chooses one of the underlying algorithms. |
| dev_allocator | Input | Host | Extended device memory allocator. See nvJPEG Extended Device Memory Allocator Interface. |
| pinned_allocator | Input | Host | Extended pinned memory allocator. See nvJPEG Extended Host Pinned Memory Allocator Interface. |
| flags | Input | Host | Refer to nvJPEG Flags for details. |
| handle | Input/Output | Host | The library handle. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.7. nvjpegDestroy()

Releases the library handle.

Signature:

nvjpegStatus_t nvjpegDestroy(nvjpegHandle_t handle);

Parameters:

| Parameter | Input / Output | Memory | Description |
|---|---|---|---|
| handle | Input/Output | Host | The library handle to release. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.8. nvjpegSetDeviceMemoryPadding()

Use the provided padding for all device memory allocations with the specified library handle. A larger padding helps amortize the cost of device memory reallocations.

Signature:

nvjpegStatus_t nvjpegSetDeviceMemoryPadding(

size_t padding,

nvjpegHandle_t handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

size_t padding |

Input |

Host |

Device memory padding to use for all further device memory allocations. |

nvjpegHandle_t handle |

Input/Output |

Host |

The library handle. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.9. nvjpegGetDeviceMemoryPadding()

Retrieves the device memory padding that is currently used for the specified library handle.

Signature:

nvjpegStatus_t nvjpegGetDeviceMemoryPadding(

size_t *padding,

nvjpegHandle_t handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

size_t *padding |

Output |

Host |

Device memory padding that is currently used for device memory allocations. |

nvjpegHandle_t handle |

Input/Output |

Host |

The library handle. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.10. nvjpegSetPinnedMemoryPadding()

Uses the provided padding for all pinned host memory allocations made with the specified library handle. A large padding value helps amortize the cost of pinned host memory reallocations.

Signature:

nvjpegStatus_t nvjpegSetPinnedMemoryPadding(

size_t padding,

nvjpegHandle_t handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

size_t padding |

Input |

Host |

Pinned host memory padding to use for all further pinned host memory allocations. |

nvjpegHandle_t handle |

Input/Output |

Host |

The library handle. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.11. nvjpegGetPinnedMemoryPadding()

Retrieves the pinned host memory padding that is currently used for the specified library handle.

Signature:

nvjpegStatus_t nvjpegGetPinnedMemoryPadding(

size_t *padding,

nvjpegHandle_t handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

size_t *padding |

Output |

Host |

Pinned host memory padding that is currently used for pinned host memory allocations. |

nvjpegHandle_t handle |

Input/Output |

Host |

The library handle. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.12. nvjpegGetHardwareDecoderInfo()

Retrieves hardware decoder details, such as the number of engines and the number of cores available in each engine.

Signature:

nvjpegStatus_t nvjpegGetHardwareDecoderInfo(nvjpegHandle_t handle,

unsigned int* num_engines,

unsigned int* num_cores_per_engine);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

unsigned int* num_engines |

Input/Output |

Host |

Retrieves the number of engines available for decoding. A value of 0 indicates that the hardware decoder is not available. |

unsigned int* num_cores_per_engine |

Input/Output |

Host |

Retrieves the number of cores per engine. A value of 0 indicates that the hardware decoder is not available. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.
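A minimal host-side sketch of how an application might interpret the two values reported by nvjpegGetHardwareDecoderInfo(), for example before deciding whether to request NVJPEG_BACKEND_HARDWARE. The helper names are hypothetical; the only rule taken from this section is that a value of 0 in either output means the hardware decoder is not available.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical helper: true when nvjpegGetHardwareDecoderInfo() reported a
 * usable hardware decoder. A value of 0 in either output means "not available". */
static bool hw_decoder_available(unsigned int num_engines,
                                 unsigned int num_cores_per_engine)
{
    return num_engines > 0 && num_cores_per_engine > 0;
}

/* Hypothetical helper: total number of hardware decode cores, or 0 if none. */
static unsigned int hw_decoder_total_cores(unsigned int num_engines,
                                           unsigned int num_cores_per_engine)
{
    if (!hw_decoder_available(num_engines, num_cores_per_engine))
        return 0;
    return num_engines * num_cores_per_engine;
}
```

A caller could, for instance, fall back to NVJPEG_BACKEND_DEFAULT when hw_decoder_available() returns false.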

2.3.1.13. nvjpegJpegStateCreate()

Allocates and initializes the internal structure required for the JPEG processing.

Signature:

nvjpegStatus_t nvjpegJpegStateCreate(

nvjpegHandle_t handle,

nvjpegJpegState_t *jpeg_handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

nvjpegJpegState_t *jpeg_handle |

Input/Output |

Host |

The image state handle. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.14. nvjpegJpegStateDestroy()

Releases the image internal structure.

Signature:

nvjpegStatus_t nvjpegJpegStateDestroy(nvjpegJpegState_t handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegState_t handle |

Input/Output |

Host |

The image state handle. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.15. nvjpegDecoderCreate()

Creates a decoder handle.

Signature:

nvjpegStatus_t nvjpegDecoderCreate(

nvjpegHandle_t nvjpeg_handle,

nvjpegBackend_t implementation,

nvjpegJpegDecoder_t* decoder_handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t nvjpeg_handle |

Input |

Host |

Library handle. |

nvjpegBackend_t implementation |

Input |

Host |

Backend parameter for the decoder_handle. The backend applies to all functions in the decoupled API when they are called with this handle. |

nvjpegJpegDecoder_t* decoder_handle |

Input/Output |

Host |

Decoder handle. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.16. nvjpegDecoderDestroy()

Destroys the decoder handle.

Signature:

nvjpegStatus_t nvjpegDecoderDestroy(

nvjpegJpegDecoder_t decoder_handle);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegDecoder_t decoder_handle |

Input/Output |

Host |

Decoder handle. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.17. nvjpegDecoderJpegSupported()

Determines whether the decoder_handle is able to handle the bit-stream stored in jpeg_stream.

Signature:

nvjpegStatus_t nvjpegDecoderJpegSupported(

nvjpegJpegDecoder_t decoder_handle,

nvjpegJpegStream_t jpeg_stream,

nvjpegDecodeParams_t decode_params,

int* is_supported);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegDecoder_t decoder_handle |

Input |

Host |

Decoder handle. |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream metadata. |

nvjpegDecodeParams_t decode_params |

Input |

Host |

Decoder output configuration |

int* is_supported |

Output |

Host |

Return value of 0 indicates that the bitstream can be decoded by the decoder_handle; a nonzero value indicates that it is not supported. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.18. nvjpegDecoderStateCreate()

Creates the decoder_state internal structure. The decoder_state is associated with the nvJPEG Backend implementation that was used to create the decoder_handle.

Signature:

nvjpegStatus_t nvjpegDecoderStateCreate(

nvjpegHandle_t nvjpeg_handle,

nvjpegJpegDecoder_t decoder_handle,

nvjpegJpegState_t* decoder_state);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t nvjpeg_handle |

Input |

Host |

Library handle. |

nvjpegJpegDecoder_t decoder_handle |

Input |

Host |

Decoder handle. |

nvjpegJpegState_t* decoder_state |

Input/Output |

Host |

nvJPEG Image State Handle. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.19. nvjpegJpegStreamCreate()

Creates jpeg_stream that is used to parse the JPEG bitstream and store bitstream parameters.

Signature:

nvjpegStatus_t nvjpegJpegStreamCreate(

nvjpegHandle_t handle,

nvjpegJpegStream_t *jpeg_stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

Library handle |

nvjpegJpegStream_t *jpeg_stream |

Input |

Host |

Bitstream handle |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.20. nvjpegJpegStreamDestroy()

Destroys the jpeg_stream structure.

Signature:

nvjpegStatus_t nvjpegJpegStreamDestroy(

nvjpegJpegStream_t jpeg_stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream handle |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.21. nvjpegBufferPinnedCreate()

Creates a pinned buffer handle.

Signature:

nvjpegStatus_t nvjpegBufferPinnedCreate(

nvjpegHandle_t handle,

nvjpegPinnedAllocator_t* pinned_allocator,

nvjpegBufferPinned_t* buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

Library handle. |

nvjpegPinnedAllocator_t* pinned_allocator |

Input |

Host |

Pinned host memory allocator. See the nvJPEG Pinned Memory Allocator Interface structure description. |

nvjpegBufferPinned_t* buffer |

Input/Output |

Host |

nvJPEG pinned buffer object. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.22. nvjpegBufferPinnedCreateV2()

Creates a pinned buffer handle using extended allocators.

Signature:

nvjpegStatus_t nvjpegBufferPinnedCreateV2(

nvjpegHandle_t handle,

nvjpegPinnedAllocatorV2_t* pinned_allocator,

nvjpegBufferPinned_t* buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

Library handle. |

nvjpegPinnedAllocatorV2_t* pinned_allocator |

Input |

Host |

Extended pinned host memory allocator. See nvJPEG Extended Host Pinned Memory Allocator Interface structure description. |

nvjpegBufferPinned_t* buffer |

Input/Output |

Host |

nvJPEG pinned buffer object. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.23. nvjpegBufferPinnedDestroy()

Destroys a pinned buffer handle.

Signature:

nvjpegStatus_t nvjpegBufferPinnedDestroy(

nvjpegBufferPinned_t buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegBufferPinned_t buffer |

Input |

Host |

nvJPEG pinned buffer object. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.24. nvjpegStateAttachPinnedBuffer()

Links the nvJPEG pinned buffer handle to the decoder_state. The pinned_buffer is used by the decoder to store the intermediate information that is used across the decoding stages. A pinned buffer can be attached to different decoder states, which makes it possible to switch between implementations without allocating extra memory.

Signature:

nvjpegStatus_t nvjpegStateAttachPinnedBuffer(

nvjpegJpegState_t decoder_state,

nvjpegBufferPinned_t pinned_buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegState_t decoder_state |

Input |

Host |

nvJPEG decoder state. |

nvjpegBufferPinned_t pinned_buffer |

Input |

Host |

nvJPEG pinned buffer container. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.25. nvjpegBufferPinnedRetrieve()

Retrieves the pinned memory pointer and size from the nvJPEG pinned buffer handle. This allows the application to reuse the memory once the decode is complete.

Signature:

nvjpegStatus_t nvjpegBufferPinnedRetrieve(

nvjpegBufferPinned_t buffer,

size_t* size, void** ptr);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegBufferPinned_t buffer |

Input |

Host |

nvJPEG pinned buffer container. |

size_t* size |

Input/Output |

Host |

Size in bytes of the pinned buffer. |

void** ptr |

Input/Output |

Host |

Pointer to the pinned buffer. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.26. nvjpegBufferPinnedResize()

Resizes the pinned buffer to the specified size in bytes. This API can be used to pre-allocate a large pinned buffer and avoid allocator calls during decode.

Signature:

nvjpegStatus_t nvjpegBufferPinnedResize(nvjpegBufferPinned_t buffer,

size_t size,

cudaStream_t stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegBufferPinned_t buffer |

Input |

Host |

nvJPEG pinned buffer container. |

size_t size |

Input |

Host |

Size in bytes of the pinned buffer. |

cudaStream_t stream |

Input |

Host |

CUDA stream to use when the buffer is resized. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.27. nvjpegBufferDeviceCreate()

Creates the device buffer handle.

Signature:

nvjpegStatus_t nvjpegBufferDeviceCreate(

nvjpegHandle_t handle,

nvjpegDevAllocator_t* device_allocator,

nvjpegBufferDevice_t* buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

Library handle. |

nvjpegDevAllocator_t* device_allocator |

Input |

Host |

Device memory allocator. See the nvJPEG Device Memory Allocator Interface structure description. |

nvjpegBufferDevice_t* buffer |

Input/Output |

Host |

nvJPEG device buffer container. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.28. nvjpegBufferDeviceCreateV2()

Creates the device buffer handle using extended allocators.

Signature:

nvjpegStatus_t nvjpegBufferDeviceCreateV2(

nvjpegHandle_t handle,

nvjpegDevAllocatorV2_t* device_allocator,

nvjpegBufferDevice_t* buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

Library handle. |

nvjpegDevAllocatorV2_t* device_allocator |

Input |

Host |

Extended device memory allocator. See the nvJPEG Extended Device Memory Allocator Interface structure description. |

nvjpegBufferDevice_t* buffer |

Input/Output |

Host |

nvJPEG device buffer container. |

Returns:

nvjpegStatus_t - An error code as specified in nvJPEG API Return Codes.

2.3.1.29. nvjpegBufferDeviceDestroy()

Destroys the device buffer handle.

Signature:

nvjpegStatus_t nvjpegBufferDeviceDestroy(

nvjpegBufferDevice_t buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegBufferDevice_t buffer |

Input |

Host/Device |

nvJPEG device buffer container. Device pointers are stored within the host structures. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.30. nvjpegStateAttachDeviceBuffer()

Links the nvJPEG device buffer handle to the decoder_state. The device_buffer is used by the decoder to store the intermediate information that is used across the decoding stages. A device buffer can be attached to different decoder states, which makes it possible to switch between implementations without allocating extra memory.

Signature:

nvjpegStatus_t nvjpegStateAttachDeviceBuffer(

nvjpegJpegState_t decoder_state,

nvjpegBufferDevice_t device_buffer);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegState_t decoder_state |

Input |

Host |

nvJPEG decoder state. |

nvjpegBufferDevice_t device_buffer |

Input |

Host/Device |

nvJPEG device buffer container. Device pointers are stored within the host structures. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.31. nvjpegBufferDeviceRetrieve()

Retrieves the device memory pointer and size from the nvJPEG device buffer handle. This allows the application to reuse the memory after the decode is complete.

Signature:

nvjpegStatus_t nvjpegBufferDeviceRetrieve(

nvjpegBufferDevice_t buffer,

size_t* size,

void** ptr);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegBufferDevice_t buffer |

Input |

Host |

nvJPEG device buffer container. |

size_t* size |

Input/Output |

Host |

Device buffer size in bytes. |

void** ptr |

Input/Output |

Host |

Pointer to the device buffer. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.32. nvjpegBufferDeviceResize()

Resizes the device buffer to the specified size in bytes. This API can be used to pre-allocate a large device buffer and avoid allocator calls during decode.

Signature:

nvjpegStatus_t nvjpegBufferDeviceResize(nvjpegBufferDevice_t buffer,

size_t size,

cudaStream_t stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegBufferDevice_t buffer |

Input |

Host |

nvJPEG device buffer container. |

size_t size |

Input |

Host |

Size in bytes of the device buffer. |

cudaStream_t stream |

Input |

Host |

CUDA stream to use when the buffer is resized. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.33. nvjpegDecodeParamsCreate()

Creates a handle for the decode parameters. The parameters that can be programmed include the output format, ROI decode, and CMYK-to-RGB conversion.

Signature:

nvjpegStatus_t nvjpegDecodeParamsCreate(

nvjpegHandle_t handle,

nvjpegDecodeParams_t *decode_params);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

Library handle. |

nvjpegDecodeParams_t *decode_params |

Input/Output |

Host |

Decode output parameters. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.1.34. nvjpegDecodeParamsDestroy()

Destroys the decode_params handle.

Signature:

nvjpegStatus_t nvjpegDecodeParamsDestroy(

nvjpegDecodeParams_t decode_params);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegDecodeParams_t decode_params |

Input/Output |

Host |

Decode output parameters. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.2. Retrieve Encoded Image Information API

The helper functions for retrieving the encoded image information.

2.3.2.1. nvjpegGetImageInfo()

Decodes the JPEG header and retrieves the basic information about the image.

Signature:

nvjpegStatus_t nvjpegGetImageInfo(

nvjpegHandle_t handle,

const unsigned char *data,

size_t length,

int *nComponents,

nvjpegChromaSubsampling_t *subsampling,

int *widths,

int *heights);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

const unsigned char *data |

Input |

Host |

Pointer to the encoded data. |

size_t length |

Input |

Host |

Size of the encoded data in bytes. |

int *nComponents |

Output |

Host |

Number of channels in the JPEG-encoded data. |

nvjpegChromaSubsampling_t *subsampling |

Output |

Host |

Chroma subsampling for the 1- or 3-channel encoding. |

int *widths |

Output |

Host |

Pointer to the first element of array of size NVJPEG_MAX_COMPONENT, where the width of each channel (up to NVJPEG_MAX_COMPONENT) will be saved. If the channel is not encoded, then the corresponding value would be zero. |

int *heights |

Output |

Host |

Pointer to the first element of array of size NVJPEG_MAX_COMPONENT, where the height of each channel (up to NVJPEG_MAX_COMPONENT) will be saved. If the channel is not encoded, then the corresponding value would be zero. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.
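The widths and heights returned by nvjpegGetImageInfo() are typically used to size the device buffers referenced by nvjpegImage_t. The sketch below computes a per-plane pitch and byte count for 8-bit output. It is an illustration under stated assumptions, not library code: the layout enum is hypothetical and stands in for output formats such as NVJPEG_OUTPUT_UNCHANGED (one plane per encoded component), NVJPEG_OUTPUT_Y (luma only), NVJPEG_OUTPUT_RGB/BGR (three planes at the luma size) and NVJPEG_OUTPUT_RGBI/BGRI (one interleaved plane, three bytes per pixel), and MAX_COMPONENTS is assumed to equal NVJPEG_MAX_COMPONENT.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_COMPONENTS 4  /* assumed to match NVJPEG_MAX_COMPONENT */

/* Hypothetical stand-ins for groups of nvjpegOutputFormat_t values. */
typedef enum {
    LAYOUT_PER_COMPONENT,  /* e.g. NVJPEG_OUTPUT_UNCHANGED */
    LAYOUT_LUMA_ONLY,      /* e.g. NVJPEG_OUTPUT_Y */
    LAYOUT_PLANAR_3,       /* e.g. NVJPEG_OUTPUT_RGB / NVJPEG_OUTPUT_BGR */
    LAYOUT_INTERLEAVED_3   /* e.g. NVJPEG_OUTPUT_RGBI / NVJPEG_OUTPUT_BGRI */
} output_layout;

typedef struct {
    int num_planes;
    size_t pitch[MAX_COMPONENTS];  /* bytes per row of each plane */
    size_t bytes[MAX_COMPONENTS];  /* total bytes of each plane */
} plane_plan;

/* Plan 8-bit output planes from the widths/heights filled in by
 * nvjpegGetImageInfo(). */
static plane_plan plan_output_planes(output_layout layout, int n_components,
                                     const int widths[MAX_COMPONENTS],
                                     const int heights[MAX_COMPONENTS])
{
    plane_plan p = {0};
    assert(n_components >= 1 && n_components <= MAX_COMPONENTS);
    switch (layout) {
    case LAYOUT_PER_COMPONENT:  /* each component keeps its own size */
        p.num_planes = n_components;
        for (int c = 0; c < n_components; ++c) {
            p.pitch[c] = (size_t)widths[c];
            p.bytes[c] = (size_t)widths[c] * (size_t)heights[c];
        }
        break;
    case LAYOUT_LUMA_ONLY:      /* single plane at the luma size */
        p.num_planes = 1;
        p.pitch[0] = (size_t)widths[0];
        p.bytes[0] = (size_t)widths[0] * (size_t)heights[0];
        break;
    case LAYOUT_PLANAR_3:       /* three full-resolution planes */
        p.num_planes = 3;
        for (int c = 0; c < 3; ++c) {
            p.pitch[c] = (size_t)widths[0];
            p.bytes[c] = (size_t)widths[0] * (size_t)heights[0];
        }
        break;
    case LAYOUT_INTERLEAVED_3:  /* one plane, 3 bytes per pixel */
        p.num_planes = 1;
        p.pitch[0] = 3 * (size_t)widths[0];
        p.bytes[0] = 3 * (size_t)widths[0] * (size_t)heights[0];
        break;
    }
    return p;
}
```

Each bytes[c] would then be allocated in device memory (for example with cudaMalloc()) and stored in channel[c] of the nvjpegImage_t, with pitch[c] as the matching row pitch.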

2.3.2.2. nvJPEG Stream API

These functions store the parsed bit-stream data on the host.

2.3.2.2.1. nvjpegJpegStreamParse()

Parses the bitstream and stores the metadata in the jpeg_stream struct.

Signature:

nvjpegStatus_t nvjpegJpegStreamParse(

nvjpegHandle_t handle,

const unsigned char *data,

size_t length,

int save_metadata,

int save_stream,

nvjpegJpegStream_t jpeg_stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

const unsigned char *data |

Input |

Host |

Pointer to the bit-stream. |

size_t length |

Input |

Host |

Bit-stream size. |

int save_metadata |

Input |

Host |

(Not enabled. Marked for future use.) If not 0, then the JPEG stream metadata (headers, app markers, etc.) will be saved in the internal JpegStream structure. |

int save_stream |

Input |

Host |

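If not 0, then the whole JPEG stream will be copied to the internal JpegStream structure, and the pointer to the JPEG file data will not be needed after this call.

If 0, then jpeg_stream only stores pointers into the JPEG file data, so that data must remain valid until decoding is complete. |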

nvjpegJpegStream_t jpeg_stream |

Input/Output |

Host/Device |

The nvJPEG bitstream handle that stores the parsed bitstream information. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.2.2.2. nvjpegJpegStreamParseHeader()

Parses only the header of the bitstream and stores the header information in the jpeg_stream struct.

Signature:

nvjpegStatus_t nvjpegJpegStreamParseHeader(

nvjpegHandle_t handle,

const unsigned char *data,

size_t length,

nvjpegJpegStream_t jpeg_stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

const unsigned char *data |

Input |

Host |

Pointer to the bit-stream. |

size_t length |

Input |

Host |

Bit-stream size. |

nvjpegJpegStream_t jpeg_stream |

Input/Output |

Host/Device |

The nvJPEG bitstream handle that stores the parsed bitstream information. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.2.2.3. nvjpegJpegStreamParseTables()

To be used when decoding TIFF files with JPEG compression. Parses the JPEG tables bitstream and stores the JPEG tables in jpeg_stream.

Signature:

nvjpegStatus_t nvjpegJpegStreamParseTables(

nvjpegHandle_t handle,

const unsigned char *data,

size_t length,

nvjpegJpegStream_t jpeg_stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

const unsigned char *data |

Input |

Host |

Pointer to the JPEG tables bitstream. Can be set to NULL to reset the JPEG tables. |

size_t length |

Input |

Host |

JPEG tables bitstream size. |

nvjpegJpegStream_t jpeg_stream |

Input/Output |

Host |

The nvJPEG bitstream handle that stores the parsed bitstream information. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.2.2.4. nvjpegJpegStreamGetFrameDimensions()

Extracts the JPEG frame dimensions from the bitstream.

Signature:

nvjpegStatus_t nvjpegJpegStreamGetFrameDimensions(

nvjpegJpegStream_t jpeg_stream,

unsigned int* width,

unsigned int* height);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream handle. |

unsigned int* width |

Output |

Host |

Frame width. |

unsigned int* height |

Output |

Host |

Frame height. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.
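To show what the frame-level getters report, here is a minimal host-side parser that walks the marker segments of a JPEG bitstream and reads the first Start Of Frame (SOF) header, which carries the sample precision, frame height, frame width, and number of components (ITU-T T.81, Annex B). It is a hypothetical illustration, not the nvJPEG parser; in an application, the supported route is nvjpegJpegStreamParseHeader() followed by nvjpegJpegStreamGetFrameDimensions(), nvjpegJpegStreamGetComponentsNum() and nvjpegJpegStreamGetSamplePrecision().

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    unsigned int precision;   /* sample precision in bits (P) */
    unsigned int height;      /* number of lines (Y) */
    unsigned int width;       /* samples per line (X) */
    unsigned int components;  /* number of image components (Nf) */
} sof_info;

/* SOF0..SOF15 are 0xC0..0xCF, except DHT (0xC4), JPG (0xC8) and DAC (0xCC). */
static bool is_sof_marker(unsigned char m)
{
    return m >= 0xC0 && m <= 0xCF && m != 0xC4 && m != 0xC8 && m != 0xCC;
}

/* Hypothetical helper: walk the segments after SOI and read the first SOF. */
static bool read_sof(const unsigned char *d, size_t n, sof_info *out)
{
    if (n < 4 || d[0] != 0xFF || d[1] != 0xD8)    /* must start with SOI */
        return false;
    size_t pos = 2;
    while (pos + 4 <= n) {
        if (d[pos] != 0xFF) return false;           /* expected a marker */
        unsigned char m = d[pos + 1];
        if (m == 0xFF) { pos++; continue; }         /* skip fill byte */
        if (m == 0xD9 || m == 0xDA) return false;   /* EOI or SOS before any SOF */
        /* Segment length is big-endian and includes its own two bytes. */
        size_t len = ((size_t)d[pos + 2] << 8) | d[pos + 3];
        if (len < 2 || pos + 2 + len > n) return false;
        if (is_sof_marker(m)) {
            if (len < 8) return false;
            const unsigned char *s = d + pos + 4;   /* points at P */
            out->precision  = s[0];
            out->height     = ((unsigned)s[1] << 8) | s[2];
            out->width      = ((unsigned)s[3] << 8) | s[4];
            out->components = s[5];
            return true;
        }
        pos += 2 + len;                             /* next marker */
    }
    return false;
}
```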

2.3.2.2.5. nvjpegJpegStreamGetComponentsNum()

Extracts the number of encoded components (channels) from the bitstream.

Signature:

nvjpegStatus_t nvjpegJpegStreamGetComponentsNum(

nvjpegJpegStream_t jpeg_stream,

unsigned int* components_num);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream handle. |

unsigned int* components_num |

Output |

Host |

Number of encoded channels in the input. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.2.2.6. nvjpegJpegStreamGetComponentDimensions()

Extracts the component dimensions from the bitstream.

Signature:

nvjpegStatus_t nvjpegJpegStreamGetComponentDimensions(

nvjpegJpegStream_t jpeg_stream,

unsigned int component,

unsigned int* width,

unsigned int* height);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream handle. |

unsigned int component |

Input |

Host |

Component index. |

unsigned int* width |

Output |

Host |

Component width. |

unsigned int* height |

Output |

Host |

Component height. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.
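For subsampled images, each component's dimensions follow from the frame size and the sampling factors stored in the SOF header. The sketch below applies the rounding rule from ITU-T T.81 (A.1.1), x_i = ceil(X * H_i / H_max) and y_i = ceil(Y * V_i / V_max). That nvjpegJpegStreamGetComponentDimensions() reports exactly these values is an assumption, and the helper name is hypothetical.

```c
#include <assert.h>

typedef struct { unsigned int width, height; } dims;

/* Hypothetical helper: dimensions of component i given the frame size
 * (X, Y), the component's sampling factors (h_i, v_i), and the maximum
 * sampling factors over all components (h_max, v_max). */
static dims component_dims(unsigned int X, unsigned int Y,
                           unsigned int h_i, unsigned int v_i,
                           unsigned int h_max, unsigned int v_max)
{
    assert(h_max > 0 && v_max > 0);
    dims d;
    d.width  = (X * h_i + h_max - 1) / h_max;  /* integer ceiling division */
    d.height = (Y * v_i + v_max - 1) / v_max;
    return d;
}
```

For a 4:2:0 frame of 641x481, the luma plane stays 641x481 while each chroma plane is rounded up to 321x241.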

2.3.2.2.7. nvjpegJpegStreamGetChromaSubsampling()

Gets the chroma subsampling from the jpeg_stream. For grayscale (single channel) images it returns NVJPEG_CSS_GRAY. For 3-channel images it tries to assign one of the known chroma sub-sampling values based on the sampling information present in the bitstream, else it returns NVJPEG_CSS_UNKNOWN. If the number of channels is 2 or 4, then it returns NVJPEG_CSS_UNKNOWN.

Signature:

nvjpegStatus_t nvjpegJpegStreamGetChromaSubsampling(

nvjpegJpegStream_t jpeg_stream,

nvjpegChromaSubsampling_t* chroma_subsampling);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream handle. |

nvjpegChromaSubsampling_t* chroma_subsampling |

Output |

Host |

Chroma subsampling for the 1- or 3-channel encoding. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.
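The classification described above can be approximated from the SOF sampling factors. This hypothetical classifier returns a text label instead of an nvjpegChromaSubsampling_t value and is not the library's implementation; it assumes both chroma components share the same sampling factors, and it treats 4:1:0 as a 4x2 luma-to-chroma ratio.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical classifier. h[i] and v[i] are the horizontal and vertical
 * sampling factors of component i, as stored in the SOF header. */
static const char *classify_subsampling(int n_components, const int h[], const int v[])
{
    if (n_components == 1)
        return "GRAY";
    if (n_components != 3)
        return "UNKNOWN";                     /* 2 or 4 channels: no standard label */
    if (h[1] != h[2] || v[1] != v[2] || h[1] == 0 || v[1] == 0)
        return "UNKNOWN";                     /* both chroma planes must match */
    if (h[0] % h[1] != 0 || v[0] % v[1] != 0)
        return "UNKNOWN";                     /* non-integer ratio */
    int hr = h[0] / h[1], vr = v[0] / v[1];   /* luma-to-chroma ratios */
    if (hr == 1 && vr == 1) return "444";
    if (hr == 2 && vr == 1) return "422";
    if (hr == 2 && vr == 2) return "420";
    if (hr == 1 && vr == 2) return "440";
    if (hr == 4 && vr == 1) return "411";
    if (hr == 4 && vr == 2) return "410";
    return "UNKNOWN";
}
```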

2.3.2.2.8. nvjpegJpegStreamGetJpegEncoding()

This function obtains the JPEG encoding type from the jpeg_stream. For baseline images it returns NVJPEG_ENCODING_BASELINE_DCT. For progressive images it returns NVJPEG_ENCODING_PROGRESSIVE_DCT_HUFFMAN.

Signature:

nvjpegStatus_t nvjpegJpegStreamGetJpegEncoding(

nvjpegJpegStream_t jpeg_stream,

nvjpegJpegEncoding_t* jpeg_encoding);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Input bitstream handle. |

nvjpegJpegEncoding_t* jpeg_encoding |

Output |

Host |

Encoding type obtained: baseline or progressive. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.2.2.9. nvjpegJpegStreamGetExifOrientation()

Extracts the EXIF orientation from the bitstream. Returns NVJPEG_ORIENTATION_UNKNOWN if the EXIF marker or orientation information is not present.

Signature:

nvjpegStatus_t NVJPEGAPI nvjpegJpegStreamGetExifOrientation(

nvjpegJpegStream_t jpeg_stream,

nvjpegExifOrientation_t *orientation_flag);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream handle. |

nvjpegExifOrientation_t *orientation_flag |

Output |

Host |

EXIF orientation in the JPEG stream. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.
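EXIF orientations 5 through 8 include a 90-degree turn, so an application that applies the orientation after decoding must swap the output width and height. The sketch below uses the EXIF Orientation tag numbering (1 to 8); the assumption that the nvjpegExifOrientation_t enumerators follow the same numbering, with 0 for NVJPEG_ORIENTATION_UNKNOWN, should be verified against nvjpeg.h. The enum and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* EXIF Orientation tag values; 0 is used here for "unknown". */
enum { EXIF_UNKNOWN = 0, EXIF_NORMAL = 1, EXIF_FLIP_H = 2, EXIF_ROT_180 = 3,
       EXIF_FLIP_V = 4, EXIF_TRANSPOSE = 5, EXIF_ROT_90 = 6,
       EXIF_TRANSVERSE = 7, EXIF_ROT_270 = 8 };

/* Orientations 5..8 involve a 90-degree turn, so the displayed image has
 * width and height swapped relative to the stored frame. */
static bool orientation_swaps_dims(int orientation)
{
    return orientation >= EXIF_TRANSPOSE && orientation <= EXIF_ROT_270;
}

/* Displayed dimensions after applying the orientation to a w x h frame. */
static void display_dims(int orientation, unsigned int w, unsigned int h,
                         unsigned int *out_w, unsigned int *out_h)
{
    bool swap = orientation_swaps_dims(orientation);
    *out_w = swap ? h : w;
    *out_h = swap ? w : h;
}
```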

2.3.2.2.10. nvjpegJpegStreamGetSamplePrecision()

Extracts the sample precision (bit depth) from the bitstream.

Signature:

nvjpegStatus_t NVJPEGAPI nvjpegJpegStreamGetSamplePrecision(

nvjpegJpegStream_t jpeg_stream,

unsigned int *precision);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream handle. |

unsigned int *precision |

Output |

Host |

Sample precision value. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.3. Decode API—Single Phase

Functions for decoding a single image or a batch of images in a single phase.

2.3.3.1. nvjpegDecode()

Decodes a single image, and writes the decoded image in the desired format to the output buffers. This function is asynchronous with respect to the host. All GPU tasks for this function will be submitted to the provided stream.

Starting with CUDA 11, nvjpegDecode() picks the best available backend for a given image, and the user no longer has control over this choice. If you need to select the backend, consider using nvjpegDecodeJpeg(), an API added in CUDA 11 that lets the user control the backend.

Signature:

nvjpegStatus_t nvjpegDecode(

nvjpegHandle_t handle,

nvjpegJpegState_t jpeg_handle,

const unsigned char *data,

size_t length,

nvjpegOutputFormat_t output_format,

nvjpegImage_t *destination,

cudaStream_t stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

nvjpegJpegState_t jpeg_handle |

Input |

Host |

The image state handle. |

const unsigned char *data |

Input |

Host |

Pointer to the encoded data. |

size_t length |

Input |

Host |

Size of the encoded data in bytes. |

nvjpegOutputFormat_t output_format |

Input |

Host |

Format in which the decoded output will be saved. |

nvjpegImage_t *destination |

Input/Output |

Host/Device |

Pointer to the structure that describes the output destination. This structure should be on the host (CPU), but the pointers in this structure should point to device (GPU) memory. See the nvjpegImage_t structure description. |

cudaStream_t stream |

Input |

Host |

The CUDA stream where all of the GPU work will be submitted. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.3.2. nvjpegDecodeBatchedInitialize()

This function initializes the batched decoder state. The initialization parameters include the batch size, the maximum number of CPU threads, and the specific output format in which the decoded image will be saved. This function should be called once, prior to decoding the batches of images. Any currently running batched decoding should be finished before calling this function.

Signature:

nvjpegStatus_t nvjpegDecodeBatchedInitialize(

nvjpegHandle_t handle,

nvjpegJpegState_t jpeg_handle,

int batch_size,

int max_cpu_threads,

nvjpegOutputFormat_t output_format);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

nvjpegJpegState_t jpeg_handle |

Input |

Host |

The image state handle. |

int batch_size |

Input |

Host |

Batch size. |

int max_cpu_threads |

Input |

Host |

This parameter is no longer used by the library. |

nvjpegOutputFormat_t output_format |

Input |

Host |

Format in which the decoded output will be saved. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.3.3. nvjpegDecodeBatched()

Decodes the batch of images, and writes them to the buffers described in the destination parameter in a format provided to nvjpegDecodeBatchedInitialize() function. This function is asynchronous with respect to the host. All GPU tasks for this function will be submitted to the provided stream.

Signature:

nvjpegStatus_t nvjpegDecodeBatched(

nvjpegHandle_t handle,

nvjpegJpegState_t jpeg_handle,

const unsigned char *const *data,

const size_t *lengths,

nvjpegImage_t *destinations,

cudaStream_t stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

nvjpegJpegState_t jpeg_handle |

Input |

Host |

The image state handle. |

const unsigned char *const *data |

Input |

Host |

Pointer to the first element of the array of input data. The size of the array is assumed to be the batch_size provided to nvjpegDecodeBatchedInitialize(). |

const size_t *lengths |

Input |

Host |

Pointer to the first element of the array of input sizes. The size of the array is assumed to be the batch_size provided to nvjpegDecodeBatchedInitialize(). |

nvjpegImage_t *destinations |

Input/Output |

Host/Device |

Pointer to the first element of the array of output descriptors. The size of the array is assumed to be the batch_size provided to nvjpegDecodeBatchedInitialize(). |

cudaStream_t stream |

Input |

Host |

The CUDA stream where all the GPU work will be submitted. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.3.4. nvjpegDecodeBatchedEx()

Decodes a batch of images with ROI, and writes them to the buffers described in the destination parameter in the format provided to the nvjpegDecodeBatchedInitialize() function. This function is asynchronous with respect to the host. All GPU tasks for this function will be submitted to the provided stream.

Signature:

nvjpegStatus_t nvjpegDecodeBatchedEx(

nvjpegHandle_t handle,

nvjpegJpegState_t jpeg_handle,

const unsigned char *const *data,

const size_t *lengths,

nvjpegImage_t *destinations,

nvjpegDecodeParams_t *decode_params,

cudaStream_t stream);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

nvJPEG library handle. |

nvjpegJpegState_t jpeg_handle |

Input |

Host |

The image state handle. |

const unsigned char *const *data |

Input |

Host |

Pointer to the first element of the array of input data. The size of the array is assumed to be the batch_size provided to nvjpegDecodeBatchedInitialize(). |

const size_t *lengths |

Input |

Host |

Pointer to the first element of array of input sizes. |

nvjpegImage_t *destinations |

Input/Output |

Host/Device |

Pointer to the first element of the array of output descriptors. The size of the array is assumed to be the batch_size provided to nvjpegDecodeBatchedInitialize(). |

nvjpegDecodeParams_t *decode_params |

Input |

Host |

ROI decode parameters. |

cudaStream_t stream |

Input |

Host |

The CUDA stream where all the GPU work will be submitted. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.3.5. nvjpegDecodeBatchedSupported()

This API helps determine whether an image can be decoded by nvjpegDecodeBatched(). The user can parse the bitstream header using nvjpegJpegStreamParseHeader() and then call this API to determine whether the image can be decoded.

Signature:

nvjpegStatus_t nvjpegDecodeBatchedSupported(

nvjpegHandle_t handle,

nvjpegJpegStream_t jpeg_stream,

int* is_supported);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

nvJPEG library handle. |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream metadata. |

int* is_supported |

Output |

Host |

Return value of 0 indicates that the bitstream can be decoded by nvjpegDecodeBatched(); a nonzero value indicates that it is not supported. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.3.6. nvjpegDecodeBatchedSupportedEx()

This API helps determine whether an image can be decoded by nvjpegDecodeBatched(). The user can parse the bitstream header using nvjpegJpegStreamParseHeader(), set the ROI in the decode parameters, and then call this API to determine whether the image can be decoded.

Signature:

nvjpegStatus_t nvjpegDecodeBatchedSupportedEx(

nvjpegHandle_t handle,

nvjpegJpegStream_t jpeg_stream,

nvjpegDecodeParams_t decode_params,

int* is_supported);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

nvJPEG library handle. |

nvjpegJpegStream_t jpeg_stream |

Input |

Host |

Bitstream metadata. |

nvjpegDecodeParams_t decode_params |

Input |

Host |

ROI decode parameters. |

int* is_supported |

Output |

Host |

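Return value of 0 indicates that the bitstream can be decoded by nvjpegDecodeBatched() with the given decode parameters; a nonzero value indicates that it is not supported. |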

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.

2.3.3.7. nvjpegDecodeBatchedPreAllocate()

This is an experimental API that can be used with nvjpegDecodeBatched(). When decoding images with varying sizes and chroma subsampling, performance is limited by the repeated CUDA calls the library makes to free and allocate device memory. This API attempts to avoid this problem by allocating device memory before the actual decoding. Users can call this API with values that are unlikely to be exceeded when nvjpegDecodeBatched() is called.

Note


This functionality is available only when the nvjpegHandle_t is instantiated using NVJPEG_BACKEND_HARDWARE. It is currently a no-op for other backends.

This API only provides a hint for initial allocation. If the image dimensions at the time of decode exceed what was provided, then the library will resize the device buffers.

If the images being decoded have different chroma subsamplings, then the chroma_subsampling field should be set to NVJPEG_CSS_444 to ensure that the device memory can be reused.

Signature:

nvjpegStatus_t nvjpegDecodeBatchedPreAllocate(

nvjpegHandle_t handle,

nvjpegJpegState_t jpeg_handle,

int batch_size,

int width,

int height,

nvjpegChromaSubsampling_t chroma_subsampling,

nvjpegOutputFormat_t output_format);

Parameters:

Parameter |

Input / Output |

Memory |

Description |

nvjpegHandle_t handle |

Input |

Host |

The library handle. |

nvjpegJpegState_t jpeg_handle |

Input |

Host |

The image state handle. |

int batch_size |

Input |

Host |

Batch size. |

int width |

Input |

Host |

Maximum width of image that will be decoded. |

int height |

Input |

Host |

Maximum height of image that will be decoded. |

nvjpegChromaSubsampling_t chroma_subsampling |

Input |

Host |

Chroma-subsampling of the images. |

nvjpegOutputFormat_t output_format |

Input |

Host |

Format in which the decoded output will be saved. |

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.
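One way to choose the width and height hints is to take the maximum of each dimension over the images expected in a batch, for example from sizes gathered earlier with nvjpegGetImageInfo(). The helper below is a hypothetical sketch; taking the two maxima independently guarantees that every image fits within max_width by max_height.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { int max_width, max_height; } prealloc_hint;

/* Hypothetical helper: largest width and largest height over `count` images,
 * suitable as the width/height hint for nvjpegDecodeBatchedPreAllocate(). */
static prealloc_hint compute_prealloc_hint(const int *widths, const int *heights,
                                           size_t count)
{
    prealloc_hint hint = {0, 0};
    for (size_t i = 0; i < count; ++i) {
        if (widths[i] > hint.max_width)   hint.max_width = widths[i];
        if (heights[i] > hint.max_height) hint.max_height = heights[i];
    }
    return hint;
}
```

The resulting values, together with NVJPEG_CSS_444 when the subsampling varies across images (as recommended in the note for this function), would then be passed to nvjpegDecodeBatchedPreAllocate().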

2.3.3.8. nvjpegDecodeBatchedParseJpegTables()

Use this function with the batched decode APIs when decoding JPEG bitstreams from a TIFF file. It parses the JPEG tables bitstream to extract the JPEG tables; the resulting external Huffman and quantization tables are applied to all the JPEG bitstreams in the batch.

Signature:

nvjpegStatus_t nvjpegDecodeBatchedParseJpegTables(
    nvjpegHandle_t handle,
    nvjpegJpegState_t jpeg_handle,
    const unsigned char *data,
    const size_t length);

Parameters:

Parameter | Input / Output | Memory | Description
handle | Input | Host | The library handle.
jpeg_handle | Input/Output | Host/Device | The image state handle.
data | Input | Host | Pointer to the JPEG tables bitstream. Can be set to NULL to reset the JPEG tables.
length | Input | Host | JPEG tables bitstream size.

Returns:

nvjpegStatus_t — An error code as specified in nvJPEG API Return Codes.
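In a TIFF file the JPEG tables bitstream is typically the abbreviated table-specification stream stored in the JPEGTables tag: an SOI marker, DQT and/or DHT segments, and an EOI marker. The sketch below, a hypothetical helper scan_jpeg_tables() that is not part of nvJPEG, walks those marker segments so a caller can sanity-check the buffer before passing it as data and length. It assumes there are no fill bytes between segments and does not validate the segment contents.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Walks the marker segments of a JPEG tables bitstream.
// Returns true when the buffer is SOI, zero or more length-prefixed
// segments, then EOI. The marker codes seen are stored in `markers`.
bool scan_jpeg_tables(const unsigned char* data, size_t length,
                      std::vector<uint8_t>& markers) {
    assert(data != nullptr || length == 0);
    markers.clear();
    // Must start with SOI (0xFFD8).
    if (length < 4 || data[0] != 0xFF || data[1] != 0xD8) {
        return false;
    }
    markers.push_back(0xD8);
    size_t pos = 2;
    while (pos + 2 <= length) {
        if (data[pos] != 0xFF) {
            return false;  // expected a marker prefix
        }
        uint8_t marker = data[pos + 1];
        markers.push_back(marker);
        if (marker == 0xD9) {
            return true;  // EOI terminates the tables stream
        }
        if (pos + 4 > length) {
            return false;
        }
        // Segment length is big-endian and counts its own two bytes.
        size_t seg_len = (static_cast<size_t>(data[pos + 2]) << 8) | data[pos + 3];
        if (seg_len < 2 || pos + 2 + seg_len > length) {
            return false;
        }
        pos += 2 + seg_len;  // skip marker and segment payload
    }
    return false;  // ran out of data before EOI
}
```

A buffer that passes this check contains DQT (0xDB) and/or DHT (0xC4) segments framed by SOI and EOI, which is the kind of input this function is meant to parse.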

2.3.4. Decode API—Decoupled Decoding

This set of decoding APIs takes bitstream handles, decode parameter handles, and pinned and device buffer handles as input, thus decoupling JPEG bitstream parsing, buffer management, and decoder parameter setup from the decode process itself.

Currently only multiphase decoding is available. Multiphase decoupled single-image decoding consists of three phases:

Host (nvjpegDecodeJpegHost())

Mixed (nvjpegDecodeJpegTransferToDevice())

Device (nvjpegDecodeJpegDevice())

Each phase is carried out according to its own semantics. Phases of different images can run simultaneously when each image uses its own decoder state handle, and some helper objects can be shared between them. See the individual phase descriptions for details on the semantics.

Below are two examples of using the decoupled API.

The following snippet shows how to use the API to run the host stage of the processing ahead of time: first do all of the host work for every image, and then submit the rest of the decoding work to the device.

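#define BATCH_SIZE 2

nvjpegHandle_t nvjpeg_handle;
nvjpegJpegState_t nvjpeg_decoder_state[BATCH_SIZE];
nvjpegBufferPinned_t nvjpeg_pinned_buffer[BATCH_SIZE];
nvjpegBufferDevice_t nvjpeg_device_buffer;
nvjpegJpegStream_t nvjpeg_jpeg_stream[BATCH_SIZE];
nvjpegDecodeParams_t nvjpeg_decode_params;
nvjpegJpegDecoder_t nvjpeg_decoder;
nvjpegBackend_t impl = NVJPEG_BACKEND_DEFAULT;

unsigned char* bitstream[BATCH_SIZE]; // pointers to the JPEG bitstreams
size_t length[BATCH_SIZE];            // bitstream sizes
// populate bitstream and length correctly for this code to work
nvjpegImage_t output_images[BATCH_SIZE];
// allocate device memory for output images, for this snippet to work

// create the library handle, decoder, decode parameters and buffers
// (NULL allocators select the default pinned/device allocators)
nvjpegCreateSimple(&nvjpeg_handle);
nvjpegDecoderCreate(nvjpeg_handle, impl, &nvjpeg_decoder);
nvjpegDecodeParamsCreate(nvjpeg_handle, &nvjpeg_decode_params);
nvjpegBufferDeviceCreate(nvjpeg_handle, NULL, &nvjpeg_device_buffer);
for (int i = 0; i < BATCH_SIZE; i++)
{
    nvjpegDecoderStateCreate(nvjpeg_handle, nvjpeg_decoder, &nvjpeg_decoder_state[i]);
    nvjpegBufferPinnedCreate(nvjpeg_handle, NULL, &nvjpeg_pinned_buffer[i]);
    nvjpegJpegStreamCreate(nvjpeg_handle, &nvjpeg_jpeg_stream[i]);
}

// all the images in the batch will be decoded as RGBI
nvjpegDecodeParamsSetOutputFormat(nvjpeg_decode_params, NVJPEG_OUTPUT_RGBI);

// host phase for both bitstreams, each with its own state and pinned buffer
for (int i = 0; i < BATCH_SIZE; i++)
{
    nvjpegJpegStreamParse(nvjpeg_handle, bitstream[i], length[i], 0, 0, nvjpeg_jpeg_stream[i]);
    nvjpegStateAttachPinnedBuffer(nvjpeg_decoder_state[i], nvjpeg_pinned_buffer[i]);
    nvjpegDecodeJpegHost(nvjpeg_handle, nvjpeg_decoder, nvjpeg_decoder_state[i], nvjpeg_decode_params, nvjpeg_jpeg_stream[i]);
}

// mixed and device phases
for (int i = 0; i < BATCH_SIZE; i++)
{
    // the same device buffer is used for decoding both bitstreams
    nvjpegStateAttachDeviceBuffer(nvjpeg_decoder_state[i], nvjpeg_device_buffer);
    // CUDA stream set to NULL (default stream)
    nvjpegDecodeJpegTransferToDevice(nvjpeg_handle, nvjpeg_decoder, nvjpeg_decoder_state[i], nvjpeg_jpeg_stream[i], NULL);
    // CUDA stream set to NULL (default stream)
    nvjpegDecodeJpegDevice(nvjpeg_handle, nvjpeg_decoder, nvjpeg_decoder_state[i], &output_images[i], NULL);
    // wait before the shared device buffer is reused for the next image
    cudaDeviceSynchronize();
}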

The following snippet shows how pinned and device buffers can be shared across two instances of the nvJPEG decoder handle (nvjpegJpegDecoder_t), with the decoder chosen for each image based on its size.

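#define BATCH_SIZE 4

nvjpegHandle_t nvjpeg_handle;
nvjpegJpegDecoder_t nvjpeg_decoder_impl1;
nvjpegJpegDecoder_t nvjpeg_decoder_impl2;
nvjpegJpegState_t nvjpeg_decoder_state_impl1;
nvjpegJpegState_t nvjpeg_decoder_state_impl2;
nvjpegBufferPinned_t nvjpeg_pinned_buffer;
nvjpegBufferDevice_t nvjpeg_device_buffer;
nvjpegJpegStream_t nvjpeg_jpeg_stream;
nvjpegDecodeParams_t nvjpeg_decode_params;

unsigned char* bitstream[BATCH_SIZE]; // pointers to the JPEG bitstreams
size_t length[BATCH_SIZE];            // bitstream sizes
// populate bitstream and length correctly for this code to work
nvjpegImage_t output_images[BATCH_SIZE];
// allocate device memory for output images, for this snippet to work

// create the handles; the two backends below are only an example choice
// (CPU Huffman decoding for small images, GPU-assisted Huffman decoding for large ones)
nvjpegCreateSimple(&nvjpeg_handle);
nvjpegDecoderCreate(nvjpeg_handle, NVJPEG_BACKEND_HYBRID, &nvjpeg_decoder_impl1);
nvjpegDecoderCreate(nvjpeg_handle, NVJPEG_BACKEND_GPU_HYBRID, &nvjpeg_decoder_impl2);
nvjpegDecoderStateCreate(nvjpeg_handle, nvjpeg_decoder_impl1, &nvjpeg_decoder_state_impl1);
nvjpegDecoderStateCreate(nvjpeg_handle, nvjpeg_decoder_impl2, &nvjpeg_decoder_state_impl2);
nvjpegBufferPinnedCreate(nvjpeg_handle, NULL, &nvjpeg_pinned_buffer);
nvjpegBufferDeviceCreate(nvjpeg_handle, NULL, &nvjpeg_device_buffer);
nvjpegJpegStreamCreate(nvjpeg_handle, &nvjpeg_jpeg_stream);
nvjpegDecodeParamsCreate(nvjpeg_handle, &nvjpeg_decode_params);

// both decoder states share one pinned buffer and one device buffer
nvjpegStateAttachPinnedBuffer(nvjpeg_decoder_state_impl1, nvjpeg_pinned_buffer);
nvjpegStateAttachPinnedBuffer(nvjpeg_decoder_state_impl2, nvjpeg_pinned_buffer);
nvjpegStateAttachDeviceBuffer(nvjpeg_decoder_state_impl1, nvjpeg_device_buffer);
nvjpegStateAttachDeviceBuffer(nvjpeg_decoder_state_impl2, nvjpeg_device_buffer);

// all the images in the batch will be decoded as RGBI
nvjpegDecodeParamsSetOutputFormat(nvjpeg_decode_params, NVJPEG_OUTPUT_RGBI);

for (int i = 0; i < BATCH_SIZE; i++)
{
    nvjpegJpegStreamParse(nvjpeg_handle, bitstream[i], length[i], 0, 0, nvjpeg_jpeg_stream);
    // decide which implementation to use, based on image size
    unsigned int frame_width;
    unsigned int frame_height;
    nvjpegJpegStreamGetFrameDimensions(nvjpeg_jpeg_stream, &frame_width, &frame_height);
    bool large_image = (frame_width * frame_height > 1024 * 768);
    nvjpegJpegDecoder_t decoder = large_image ? nvjpeg_decoder_impl2 : nvjpeg_decoder_impl1;
    nvjpegJpegState_t decoder_state = large_image ? nvjpeg_decoder_state_impl2 : nvjpeg_decoder_state_impl1;
    nvjpegDecodeJpegHost(nvjpeg_handle, decoder, decoder_state, nvjpeg_decode_params, nvjpeg_jpeg_stream);
    // CUDA stream set to NULL (default stream)
    nvjpegDecodeJpegTransferToDevice(nvjpeg_handle, decoder, decoder_state, nvjpeg_jpeg_stream, NULL);
    // CUDA stream set to NULL (default stream)
    nvjpegDecodeJpegDevice(nvjpeg_handle, decoder, decoder_state, &output_images[i], NULL);
    // the buffers are shared, so wait before the next image reuses them
    cudaDeviceSynchronize();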