TAO Toolkit Integration with DeepStream

NVIDIA TAO toolkit is a simple, easy-to-use training toolkit that requires minimal coding to create vision AI models from the user’s own data. With TAO toolkit, users can apply transfer learning to NVIDIA pre-trained models to create their own models. Users can add new classes to an existing pre-trained model or re-train the model to adapt it to their use case, and they can use the model pruning capability to reduce the overall size of the model.

Pre-trained models

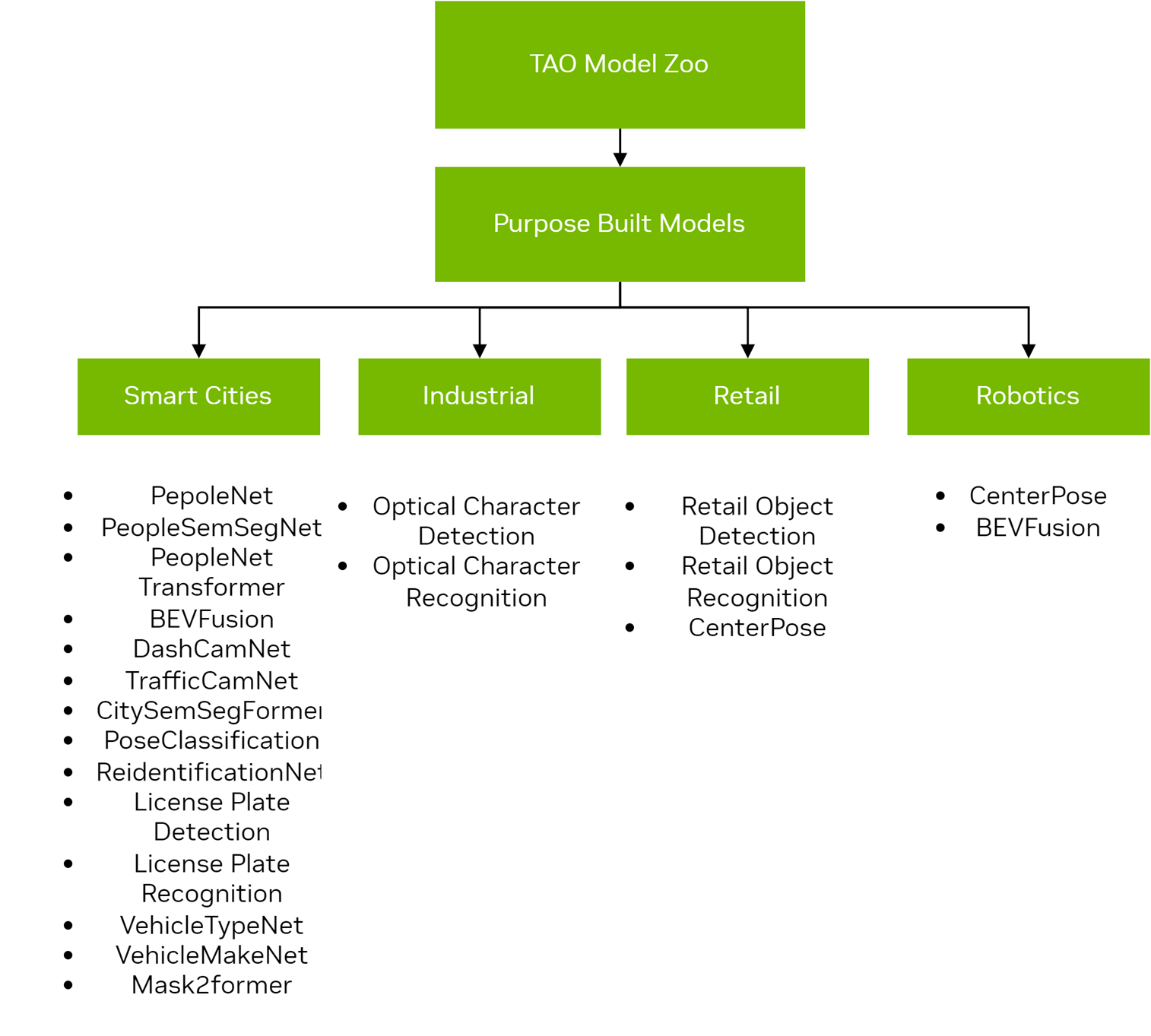

There are two types of pre-trained models that users can start with: purpose-built pre-trained models and meta-architecture vision models. Purpose-built pre-trained models are highly accurate models that are trained on millions of objects for a specific task. The pre-trained weights for meta-architecture vision models merely act as a starting point to build more complex models. These weights are trained on the Open Images dataset, and they provide a much better starting point for training than training from scratch with random weights. With meta-architecture vision models, users can choose from 100+ permutations of model architecture and backbone. See the accompanying illustration.

The purpose-built models are highly accurate models trained on thousands of data inputs for a specific task. These domain-focused models can either be used directly for inference or be fine-tuned with TAO on your own dataset through transfer learning. More information about each of these models is available in the Computer Vision Model Zoo chapter of the TAO toolkit documentation or in the individual model cards.

TAO toolkit pre-trained models: use cases

| Model Name | Description | NGC Instance | TAO Finetuning |
|---|---|---|---|
| TrafficCamNet | 4-class object detection network to detect cars in an image. | | Yes |
| PeopleNet | 3-class object detection network to detect people in an image. | | Yes |
| DashCamNet | 4-class object detection network to detect cars in an image. | | Yes |
| VehicleMakeNet | ResNet18 model to classify a car crop into 1 of 20 car brands. | | Yes |
| VehicleTypeNet | ResNet18 model to classify a car crop into 1 of 6 car types. | | Yes |
| License Plate Detection | Object detection network to detect license plates in an image of a car. | | Yes |
| License Plate Recognition | Model to recognize characters from the image crop of a license plate. | | Yes |
| PeopleSemSegNet | Semantic segmentation of persons in an image. | | Yes |
| CitySemSegFormer | Semantic segmentation of urban city scenes in an image. | | Yes |
| Optical Character Detection | Network to detect characters in an image. | | Yes |
| Optical Character Recognition | Model to recognize characters in the regions detected by a preceding OCDNet model. | | Yes |
| Retail Object Detection | DINO (DETR with Improved DeNoising Anchor Boxes) based object detection network to detect retail objects on a checkout counter. | | Yes |
| Retail Object Recognition | Embedding generator model to recognize objects on a checkout counter. | | Yes |
| ReIdentificationNet | Re-identification network that generates embeddings for identifying persons across different scenes. | | Yes |
| Pose Classification | Pose classification network to classify the poses of people from their skeletons. | | Yes |
| PeopleNet Transformer | 3-class object detection network to detect people in an image. | | Yes |
| BodyPose3DNet | 3D human pose estimation network to predict 34 3D keypoints of a person in an image. | | Yes |

Most models trained with TAO toolkit are natively integrated for inference with DeepStream. Natively integrated models are supported by the reference deepstream-app. For models that are not natively integrated in the SDK, a reference application is available in the deepstream_tao_apps GitHub repo. For the models integrated into deepstream-app, sample config files are provided for each network in the deepstream_tao_apps_configs folder. The table below lists the supported models and the config files for each one.
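Each per-model inference config (for example config_infer_primary_trafficcamnet.txt) is a key-value file with a [property] group read by the Gst-nvinfer plugin. As a rough illustration of what such a file holds, the Python sketch below reads that group with the standard-library configparser module, which copes with simple files of this INI-like shape. The sample text is a hypothetical excerpt: the key names (tlt-encoded-model, onnx-file, labelfile-path, network-mode, num-detected-classes) are Gst-nvinfer properties, but the values and the summarize_nvinfer_config helper are made up for illustration and are not taken from the shipped config files.

```python
import configparser

# Gst-nvinfer "network-mode" values: 0 = FP32, 1 = INT8, 2 = FP16.
NETWORK_MODES = {0: "FP32", 1: "INT8", 2: "FP16"}

# Hypothetical excerpt in the style of config_infer_primary_trafficcamnet.txt.
# Key names are Gst-nvinfer properties; the values (including the model
# file name) are placeholders, not the contents of the shipped file.
SAMPLE_CONFIG = """
[property]
gpu-id=0
net-scale-factor=0.0039215697906911373
tlt-encoded-model=trafficcamnet_model.etlt
labelfile-path=labels_trafficnet.txt
network-mode=1
num-detected-classes=4

[class-attrs-all]
pre-cluster-threshold=0.2
"""


def summarize_nvinfer_config(text: str) -> dict:
    """Extract the model-related keys from the [property] group (hypothetical helper)."""
    # interpolation=None so that values containing '%' are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    props = parser["property"]
    mode = props.getint("network-mode", fallback=None)
    return {
        # Some configs point at an encoded .etlt model, others at an ONNX file.
        "model": props.get("tlt-encoded-model") or props.get("onnx-file"),
        "labels": props.get("labelfile-path"),
        "num_classes": props.getint("num-detected-classes", fallback=None),
        # Missing or unrecognized modes are reported as "unspecified".
        "precision": NETWORK_MODES.get(mode, "unspecified"),
    }


if __name__ == "__main__":
    print(summarize_nvinfer_config(SAMPLE_CONFIG))
```

Real sample configs may contain constructs that configparser does not accept, so this is only meant for quick inspection, not as a replacement for how Gst-nvinfer parses the file.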

Note

Refer to the README at /opt/nvidia/deepstream/deepstream/samples/configs/tao_pretrained_models/README.md to obtain the TAO toolkit config files and models mentioned in the following table.

The TAO toolkit pre-trained models in DeepStream table shows deployment information for the purpose-built pre-trained models.

TAO toolkit pre-trained models in DeepStream

| Pre-trained model | DeepStream reference app | Config files | DLA supported |
|---|---|---|---|
| TrafficCamNet | deepstream-app | deepstream_app_source1_trafficcamnet.txt, config_infer_primary_trafficcamnet.txt, labels_trafficnet.txt | Yes |
| PeopleNet | deepstream-app | deepstream_app_source1_peoplenet.txt, config_infer_primary_peoplenet.txt, labels_peoplenet.txt | Yes |
| DashCamNet | deepstream-app | deepstream_app_source1_dashcamnet_vehiclemakenet_vehicletypenet.txt, config_infer_primary_dashcamnet.txt, labels_dashcamnet.txt | Yes |
| VehicleMakeNet | deepstream-app | deepstream_app_source1_dashcamnet_vehiclemakenet_vehicletypenet.txt, config_infer_secondary_vehiclemakenet.txt, labels_vehiclemakenet.txt | Yes |
| VehicleTypeNet | deepstream-app | deepstream_app_source1_dashcamnet_vehiclemakenet_vehicletypenet.txt, config_infer_secondary_vehicletypenet.txt, labels_vehicletypenet.txt | Yes |
| CitySemSegFormer | | seg_app_unet.yml, pgie_citysemsegformer_tao_config.txt | Yes |
| | | | No |
| | | | No |
| | | | No |
| | | | No |
| | | | No |
| | | | No |
| | | | No |
| | | | No |
| | | | No |
| | | | No |
| PeopleNet Transformer | | det_app_frcnn.yml, pgie_peoplenet_transformer_tao_config.yml | No |
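The DLA supported column indicates which models can run on the Deep Learning Accelerator present on some Jetson devices. In Gst-nvinfer, DLA use is controlled by the enable-dla and use-dla-core properties in the [property] group. The Python sketch below, a hypothetical helper named enable_dla, switches those on in a copy of a config. It assumes the model is one marked Yes in the table, and it refuses a config that explicitly requests FP32 (network-mode=0), since DLA runs FP16 or INT8 networks. Note that configparser discards comments when it rewrites a file, so treat the output as a starting point rather than a drop-in replacement for the sample config.

```python
import configparser
import io


def enable_dla(config_text: str, dla_core: int = 0) -> str:
    """Return a copy of a Gst-nvinfer config with DLA inference switched on.

    Hypothetical helper; comments in the input are not preserved.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep key spelling exactly as written
    parser.read_string(config_text)
    props = parser["property"]
    # DLA executes FP16 or INT8 networks; reject an explicit FP32 setting.
    if props.get("network-mode") == "0":
        raise ValueError("set network-mode to 1 (INT8) or 2 (FP16) before enabling DLA")
    props["enable-dla"] = "1"
    props["use-dla-core"] = str(dla_core)
    out = io.StringIO()
    # Write "key=value" without spaces around '=', matching the sample configs.
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


if __name__ == "__main__":
    print(enable_dla("[property]\nnetwork-mode=2\n", dla_core=0))
```

Jetson modules with more than one DLA engine expose them as separate cores, which is why the core index is a parameter rather than fixed.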

The TAO toolkit models can be deployed with DeepStream sample applications.

Learn more about running the TAO models in the deepstream_tao_apps GitHub repo.

For more information about TAO and how to deploy TAO models, refer to the Integrating TAO Models into DeepStream chapter of the TAO toolkit user guide.

For more information about deploying architecture-specific models with DeepStream, refer to the deepstream_tao_apps GitHub repo.
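For the models that run through the reference deepstream-app, launching a sample amounts to passing the top-level config file to deepstream-app with its -c option. The Python sketch below maps model names from the deployment table to their top-level configs and builds that command. The dictionary, the helper names, and the config_dir parameter (the directory holding the sample configs, for example one prepared as described in the README mentioned earlier) are illustrative assumptions. Models without a deepstream-app config raise an error pointing to deepstream_tao_apps instead.

```python
import shlex
import subprocess

# Top-level deepstream-app configs from the "TAO toolkit pre-trained models
# in DeepStream" table. The two secondary classifiers run in the DashCamNet
# pipeline, so they share its top-level config.
DEEPSTREAM_APP_CONFIGS = {
    "TrafficCamNet": "deepstream_app_source1_trafficcamnet.txt",
    "PeopleNet": "deepstream_app_source1_peoplenet.txt",
    "DashCamNet": "deepstream_app_source1_dashcamnet_vehiclemakenet_vehicletypenet.txt",
    "VehicleMakeNet": "deepstream_app_source1_dashcamnet_vehiclemakenet_vehicletypenet.txt",
    "VehicleTypeNet": "deepstream_app_source1_dashcamnet_vehiclemakenet_vehicletypenet.txt",
}


def deepstream_app_command(model: str) -> list[str]:
    """Build the deepstream-app command line for a natively integrated model."""
    config = DEEPSTREAM_APP_CONFIGS.get(model)
    if config is None:
        raise ValueError(
            f"{model} has no deepstream-app config here; "
            "use the reference apps in deepstream_tao_apps instead"
        )
    return ["deepstream-app", "-c", config]


def run(model: str, config_dir: str = ".", dry_run: bool = True) -> None:
    """Print the command (dry run) or execute it from config_dir."""
    cmd = deepstream_app_command(model)
    if dry_run:
        print(shlex.join(cmd))
    else:
        # Run from the directory that holds the configs so the bare
        # config file name in the command resolves.
        subprocess.run(cmd, check=True, cwd=config_dir)


if __name__ == "__main__":
    run("PeopleNet")
```

Keeping dry_run as the default makes it easy to check the command on a machine without DeepStream installed before running it on the target.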