Gst-nvinfer

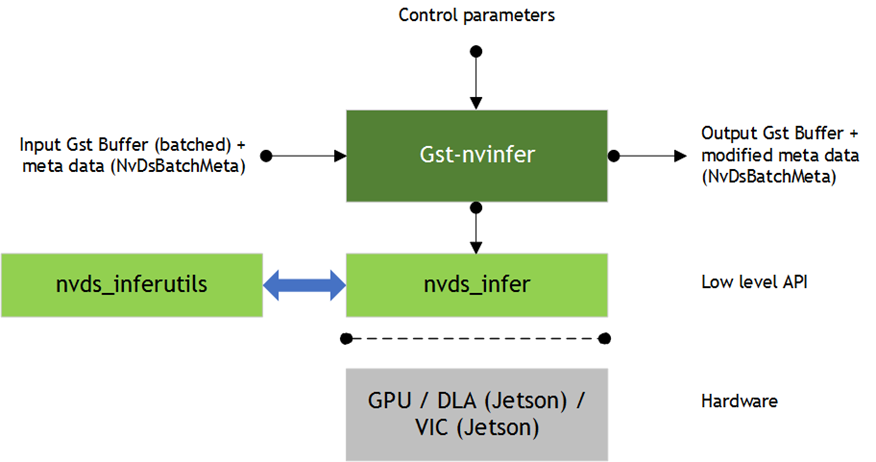

The Gst-nvinfer plugin does inferencing on input data using NVIDIA® TensorRT™.

The plugin accepts batched NV12/RGBA buffers from upstream. The NvDsBatchMeta structure must already be attached to the Gst Buffers. The low-level library (libnvds_infer) operates on any of unsigned 8-bit (UINT8) RGB, BGR, or GRAY data with dimensions of Network Height and Network Width. The Gst-nvinfer plugin performs transforms (format conversion and scaling) on the input frame based on network requirements, and passes the transformed data to the low-level library. The low-level library preprocesses the transformed frames (performs normalization and mean subtraction) and produces final float RGB/BGR/GRAY planar data, which is passed to the TensorRT engine for inferencing. The output type generated by the low-level library depends on the network type. The pre-processing function is:

$$y = \text{net-scale-factor} \times (x - \text{mean})$$

Where:

x is the input pixel value. It is an unsigned 8-bit integer (uint8) with range [0,255].

mean is the corresponding mean value, read either from the mean file or as offsets[c], where c is the channel to which the input pixel belongs, and offsets is the array specified in the configuration file. It is a float.

net-scale-factor is the pixel scaling factor specified in the configuration file. It is a float.

y is the corresponding output pixel value. It is a float.
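As a worked illustration of the pre-processing function, the following Python sketch applies y = net-scale-factor × (x − offsets[c]) to interleaved 8-bit pixels and returns planar float output, one list per channel. It assumes the per-channel means come from offsets rather than a mean file, and the function name normalize_planar is hypothetical; it models the arithmetic only, not the libnvds_infer implementation.

```python
from typing import List, Sequence


def normalize_planar(pixels: Sequence[Sequence[int]],
                     net_scale_factor: float,
                     offsets: Sequence[float]) -> List[List[float]]:
    """Apply y = net_scale_factor * (x - offsets[c]) and return planar data.

    `pixels` is a sequence of interleaved pixels, e.g. [(r, g, b), ...].
    The result holds one list per channel (planar layout).
    """
    num_channels = len(offsets)
    planes: List[List[float]] = [[] for _ in range(num_channels)]
    for px in pixels:
        if len(px) != num_channels:
            raise ValueError("offsets length must equal the number of color components")
        for c, x in enumerate(px):
            if not 0 <= x <= 255:
                raise ValueError("input pixel values are 8-bit, range [0, 255]")
            planes[c].append(net_scale_factor * (x - offsets[c]))
    return planes


# Two RGB pixels, scale factor 0.5, per-channel mean offsets 10/20/30.
print(normalize_planar([(20, 40, 60), (10, 20, 30)], 0.5, [10.0, 20.0, 30.0]))
# [[5.0, 0.0], [10.0, 0.0], [15.0, 0.0]]
```

Because the scale factor multiplies the difference, the effective mean subtracted after scaling is net-scale-factor × offsets[c], which matches the note on the offsets key in the configuration table.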

Gst-nvinfer currently works on the following types of networks:

Multi-class object detection

Multi-label classification

Segmentation (semantic)

Instance Segmentation

The Gst-nvinfer plugin can work in three modes:

Primary mode: Operates on full frames

Secondary mode: Operates on objects added in the meta by upstream components

Preprocessed Tensor Input mode: Operates on tensors attached by upstream components

When operating in preprocessed tensor input mode, the pre-processing inside Gst-nvinfer is completely skipped. The plugin looks for GstNvDsPreProcessBatchMeta attached to the input buffer and passes the tensor to the TensorRT inference function without any modification. This mode currently supports processing on full frames and ROIs. The GstNvDsPreProcessBatchMeta is attached by the Gst-nvdspreprocess plugin.

When the plugin is operating as a secondary classifier along with the tracker, it tries to improve performance by avoiding re-inferencing on the same objects in every frame. It does this by caching the classification output in a map with the object’s unique ID as the key. An object is inferred on only when it is first seen in a frame (based on its object ID) or when its size (bounding box area) increases by 20% or more. This optimization is possible only when the tracker is added as an upstream element.

Detailed documentation of the TensorRT interface is available at: https://docs.nvidia.com/deeplearning/sdk/tensorrt-developer-guide/index.html. The plugin supports the IPlugin interface for custom layers; refer to section IPlugin Interface for details. The plugin also supports an interface for custom functions that parse the outputs of object detectors and that initialize non-image input layers when there is more than one input layer. Refer to sources/includes/nvdsinfer_custom_impl.h for the custom method implementations for custom models.
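The caching rule used in secondary-classifier mode with an upstream tracker can be sketched in a few lines of Python: infer when an object ID is first seen, or when its bounding-box area has grown by 20% or more, and otherwise reuse the cached label. The class and method names are hypothetical, the classify callable stands in for the actual inference call, and comparing against the area recorded at the last inference is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple

AREA_GROWTH_FACTOR = 1.2  # re-infer when the area grows by 20% or more


@dataclass
class ClassifierCache:
    """Hypothetical per-object classification cache keyed by tracker ID."""
    classify: Callable[[int], str]  # stand-in for running the classifier
    entries: Dict[int, Tuple[float, str]] = field(default_factory=dict)

    def get_label(self, object_id: int, width: float, height: float) -> str:
        area = width * height
        cached = self.entries.get(object_id)
        if cached is not None:
            last_area, label = cached
            if area < last_area * AREA_GROWTH_FACTOR:
                return label  # seen before and not grown enough: reuse result
        # First sighting of this ID, or the box grew by >= 20%: infer again.
        label = self.classify(object_id)
        self.entries[object_id] = (area, label)
        return label
```

Keying on the tracker ID is what makes this possible; without a tracker upstream there is no stable ID to look up, which is why the optimization requires the tracker.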

Downstream components receive a Gst Buffer with unmodified contents plus the metadata created from the inference output of the Gst-nvinfer plugin. The plugin can be used for cascaded inferencing. That is, it can perform primary inferencing directly on input data, then perform secondary inferencing on the results of primary inferencing, and so on. See the sample application deepstream-test2 for more details.

Inputs and Outputs

This section summarizes the inputs, outputs, and communication facilities of the Gst-nvinfer plugin.

Inputs

Gst Buffer

NvDsBatchMeta (attaching NvDsFrameMeta)

ONNX

TAO Encoded Model and Key

Offline: Supports engine files generated by TAO Toolkit SDK Model converters

Layers: Supports all layers supported by TensorRT, see: https://docs.nvidia.com/deeplearning/sdk/tensorrt-developer-guide/index.html.

Control parameters

Gst-nvinfer gets control parameters from a configuration file. You can specify this by setting the property config-file-path. For details, see Gst-nvinfer File Configuration Specifications. Other control parameters that can be set through GObject properties are:

Batch size

Inference interval

Attach inference tensor outputs as buffer metadata

Attach instance mask output in object metadata

The parameters set through the GObject properties override the parameters in the Gst-nvinfer configuration file.

Outputs

Gst Buffer

Depending on network type and configured parameters, one or more of:

NvDsObjectMeta

NvDsClassifierMeta

NvDsInferSegmentationMeta

NvDsInferTensorMeta

Features

The following table summarizes the features of the plugin.

Gst-nvinfer plugin features

| Feature | Description | Release |
|---|---|---|
| Explicit Full Dimension Network Support | Refer to https://docs.nvidia.com/deeplearning/sdk/tensorrt-developer-guide/index.html#work_dynamic_shapes for more details. | DS 5.0 |
| Non-maximum Suppression (NMS) | New bounding box clustering algorithm. | DS 5.0 |
| On-the-fly model update (Engine file only) | Update the model-engine-file on-the-fly in a running pipeline. | DS 5.0 |
| Configurable frame scaling params | Configurable options to select the compute hardware and the filter to use while scaling frame/object crops to network resolution | DS 5.0 |
| TAO toolkit encoded model support | | DS 4.0 |
| Gray input model support | Support for models with single-channel gray input | DS 4.0 |
| Tensor output as meta | Raw tensor output is attached as metadata to Gst Buffers and flowed through the pipeline | DS 4.0 |
| Segmentation model | Supports segmentation model | DS 4.0 |
| Maintain input aspect ratio | Configurable support for maintaining aspect ratio when scaling input frame to network resolution | DS 4.0 |
| Custom CUDA engine creation interface | Interface for generating CUDA engines from TensorRT INetworkDefinition and IBuilder APIs instead of model files | DS 4.0 |
| ONNX Model support | | DS 3.0 |
| Multiple modes of operation | Support for cascaded inferencing | DS 2.0 |
| Asynchronous mode of operation for secondary inferencing | Infer asynchronously for secondary classifiers | DS 2.0 |
| Grouping using CV::Group rectangles | For detector bounding box clustering | DS 2.0 |
| Configurable batch-size processing | User can configure batch size for processing | DS 2.0 |
| No restriction on number of output blobs | Supports any number of output blobs | DS 3.0 |
| Configurable number of detected classes (detectors) | Supports configurable number of detected classes | DS 3.0 |
| Support for Classes: configurable (> 32) | Supports any number of classes | DS 3.0 |
| Application access to raw inference output | Application can access inference output buffers for user-specified layer | DS 3.0 |
| Support for single shot detector (SSD) | | DS 3.0 |
| Secondary GPU Inference Engines (GIEs) operate as detector on primary bounding box | Supports secondary inferencing as detector | DS 2.0 |
| Multiclass secondary support | Supports multiple classifier network outputs | DS 2.0 |
| Grouping using DBSCAN | For detector bounding box clustering | DS 3.0 |
| Loading an external lib containing IPlugin implementation for custom layers (IPluginCreator & IPluginFactory) | Supports loading (dlopen()) a library containing IPlugin implementation for custom layers | DS 3.0 |
| Multi GPU | Select the GPU on which to run inference | DS 2.0 |
| Detection width height configuration | Filter out detected objects based on min/max object size threshold | DS 2.0 |
| Allow user to register custom parser | Supports final output layer bounding box parsing for custom detector network | DS 2.0 |
| Bounding box filtering based on configurable object size | Supports secondary-mode inferencing only on objects that meet the min/max size threshold | DS 2.0 |
| Configurable operation interval | Interval for inferencing (number of batched buffers skipped) | DS 2.0 |
| Select top and bottom regions of interest (RoIs) | Removes detected objects in top and bottom areas | DS 2.0 |
| Operate on specific object type (Secondary mode) | Process only objects of defined classes for secondary inferencing | DS 2.0 |
| Configurable blob names for parsing bounding box (detector) | Supports configurable names for output blobs for detectors | DS 2.0 |
| Allow configuration file input | Supports configuration file as input (mandatory in DS 3.0) | DS 2.0 |
| Allow selection of class ID for operation | Supports secondary inferencing based on class ID | DS 2.0 |
| Support for Full Frame Inference: Primary as a classifier | Can also work as a classifier in primary mode | DS 2.0 |
| Supports FP16, FP32 and INT8 models | FP16 and INT8 are platform dependent | DS 2.0 |
| Supports TensorRT Engine file as input | | DS 2.0 |
| Inference input layer initialization | Initializing non-video input layers when there is more than one input layer | DS 3.0 |
| Support for FasterRCNN | | DS 3.0 |
| Support for Yolo detector (YoloV3/V3-tiny/V2/V2-tiny) | | DS 4.0 |
| Support for yolov3-spp detector | | DS 5.0 |
| Support Instance segmentation with MaskRCNN | Support for instance segmentation using MaskRCNN. It includes an output parser and attaches masks in object metadata. | DS 5.0 |
| Support for NHWC network input | | DS 6.0 |
| Added support for TAO ONNX model | | DS 6.0 |
| Support for input tensor meta | Runs inference on already-preprocessed raw tensors from input tensor meta (attached as user meta at batch level) and skips preprocessing in nvinfer. In this mode, the batch-size of nvinfer must be equal to the sum of ROIs set in the gst-nvdspreprocess plugin config file. | DS 6.0 |
| Support for clipping bounding boxes to ROI boundary | | DS 6.2 |

Gst-nvinfer File Configuration Specifications

The Gst-nvinfer configuration file uses a “Key File” format described in https://specifications.freedesktop.org/desktop-entry-spec/latest.

The [property] group configures the general behavior of the plugin. It is the only mandatory group.

The [class-attrs-all] group configures detection parameters for all classes.

The [class-attrs-<class-id>] group configures detection parameters for a class specified by <class-id>. For example, the [class-attrs-23] group configures detection parameters for class ID 23. This type of group has the same keys as [class-attrs-all].
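The group layout can be illustrated with Python's standard configparser, which reads a similar `[group]` / `key=value` syntax. This is only a sketch: configparser is not the Key File parser that Gst-nvinfer itself uses, and the helper class_attrs_for is hypothetical. It assumes that keys in a `[class-attrs-<class-id>]` group override the same keys from `[class-attrs-all]` for that class.

```python
import configparser
from typing import Dict

SAMPLE_CONFIG = """
[property]
gie-unique-id=1
network-type=0
num-detected-classes=4
operate-on-class-ids=1;2

[class-attrs-all]
pre-cluster-threshold=0.2
topk=20

[class-attrs-2]
pre-cluster-threshold=0.6
"""


def load_config(text: str) -> configparser.ConfigParser:
    # Only '=' separates keys from values, '%' is taken literally, and inline
    # comments stay disabled so semicolon-delimited arrays such as "1;2" survive.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.read_string(text)
    if not parser.has_section("property"):
        raise ValueError("[property] is the only mandatory group")
    return parser


def class_attrs_for(parser: configparser.ConfigParser, class_id: int) -> Dict[str, str]:
    """Merge [class-attrs-all] with [class-attrs-<class_id>]; the latter wins."""
    attrs: Dict[str, str] = {}
    if parser.has_section("class-attrs-all"):
        attrs.update(parser.items("class-attrs-all"))
    specific = f"class-attrs-{class_id}"
    if parser.has_section(specific):
        attrs.update(parser.items(specific))
    return attrs


cfg = load_config(SAMPLE_CONFIG)
print(cfg.get("property", "operate-on-class-ids"))  # 1;2
print(class_attrs_for(cfg, 2))  # {'pre-cluster-threshold': '0.6', 'topk': '20'}
```

Values stay strings here; typed parsing (integers, floats, semicolon-delimited arrays) is left to the caller.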

The following two tables respectively describe the keys supported for [property] groups and [class-attrs-…] groups.

Gst-nvinfer Property Group Supported Keys

| Property | Meaning | Type and Range | Example / Notes | Network Types | GIE Mode (Primary/Secondary) |
|---|---|---|---|---|---|
| num-detected-classes | Number of classes detected by the network | Integer, >0 | num-detected-classes=91 | Detector | Both |
| net-scale-factor | Pixel normalization factor (ignored if input-tensor-meta enabled) | Float, >0.0 | net-scale-factor=0.031 | All | Both |
| model-file | Pathname of the model file. Not required if model-engine-file is used | String | model-file=/home/ubuntu/model | All | Both |
| proto-file | Pathname of the prototxt file. Not required if model-engine-file is used | String | proto-file=/home/ubuntu/model.prototxt | All | Both |
| int8-calib-file | Pathname of the INT8 calibration file for dynamic range adjustment with an FP32 model | String | int8-calib-file=/home/ubuntu/int8_calib | All | Both |
| batch-size | Number of frames or objects to be inferred together in a batch | Integer, >0 | batch-size=30 | All | Both |
| input-tensor-from-meta | Use preprocessed input tensors attached as metadata instead of preprocessing inside the plugin. If this is set, ensure that the batch-size of nvinfer is equal to the sum of ROIs set in the gst-nvdspreprocess plugin config file. | Boolean | input-tensor-from-meta=1 | All | Primary |
| tensor-meta-pool-size | Size of the output tensor meta pool | Integer, >0 | tensor-meta-pool-size=20 | All | Both |
| model-engine-file | Pathname of the serialized model engine file | String | model-engine-file=/home/ubuntu/model.engine | All | Both |
| onnx-file | Pathname of the ONNX model file | String | onnx-file=/home/ubuntu/model.onnx | All | Both |
| enable-dbscan | Indicates whether to use DBSCAN or the OpenCV groupRectangles() function for grouping detected objects. DEPRECATED. Use cluster-mode instead. | Boolean | enable-dbscan=1 | Detector | Both |
| labelfile-path | Pathname of a text file containing the labels for the model | String | labelfile-path=/home/ubuntu/model_labels.txt | Detector & classifier | Both |
| mean-file | Pathname of mean data file in PPM format (ignored if input-tensor-meta enabled) | String | mean-file=/home/ubuntu/model_meanfile.ppm | All | Both |
| gie-unique-id | Unique ID to be assigned to the GIE to enable the application and other elements to identify detected bounding boxes and labels | Integer, >0 | gie-unique-id=2 | All | Both |
| operate-on-gie-id | Unique ID of the GIE on whose metadata (bounding boxes) this GIE is to operate | Integer, >0 | operate-on-gie-id=1 | All | Both |
| operate-on-class-ids | Class IDs of the parent GIE on which this GIE is to operate | Semicolon delimited integer array | operate-on-class-ids=1;2 (operates on objects with class IDs 1 and 2 generated by the parent GIE). If set to -1, it operates on all class IDs. | All | Both |
| interval | Specifies the number of consecutive batches to be skipped for inference | Integer, ≥0 | interval=1 | All | Primary |
| input-object-min-width | Secondary GIE infers only on objects with this minimum width | Integer, ≥0 | input-object-min-width=40 | All | Secondary |
| input-object-min-height | Secondary GIE infers only on objects with this minimum height | Integer, ≥0 | input-object-min-height=40 | All | Secondary |
| input-object-max-width | Secondary GIE infers only on objects with this maximum width | Integer, ≥0 | input-object-max-width=256 (0 disables the threshold) | All | Secondary |
| input-object-max-height | Secondary GIE infers only on objects with this maximum height | Integer, ≥0 | input-object-max-height=256 | All | Both |
| network-mode | Data format to be used by inference | Integer; 0: FP32, 1: INT8, 2: FP16, 3: BEST | network-mode=0 | All | Both |
| offsets | Array of mean values of color components to be subtracted from each pixel. Array length must equal the number of color components in the frame. The plugin multiplies mean values by net-scale-factor (ignored if input-tensor-meta enabled). | Semicolon delimited float array, all values ≥0 | offsets=77.5;21.2;11.8 | All | Both |
| parse-bbox-func-name | Name of the custom bounding box parsing function. If not specified, Gst-nvinfer uses the internal function for the resnet model provided by the SDK | String | parse-bbox-func-name=parse_bbox_resnet | Detector | Both |
| parse-bbox-instance-mask-func-name | Name of the custom instance segmentation parsing function. It is mandatory for instance segmentation networks, as there is no internal function. | String | parse-bbox-instance-mask-func-name=NvDsInferParseCustomMrcnnTLT | Instance Segmentation | Primary |
| custom-lib-path | Absolute pathname of a library containing custom method implementations for custom models | String | custom-lib-path=/home/ubuntu/libresnet_custom_impl.so | All | Both |
| model-color-format | Color format required by the model (ignored if input-tensor-meta enabled) | Integer; 0: RGB, 1: BGR, 2: GRAY | model-color-format=0 | All | Both |
| classifier-async-mode | Enables inference on detected objects and asynchronous metadata attachment. Works only when tracker IDs are attached. Pushes the buffer downstream without waiting for inference results, then attaches the metadata to the next Gst Buffer in its internal queue once the inference results are available. | Boolean | classifier-async-mode=1 | Classifier | Secondary |
| process-mode | Mode (primary or secondary) in which the element is to operate (ignored if input-tensor-meta enabled) | Integer; 1=Primary, 2=Secondary | process-mode=1 | All | Both |
| classifier-threshold | Minimum threshold label probability. The GIE outputs the label having the highest probability if it is greater than this threshold | Float, ≥0 | classifier-threshold=0.4 | Classifier | Both |
| secondary-reinfer-interval | Re-inference interval for objects, in frames | Integer, ≥0 | secondary-reinfer-interval=15 | Detector & Classifier | Secondary |
| output-tensor-meta | Gst-nvinfer attaches raw tensor output as Gst Buffer metadata. | Boolean | output-tensor-meta=1 | All | Both |
| output-instance-mask | Gst-nvinfer attaches instance mask output in object metadata. | Boolean | output-instance-mask=1 | Instance Segmentation | Primary |
| enable-dla | Indicates whether to use the DLA engine for inferencing. Note: DLA is supported only on NVIDIA® Jetson AGX Orin™ and NVIDIA® Jetson Orin NX™. Currently work in progress. | Boolean | enable-dla=1 | All | Both |
| use-dla-core | DLA core to be used. Note: Supported only on Jetson AGX Orin and Jetson Orin NX. Currently work in progress. | Integer, ≥0 | use-dla-core=0 | All | Both |
| network-type | Type of network | Integer; 0: Detector, 1: Classifier, 2: Segmentation, 3: Instance Segmentation | network-type=1 | All | Both |
| maintain-aspect-ratio | Indicates whether to maintain aspect ratio while scaling input. | Boolean | maintain-aspect-ratio=1 | All | Both |
| symmetric-padding | Indicates whether to pad image symmetrically while scaling input. DeepStream pads the images asymmetrically by default. | Boolean | symmetric-padding=1 | All | Both |
| parse-classifier-func-name | Name of the custom classifier output parsing function. If not specified, Gst-nvinfer uses the internal parsing function for softmax layers. | String | parse-classifier-func-name=parse_bbox_softmax | Classifier | Both |
| custom-network-config | Pathname of the configuration file for custom networks available in the custom interface for creating CUDA engines. | String | custom-network-config=/home/ubuntu/network.config | All | Both |
| tlt-encoded-model | Pathname of the TAO toolkit encoded model. | String | tlt-encoded-model=/home/ubuntu/model.etlt | All | Both |
| tlt-model-key | Key for the TAO toolkit encoded model. | String | tlt-model-key=abc | All | Both |
| segmentation-threshold | Confidence threshold for the segmentation model to output a valid class for a pixel. If confidence is less than this threshold, class output for that pixel is −1. | Float, ≥0.0 | segmentation-threshold=0.3 | Segmentation, Instance segmentation | Both |
| segmentation-output-order | Segmentation network output layer order | Integer; 0: NCHW, 1: NHWC | segmentation-output-order=1 | Segmentation | Both |
| workspace-size | Workspace size to be used by the engine, in MB | Integer, >0 | workspace-size=45 | All | Both |
| force-implicit-batch-dim | When a network supports both implicit batch dimension and full dimension, force the implicit batch dimension mode. | Boolean | force-implicit-batch-dim=1 | All | Both |
| engine-create-func-name | Name of the custom TensorRT CudaEngine creation function. Refer to the “Custom Model Implementation Interface” section for details | String | engine-create-func-name=NvDsInferYoloCudaEngineGet | All | Both |
| cluster-mode | Clustering algorithm to use. Refer to the next table for configuring the algorithm-specific parameters. Refer to Clustering algorithms supported by nvinfer for more information | Integer; 0: OpenCV groupRectangles(), 1: DBSCAN, 2: Non Maximum Suppression, 3: DBSCAN + NMS Hybrid, 4: No clustering | cluster-mode=2 (cluster-mode=4 for instance segmentation) | Detector | Both |
| filter-out-class-ids | Filter out detected objects belonging to specified class-ids | Semicolon delimited integer array | filter-out-class-ids=1;2 | | |
| scaling-filter | The filter to use for scaling frames / object crops to network resolution (ignored if input-tensor-meta enabled) | Integer, refer to enum NvBufSurfTransform_Inter in nvbufsurftransform.h for valid values | scaling-filter=1 | All | Both |
| scaling-compute-hw | Compute hardware to use for scaling frames / object crops to network resolution (ignored if input-tensor-meta enabled) | Integer; 0: Platform default – GPU (dGPU), VIC (Jetson), 1: GPU, 2: VIC (Jetson only) | scaling-compute-hw=2 | All | Both |
| output-io-formats | Specifies the data type and order for bound output layers. For layers not specified, defaults to FP32 and CHW | Semicolon-separated list of format `<output-layer1-name>:<data-type>:<order>;<output-layer2-name>:<data-type>:<order>`. data-type should be one of [fp32, fp16, int32, int8]; order should be one of [chw, chw2, chw4, hwc8, chw16, chw32] | output-io-formats=output_cov/Sigmoid:0:fp32:chw;output_bbox/BiasAdd:0:fp32:chw | All | Both |
| layer-device-precision | Specifies the device type and precision for any layer in the network | Semicolon-separated list of format `<layer1-name>:<precision>:<device-type>;<layer2-name>:<precision>:<device-type>;`. precision should be one of [fp32, fp16, int8]; device-type should be one of [gpu, dla] | layer-device-precision=output_cov/Sigmoid:0:fp32:gpu;output_bbox/BiasAdd:0:fp32:gpu; | All | Both |
| network-input-order | Order of the network input layer (ignored if input-tensor-meta enabled) | Integer; 0: NCHW, 1: NHWC | network-input-order=1 | All | Both |
| classifier-type | Description of what the classifier does | String (alphanumeric, ‘-’ and ‘_’ allowed, no spaces) | classifier-type=vehicletype | Classifier | Both |
| crop-objects-to-roi-boundary | Clip the object bounding boxes to fit within the specified ROI boundary. | Boolean | crop-objects-to-roi-boundary=1 | Detector | Both |
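To make the secondary-mode selection keys concrete, here is a Python sketch of how operate-on-gie-id, operate-on-class-ids, and the input-object-min/max-width/height keys could combine to decide whether an upstream object is inferred on. ObjectInfo and should_infer are hypothetical names; the sketch assumes that -1 in operate-on-class-ids selects all classes and that a maximum of 0 disables that limit (the table states this for input-object-max-width; applying it to the height limit is an assumption).

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ObjectInfo:
    """Hypothetical stand-in for the relevant fields of an upstream object."""
    gie_id: int    # unique ID of the GIE that produced this object
    class_id: int
    width: int
    height: int


def should_infer(obj: ObjectInfo,
                 operate_on_gie_id: int,
                 operate_on_class_ids: Sequence[int],
                 min_w: int = 0, min_h: int = 0,
                 max_w: int = 0, max_h: int = 0) -> bool:
    if obj.gie_id != operate_on_gie_id:
        return False  # object came from a different parent GIE
    # -1 in operate-on-class-ids means "operate on every class".
    if -1 not in operate_on_class_ids and obj.class_id not in operate_on_class_ids:
        return False
    if obj.width < min_w or obj.height < min_h:
        return False
    # A maximum of 0 is treated as "threshold disabled".
    if max_w and obj.width > max_w:
        return False
    if max_h and obj.height > max_h:
        return False
    return True
```

For example, with operate-on-gie-id=1 and operate-on-class-ids=1;2, a 100×50 object of class 2 from GIE 1 passes, while the same object from GIE 2 does not.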

Gst-nvinfer Class-attributes Group Supported Keys

| Name | Description | Type and Range | Example / Notes | Network Types | GIE Mode (Primary/Secondary) |
|---|---|---|---|---|---|
| threshold | Detection threshold | Float, ≥0 | threshold=0.5 | Object detector | Both |
| pre-cluster-threshold | Detection threshold to be applied prior to the clustering operation | Float, ≥0 | pre-cluster-threshold=0.5 | Object detector | Both |
| post-cluster-threshold | Detection threshold to be applied after the clustering operation | Float, ≥0 | post-cluster-threshold=0.5 | Object detector | Both |
| eps | Epsilon value for the OpenCV groupRectangles() function and the DBSCAN algorithm | Float, ≥0 | eps=0.2 | Object detector | Both |
| group-threshold | Threshold value for rectangle merging for the OpenCV groupRectangles() function | Integer, ≥0 | group-threshold=1 (0 disables the clustering functionality) | Object detector | Both |
| minBoxes | Minimum number of points required to form a dense region for the DBSCAN algorithm | Integer, ≥0 | minBoxes=1 (0 disables the clustering functionality) | Object detector | Both |
| dbscan-min-score | Minimum sum of confidence of all the neighbors in a cluster for it to be considered a valid cluster. | Float, ≥0 | dbscan-min-score=0.7 | Object detector | Both |
| nms-iou-threshold | Maximum IOU score between two proposals after which the proposal with the lower confidence will be rejected. | Float, ≥0 | nms-iou-threshold=0.2 | Object detector | Both |
| roi-top-offset | Offset of the RoI from the top of the frame. Only objects within the RoI are output. | Integer, ≥0 | roi-top-offset=200 | Object detector | Both |
| roi-bottom-offset | Offset of the RoI from the bottom of the frame. Only objects within the RoI are output. | Integer, ≥0 | roi-bottom-offset=200 | Object detector | Both |
| detected-min-w | Minimum width in pixels of detected objects to be output by the GIE | Integer, ≥0 | detected-min-w=64 | Object detector | Both |
| detected-min-h | Minimum height in pixels of detected objects to be output by the GIE | Integer, ≥0 | detected-min-h=64 | Object detector | Both |
| detected-max-w | Maximum width in pixels of detected objects to be output by the GIE | Integer, ≥0 | detected-max-w=200 (0 disables the property) | Object detector | Both |
| detected-max-h | Maximum height in pixels of detected objects to be output by the GIE | Integer, ≥0 | detected-max-h=200 (0 disables the property) | Object detector | Both |
| topk | Keep only the top K objects with the highest detection scores. | Integer, ≥0. -1 to disable | topk=10 | Object detector | Both |
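The per-class detection keys can be read as a chain of filters on a detector's output. The Python sketch below applies roi-top-offset/roi-bottom-offset, detected-min/max-w/h (0 disabling the max limits), and topk (-1 disabling it) to a list of already-clustered boxes. The Detection type, the function name, the order of the filters, and the interpretation that a box must lie entirely inside the RoI band are assumptions for illustration; Gst-nvinfer's own ordering and RoI test may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    left: float
    top: float
    width: float
    height: float
    score: float


def filter_detections(dets: List[Detection], frame_height: float,
                      roi_top_offset: float = 0, roi_bottom_offset: float = 0,
                      min_w: float = 0, min_h: float = 0,
                      max_w: float = 0, max_h: float = 0,
                      topk: int = -1) -> List[Detection]:
    roi_top = roi_top_offset
    roi_bottom = frame_height - roi_bottom_offset
    kept: List[Detection] = []
    for d in dets:
        # Assumption: the whole box must lie inside the RoI band.
        if d.top < roi_top or d.top + d.height > roi_bottom:
            continue
        if d.width < min_w or d.height < min_h:
            continue
        # A max limit of 0 disables that limit.
        if (max_w and d.width > max_w) or (max_h and d.height > max_h):
            continue
        kept.append(d)
    # Highest scores first, then keep at most topk (-1 keeps everything).
    kept.sort(key=lambda d: d.score, reverse=True)
    if topk >= 0:
        kept = kept[:topk]
    return kept
```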

Note

UFF model support is removed from TRT 10.3.

Gst Properties

The values set through Gst properties override the values of properties in the configuration file. The application does this for certain properties that it needs to set programmatically. The following table describes the Gst-nvinfer plugin’s Gst properties.

| Property | Meaning | Type and Range | Example / Notes |
|---|---|---|---|
| config-file-path | Absolute pathname of configuration file for the Gst-nvinfer element | String | config-file-path=config_infer_primary.txt |
| process-mode | Infer processing mode: 1=Primary Mode, 2=Secondary Mode | Integer, 1 or 2 | process-mode=1 |
| unique-id | Unique ID identifying metadata generated by this GIE | Integer, 0 to 4,294,967,295 | unique-id=1 |
| infer-on-gie-id | See operate-on-gie-id in the configuration file table | Integer, 0 to 4,294,967,295 | infer-on-gie-id=1 |
| operate-on-class-ids | See operate-on-class-ids in the configuration file table | An array of colon-separated integers (class-ids) | operate-on-class-ids=1:2:4 |
| filter-out-class-ids | See filter-out-class-ids in the configuration file table | Semicolon delimited integer array | filter-out-class-ids=1;2 |
| model-engine-file | Absolute pathname of the pre-generated serialized engine file for the model | String | model-engine-file=model_b1_fp32.engine |
| batch-size | Number of frames/objects to be inferred together in a batch | Integer, 1 to 4,294,967,295 | batch-size=4 |
| interval | Number of consecutive batches to be skipped for inference | Integer, 0 to 32 | interval=0 |
| gpu-id | Device ID of GPU to use for pre-processing/inference (dGPU only) | Integer, 0 to 4,294,967,295 | gpu-id=1 |
| raw-output-file-write | Whether to write the raw inference output to a file | Boolean | raw-output-file-write=1 |
| raw-output-generated-callback | Pointer to the raw output generated callback function | Pointer | Cannot be set through gst-launch |
| raw-output-generated-userdata | Pointer to user data to be supplied with raw-output-generated-callback | Pointer | Cannot be set through gst-launch |
| output-tensor-meta | Indicates whether to attach tensor outputs as meta on GstBuffer. | Boolean | output-tensor-meta=0 |
| output-instance-mask | Gst-nvinfer attaches instance mask output in object metadata. | Boolean | output-instance-mask=1 |
| input-tensor-meta | Use preprocessed input tensors attached as metadata instead of preprocessing inside the plugin | Boolean | input-tensor-meta=1 |
| crop-objects-to-roi-boundary | Clip the object bounding boxes to fit within the specified ROI boundary | Boolean | crop-objects-to-roi-boundary=1 |
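Note that operate-on-class-ids is semicolon-delimited in the configuration file but colon-separated when set as a Gst property. A small, hypothetical Python helper that accepts either form and honors the -1 ("all classes") convention from the configuration table might look like this:

```python
from typing import Optional, Set


def parse_class_ids(value: str, sep: str) -> Optional[Set[int]]:
    """Parse "1;2" (config file, sep=";") or "1:2:4" (Gst property, sep=":").

    Returns None to mean "operate on all classes" when -1 is present.
    Empty tokens, such as those left by a trailing separator, are ignored.
    """
    ids = {int(tok) for tok in value.split(sep) if tok.strip()}
    return None if -1 in ids else ids


print(parse_class_ids("1;2", ";"))    # {1, 2}
print(parse_class_ids("1:2:4", ":"))  # {1, 2, 4}
print(parse_class_ids("-1", ";"))     # None
```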

Clustering algorithms supported by nvinfer

cluster-mode = 0 | GroupRectangles

GroupRectangles is a clustering algorithm from OpenCV library which clusters rectangles of similar size and location using the rectangle equivalence criteria. Link to API documentation - https://docs.opencv.org/3.4/d5/d54/group__objdetect.html#ga3dba897ade8aa8227edda66508e16ab9

cluster-mode = 1 | DBSCAN

Density-based spatial clustering of applications with noise (DBSCAN) is a clustering algorithm that identifies clusters by checking whether a specific rectangle has a minimum number of neighbors in its vicinity, as defined by the eps value. The algorithm then normalizes each valid cluster to a single rectangle, which is output as a valid bounding box if its confidence is greater than the threshold.
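The following Python sketch shows textbook DBSCAN applied to detection boxes, to illustrate the eps / minBoxes / dbscan-min-score roles described above. It is not nvinfer's implementation: the distance measure (1 − IoU), counting a box as its own neighbor, normalizing a cluster to a score-weighted average rectangle, and using the highest member score as the cluster score are all assumptions of this sketch.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float, float]  # x1, y1, x2, y2, score


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def dbscan_boxes(boxes: List[Box], eps: float, min_boxes: int,
                 min_score: float) -> List[Box]:
    n = len(boxes)
    labels: List[Optional[int]] = [None] * n  # None: unvisited, -1: noise

    def neighbors(i: int) -> List[int]:
        # Assumed distance 1 - IoU; the neighborhood includes the box itself.
        return [j for j in range(n) if 1.0 - iou(boxes[i], boxes[j]) <= eps]

    num_clusters = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_boxes:
            labels[i] = -1  # noise for now; may become a border point later
            continue
        labels[i] = num_clusters
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = num_clusters  # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = num_clusters
            more = neighbors(j)
            if len(more) >= min_boxes:  # core point: keep expanding the cluster
                queue.extend(more)
        num_clusters += 1

    results: List[Box] = []
    for c in range(num_clusters):
        members = [boxes[k] for k in range(n) if labels[k] == c]
        total = sum(b[4] for b in members)
        if total <= 0 or total < min_score:  # dbscan-min-score style check
            continue
        # Normalize the cluster to one score-weighted average rectangle.
        coords = [sum(b[t] * b[4] for b in members) / total for t in range(4)]
        results.append((coords[0], coords[1], coords[2], coords[3],
                        max(b[4] for b in members)))
    return results
```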

cluster-mode = 2 | NMS

Non-maximum suppression (NMS) is a clustering algorithm that filters overlapping rectangles based on their degree of overlap (IOU), which is compared against a threshold. The rectangle with the highest confidence score is preserved first, and the rectangles that overlap it by more than the threshold are removed; this repeats iteratively over the remaining rectangles.
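A minimal greedy NMS sketch in Python, following the description above and the nms-iou-threshold definition (a proposal whose IOU with a higher-confidence kept proposal exceeds the threshold is rejected). The box tuple layout and function names are assumptions for illustration, not the Gst-nvinfer API.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float, float]  # x1, y1, x2, y2, score


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], iou_threshold: float) -> List[Box]:
    # Visit proposals from highest to lowest confidence.
    remaining = sorted(boxes, key=lambda b: b[4], reverse=True)
    kept: List[Box] = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        # Drop every proposal that overlaps the kept one by more than the threshold.
        remaining = [b for b in remaining if iou(best, b) <= iou_threshold]
    return kept


boxes = [(20, 20, 30, 30, 0.7), (1, 0, 11, 10, 0.8), (0, 0, 10, 10, 0.9)]
print(nms(boxes, 0.5))  # [(0, 0, 10, 10, 0.9), (20, 20, 30, 30, 0.7)]
```

The two left-hand boxes overlap with an IOU of about 0.82, so only the higher-scoring one survives at a threshold of 0.5.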

cluster-mode = 3 | Hybrid

The hybrid clustering algorithm uses both DBSCAN and NMS in a two-step process. DBSCAN is first applied to form unnormalized clusters from the proposals while removing outliers. NMS is then applied to these clusters to select the final rectangles for output.

cluster-mode = 4 | No clustering

No clustering is applied, and all the bounding box rectangle proposals are returned as is.

Tensor Metadata

The Gst-nvinfer plugin can attach raw output tensor data generated by a TensorRT inference engine as metadata. It is added as an NvDsInferTensorMeta in the frame_user_meta_list member of NvDsFrameMeta for primary (full frame) mode, or in the obj_user_meta_list member of NvDsObjectMeta for secondary (object) mode.

To read or parse inference raw tensor data of output layers

Enable property output-tensor-meta or enable the same-named attribute in the configuration file for the Gst-nvinfer plugin.

When operating as primary GIE, NvDsInferTensorMeta is attached to each frame’s (each NvDsFrameMeta object’s) frame_user_meta_list. When operating as secondary GIE, NvDsInferTensorMeta is attached to each NvDsObjectMeta object’s obj_user_meta_list. When operating in preprocessed tensor input mode (using the nvdspreprocess plugin), the tensor metadata is accessible through the preprocess batch metadata: first look for GstNvDsPreProcessBatchMeta in batch_meta->batch_user_meta_list, then access the roi_vector, which contains NvDsRoiMeta objects.

Metadata attached by Gst-nvinfer can be accessed in a GStreamer pad probe attached downstream from the Gst-nvinfer instance.

The NvDsInferTensorMeta object’s metadata type is set to NVDSINFER_TENSOR_OUTPUT_META. To get this metadata, you must iterate over the NvDsUserMeta user metadata objects in the list referenced by frame_user_meta_list or obj_user_meta_list. For preprocessed tensor input mode, however, you must first find the GstNvDsPreProcessBatchMeta in batch_meta->batch_user_meta_list, then access the roi_vector, which contains NvDsRoiMeta objects, and iterate over each ROI meta’s roi_user_meta_list to find NVDSINFER_TENSOR_OUTPUT_META. Each NvDsRoiMeta contains frame_meta and object_meta references that identify the source frame and object.
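The lookup order for primary and secondary mode can be modeled in plain Python. The classes below are hypothetical stand-ins that mirror only the field names mentioned in this section (frame_user_meta_list, obj_user_meta_list, and the metadata type); they are not the DeepStream C structures or the Python bindings, where the lists are linked lists and each user meta has to be cast before use. The preprocessed-tensor (ROI) path is not modeled.

```python
from dataclasses import dataclass, field
from typing import Any, List

# Stand-in for the NVDSINFER_TENSOR_OUTPUT_META enum value (assumption).
NVDSINFER_TENSOR_OUTPUT_META = "NVDSINFER_TENSOR_OUTPUT_META"


@dataclass
class UserMeta:
    meta_type: str
    user_meta_data: Any


@dataclass
class ObjectMeta:
    obj_user_meta_list: List[UserMeta] = field(default_factory=list)


@dataclass
class FrameMeta:
    frame_user_meta_list: List[UserMeta] = field(default_factory=list)
    obj_meta_list: List[ObjectMeta] = field(default_factory=list)


def collect_tensor_meta(frames: List[FrameMeta]) -> List[Any]:
    found: List[Any] = []
    for frame in frames:
        # Primary (full-frame) mode: tensor meta hangs off the frame.
        for um in frame.frame_user_meta_list:
            if um.meta_type == NVDSINFER_TENSOR_OUTPUT_META:
                found.append(um.user_meta_data)
        # Secondary (object) mode: tensor meta hangs off each object.
        for obj in frame.obj_meta_list:
            for um in obj.obj_user_meta_list:
                if um.meta_type == NVDSINFER_TENSOR_OUTPUT_META:
                    found.append(um.user_meta_data)
    return found
```

In a real pipeline this traversal would run inside a pad probe downstream of Gst-nvinfer, as noted above.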

For more information about Gst-nvinfer tensor metadata usage, see the source code in sources/apps/sample_apps/deepstream_infer_tensor_meta-test.cpp, provided in the DeepStream SDK samples.

Segmentation Metadata

The Gst-nvinfer plugin attaches the output of the segmentation model as user meta in an instance of NvDsInferSegmentationMeta with meta_type set to NVDSINFER_SEGMENTATION_META. The user meta is added to the frame_user_meta_list member of NvDsFrameMeta for primary (full frame) mode, or the obj_user_meta_list member of NvDsObjectMeta for secondary (object) mode.
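As an illustration of the per-pixel output that segmentation-threshold controls, this Python sketch turns per-class confidences for one frame in CHW order (one H×W plane per class) into a class map, writing −1 where the best confidence is below the threshold. It models the behavior described for segmentation-threshold under the assumption that the best class is chosen by arg-max; it is not Gst-nvinfer's code, and the function name is hypothetical.

```python
from typing import List


def class_map_from_probs(probs: List[List[List[float]]],
                         threshold: float) -> List[List[int]]:
    """probs[c][y][x] is the confidence of class c at pixel (x, y)."""
    num_classes = len(probs)
    height, width = len(probs[0]), len(probs[0][0])
    out = [[-1] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Pick the most confident class for this pixel.
            best_c = max(range(num_classes), key=lambda c: probs[c][y][x])
            # Below the threshold the pixel keeps the "no valid class" value -1.
            if probs[best_c][y][x] >= threshold:
                out[y][x] = best_c
    return out


probs = [[[0.9, 0.2, 0.25]],   # class 0, a 1x3 image
         [[0.1, 0.8, 0.2]]]    # class 1
print(class_map_from_probs(probs, 0.3))  # [[0, 1, -1]]
```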

For guidance on how to access user metadata, see User/Custom Metadata Addition inside NvDsBatchMeta and Tensor Metadata sections.