Container Builder

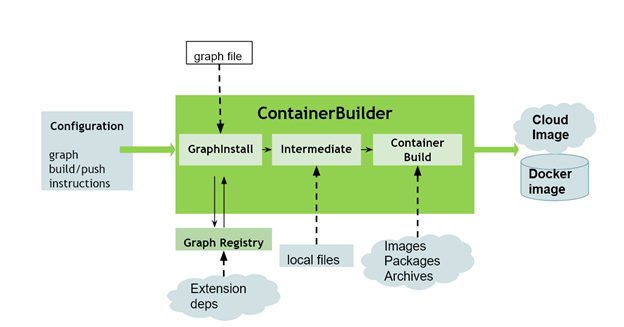

Container Builder (CB) is used to build Docker images for AI application graphs created with Graph Composer. In addition to building Docker images, it can also push the final image to the cloud for deployment.

Container Builder interacts with the Registry to:

Download extensions and other related files to your local system.

Copy other required files specified in the config file to generate an intermediate work folder and an optimized Dockerfile.

Convert archive and package dependencies and instructions into Dockerfile instructions and try to build a minimal-size local image. For further optimization, you can configure Container Builder to use a multi-stage Docker build.

Container Builder supports graph installation and container image building on x86 Ubuntu systems. It can also build arm64 images on x86_64 platforms; to do this, you need to install QEMU and binfmt.

Prerequisites

Install a supported Docker version: https://docs.docker.com/engine/install/ubuntu/

Log in to each server you need to pull images from or push images to:

$ docker login server:port

If you need NGC images and resources, follow https://ngc.nvidia.com/setup/api-key to request access and get an API key. Then run:

$ docker login nvcr.io

Some features (e.g. squash) require Docker experimental support. To enable it, add { "experimental": true } to /etc/docker/daemon.json.

Then restart Docker:

$ sudo systemctl restart docker
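If /etc/docker/daemon.json already contains other settings, replacing the whole file with { "experimental": true } would drop them. The following minimal Python sketch illustrates a safe approach and is not part of Container Builder. It merges the flag into the existing JSON. The function names are hypothetical, and writing the real file requires root.

```python
import json
from pathlib import Path


def merge_experimental(daemon_json_text: str) -> str:
    """Return daemon.json content with "experimental": true set.

    Existing keys are kept; empty input starts from an empty object.
    """
    config = json.loads(daemon_json_text) if daemon_json_text.strip() else {}
    config["experimental"] = True
    return json.dumps(config, indent=2) + "\n"


def enable_experimental(path: Path = Path("/etc/docker/daemon.json")) -> None:
    """Rewrite the daemon config in place (root is needed for /etc/docker)."""
    current = path.read_text() if path.exists() else ""
    path.write_text(merge_experimental(current))
```

Run enable_experimental() as root, then restart Docker so the daemon reloads the file.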

To build ARM Docker images on an x86_64 platform, install QEMU and binfmt. An OS restart might be needed.

$ sudo apt-get install qemu binfmt-support qemu-user-static

$ docker run --rm --privileged multiarch/qemu-user-static --reset -p yes

To verify that emulation works, run the following command; it should print aarch64:

$ docker run --rm -t arm64v8/ubuntu uname -m

Install the Graph Composer package, and make sure the container_builder executable is installed.
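To confirm that the required command-line tools are reachable before building, a minimal Python sketch can look them up on PATH. It checks only that the executables are present, not their versions. The helper name missing_tools is hypothetical.

```python
import shutil


def missing_tools(tools=("docker", "container_builder")):
    """Return the required executables that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    print("missing:", ", ".join(missing) if missing else "none")
```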

Container Builder Features

Image building is based on docker build. Container Builder provides two stage models for building the image, compile_stage and clean_stage. Some features apply to only one stage model. For more details, see the feature table.

| Feature | Description | Stage (compile_stage/clean_stage) |
|---|---|---|
| Local files/folders copy | Copies local files/folders to the destination path in the current image. | both |
| Stage files/folders copy | Copies files/folders from other target stages or other images to the destination path in the current image/stage. | both |
| Multiple DeepStream graph files installation | Installs the extensions and relevant files of one or more DeepStream graph files into the image. | N/A, final image |
| Multiple registry repo create/destroy | Specifies a list of registry repos to create and delete. | N/A, final image |
| Package installation by apt/pip3 | Installs Debian packages and pip3 packages online. | both |
| HTTP archive download and installation | Downloads HTTP archives and runs custom commands afterward. | both |
| git repo clone and custom build | Clones a specific branch/tag/commit from a repo and runs a custom build. | compile_stage only |
| Multi-stage docker build | Builds the final target image from multiple stages. | N/A, final image |
| Custom cmdline runs | Runs custom commands on an image stage. | clean_stage |
| Dockerfile misc | Base image selection and other settings: WORKDIR/ENV/ENTRYPOINT/LABEL. | clean_stage |
| docker build options | Supports platforms, arguments, network, cache, squash. | N/A, final image |
| Push image to cloud | Pushes the locally built image to a remote server. | N/A, final image |
| Cross-platform container build | Supports building containers for other platforms (e.g. ARM64) on x86_64. | N/A |

Container Builder Tool Usage

The CB (container_builder) tool takes very few input arguments; all user settings are collected in a YAML config file. To generate a container, update the config file and run the following command, where graph_target_key can be x86 or aarch64:

$ container_builder build -c config.yaml -d graph_target_key

The container image can also be pushed to a remote repository with the following command:

$ container_builder push -c config.yaml -d graph_target.yaml

For more details of the config settings, see the Configuration Specification. The graph target key corresponds to a graph target configuration stored in /opt/nvidia/graph-composer/graph_targets.yaml, which the registry uses during graph install. See the registry CLI graph install documentation for a sample file.

The default log level is INFO, and log output is displayed on screen. The log-level, log-file, and other arguments are available for debugging. For more details, see the help option:

$ container_builder -h
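For scripted builds, for example in CI, the command line can be assembled programmatically. The sketch below is a hypothetical Python helper. It uses only the -c, -d, and -wd options that appear in this document. It does not cover the log options or any other flags; container_builder -h lists those.

```python
import subprocess


def container_builder_argv(action, config_files, target_keys=(), workdir=None):
    """Assemble a container_builder command line.

    action: "build" or "push".
    config_files, target_keys: values for -c and -d; several of each give
    the multiarch form of the command.
    workdir: optional value for -wd.
    """
    if action not in ("build", "push"):
        raise ValueError(f"unsupported action: {action}")
    if not config_files:
        raise ValueError("at least one config file is required")
    argv = ["container_builder", action, "-c", *config_files]
    if target_keys:
        argv += ["-d", *target_keys]
    if workdir:
        argv += ["-wd", workdir]
    return argv


def run(argv):
    """Run the command and raise CalledProcessError on a non-zero exit."""
    subprocess.run(argv, check=True)
```

For example, run(container_builder_argv("build", ["config.yaml"], ["x86"])) is equivalent to the build command shown above.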

Multiarch Build

The CB tool can also build multiarch images with the following command:

$ container_builder build -c config_file1.yaml config_file2.yaml -d target_key1 target_key2 -wd [working dir]

You can omit the target keys from the command if each config file specifies its target, as shown below:

%YAML 1.2
---
target: x86 # optional
unique_stage: final_image
base_image: auto
stage_model: clean_stage

Note that the multiarch feature also requires the docker_push configuration; otherwise, CB fails to generate the final multiarch image.
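You can check the preconditions above before launching a multiarch build. The following Python sketch takes configs that have already been parsed into dicts: one list of YAML documents per file, for example loaded with PyYAML's safe_load_all. The function name is illustrative. So is the assumption that config files and target keys are paired by position; neither comes from the Container Builder implementation.

```python
def check_multiarch(configs, target_keys=()):
    """Check the documented preconditions for a multiarch build.

    configs: one entry per config file; each entry is the list of YAML
        documents of that file, already parsed into dicts.
    target_keys: the -d values from the command line, if any.
    Returns a list of problems; an empty list means the checks passed.
    """
    problems = []
    # Assumption: config files and target keys are paired by position.
    if target_keys and len(target_keys) != len(configs):
        problems.append("number of target keys must match number of config files")
    for index, documents in enumerate(configs):
        control = next((d for d in documents if "container_builder" in d), {})
        stages = [d for d in documents if "unique_stage" in d]
        if not control.get("docker_push"):
            problems.append(f"config {index}: docker_push is required for multiarch")
        if not target_keys and not any("target" in stage for stage in stages):
            problems.append(f"config {index}: no target key on the command line or in the file")
    return problems
```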

Run Container Builder

The following basic steps build a container from an existing Container Builder configuration file and then run the container.

Update the config file to start the build. Open /opt/nvidia/deepstream/deepstream/reference_graphs/deepstream-test1/ds_test1_container_builder_dgpu.yaml.

Specify the right base image with the correct DeepStream SDK version for the graph_files. If no base image is specified, Container Builder attempts to select one automatically from a pool of predefined base images in /opt/nvidia/graph-composer/container_builder.yaml; the container that most closely matches the graph target is selected.

base_image: "nvcr.io/nvidia/deepstream:x.x-x"

Specify the output image name in the docker_build section:

docker_build:
  image_name: deepstream_test1_dgpu:nvgcb_test

Make sure gxf_server has started by running the following command in a terminal:

$ systemctl --user status gxf_server

You can also run gxf_server on a different port by setting the environment variable GXF_SERVER_PORT. Currently, the Container Builder CLI supports only a locally running gxf_server, while Windows users and GUI-based Graph Composer users can set a remote address to connect to a remotely running gxf_server.

Run the Container Builder tool to build the image:

$ container_builder build -c ds_test1_container_builder_dgpu.yaml -d x86 -wd /opt/nvidia/deepstream/deepstream/reference_graphs/deepstream-test1/

Verify the image and the graph in the container, using the image name from the config file:

$ docker run --gpus all -v /tmp/.X11-unix:/tmp/.X11-unix <image_name>

Container Builder Configuration

The input config file for Container Builder follows the YAML 1.2 format rules: https://yaml.org/spec/1.2/spec.html.

The configuration settings consist of two major kinds of YAML document sections.

Container Builder main control section: specifies graph installation options, build/push options, and other host-side control options. Each config file can have only one control section, identified by the key field container_builder: name.

Container dockerfile stage section: all stage sections are converted into Dockerfile instructions. Users can specify multiple stage sections. There are two model templates for stages.

clean_stage model: the default model if none is specified. The output container image must have a clean_stage section as its final stage. Keep the final stage as clean as possible.

compile_stage model: used for extra work such as building binaries from source code and installing compile tools. It should be an intermediate stage; a clean_stage can then copy the required binaries from the compile_stage.

Note

Handle private information carefully when building Docker images with Container Builder. See the Dockerfile reference at https://docs.docker.com/engine/reference/builder/ to avoid exposing sensitive layers to the public. Multi-stage build (MSB) is one of the best practices for separating an internal source-code stage from a clean public stage. In Container Builder, use compile_stage to compile source code, then copy the results to a clean_stage for the final image. For more details, see https://docs.docker.com/develop/develop-images/multistage-build/
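The section rules above can be expressed as a small check, together with the per-field stage restrictions listed in the stage section specification. This Python sketch is hypothetical. It takes the YAML documents of one config file, already parsed into dicts, and reports rule violations. The documentation does not say whether Container Builder rejects misplaced fields or ignores them, so treat the messages as warnings.

```python
COMPILE_ONLY = {"git_repo_list", "ssh_key_host_copy"}
CLEAN_ONLY = {"env_list", "entrypoint"}
STAGE_MODELS = {"clean_stage", "compile_stage"}


def check_sections(documents):
    """Report violations of the documented section rules for one config file.

    documents: the YAML documents of the file, already parsed into dicts.
    """
    problems = []
    control = [d for d in documents if "container_builder" in d]
    stages = [d for d in documents if "container_builder" not in d]
    if len(control) > 1:
        problems.append("only one container_builder control section is allowed")
    names = [stage.get("unique_stage") for stage in stages]
    if None in names:
        problems.append("every stage section needs a unique_stage name")
    elif len(set(names)) != len(names):
        problems.append("unique_stage names must be unique")
    for stage in stages:
        name = stage.get("unique_stage")
        model = stage.get("stage_model", "clean_stage")  # documented default
        if model not in STAGE_MODELS:
            problems.append(f"{name}: unknown stage_model {model!r}")
            continue
        # Fields that the specification limits to the other stage model.
        restricted = CLEAN_ONLY if model == "compile_stage" else COMPILE_ONLY
        found = sorted(restricted.intersection(stage))
        if found:
            problems.append(f"{name}: {', '.join(found)} not allowed in {model}")
    return problems
```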

A Basic Example of Container Builder Configuration

This example has two sections: a clean_stage build section and a main control section.

During the stage build, the example:

Starts from base_image and installs some Debian and Python 3 packages into the target image

Installs archives

Copies files from the local machine and from another image

Finally, performs some cleanup and applies environment settings to the output target image.

The main control section installs the graph dependencies into the target image through the registry. You can also specify build options to control the stage build, and finally push the target image to the cloud.

Here is the sample config with inline comments.

# Container dockerfile Stage build section
---
# target: x86 # optional, can be used during multi-arch build
unique_stage: final_image # required, name must be unique
# base_image is required
base_image: "nvcr.io/nvidia/deepstream:8.0-triton-multiarch"
stage_model: clean_stage # Optional
# Install debian packages
apt_deps:
- curl
- ca-certificates
- tar
- python3
- python3-pip
# Install pip3 packages
pip3_deps:
- PyYAML>=5.4.1
# Copy local files to image
local_copy_files:
- src: "/opt/nvidia/graph-composer/gxe"
  # dst: "/opt/nvidia/graph-composer/gxe"
- src: "/opt/nvidia/graph-composer/libgxf_core.so"
  # dst: "/opt/nvidia/graph-composer/libgxf_core.so"
# Copy files from other images or other stages
stage_copy_files:
- src_stage: "nvcr.io/nvidia/deepstream:8.0-samples"
  src: "/opt/nvidia/deepstream/deepstream/samples"
  # dst: "/opt/nvidia/deepstream/deepstream/samples"
# Download HTTP archives and install
http_archives:
- url: https://host:port/archive.tar.bz2
  curl_option: "-u user:token"
  post_cmd: "tar -jxvf archive.tar.bz2 -C /"
# Clean up operations (-y: docker build cannot answer apt prompts)
custom_runs:
- "apt-get autoremove -y && ln -s /opt/nvidia/deepstream/deepstream/samples /samples"
# Specify WORKDIR
work_folder: /workspace/test/
# Specify multiple ENV
env_list:
  PATH: "/opt/nvidia/graph-composer:$PATH"
  LD_LIBRARY_PATH: "/opt/nvidia/graph-composer/:$LD_LIBRARY_PATH"
# specify ENTRYPOINT
#entrypoint: ["/opt/nvidia/graph-composer/gxe"]
# Container Builder Main Control Section
--- # delimiter required
container_builder: main # required, any string is ok for name
graph: # optional
  graph_files: [deepstream-test1.yaml] # graph file in local
  graph_dst: /workspace/test/ # destination path in target image
  # extension manifest location in target image
  manifest_dst: /workspace/test/
  # extensions installed location in target image
  ext_install_root: /workspace/test/
# docker build options
docker_build:
  image_name: deepstream_test1:nvgcb_test
  no_cache: true
  squash: false
# docker push list to cloud, optional
# username/password are optional if $ docker login already ran
docker_push:
- url: "nvcr.io/nvidian/user/deepstream_test1:nvgcb_test"
  username:
  password:
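The stage fields work_folder, env_list, and entrypoint correspond to the Dockerfile WORKDIR, ENV, and ENTRYPOINT instructions named in the comments above. The Python sketch below renders them that way, purely for illustration. The Dockerfile that Container Builder actually generates may differ, and render_misc is a hypothetical name.

```python
import json


def render_misc(stage):
    """Render work_folder, env_list and entrypoint as Dockerfile lines.

    Illustration only: Container Builder's generated Dockerfile may order
    or format these instructions differently.
    """
    lines = []
    if "work_folder" in stage:
        lines.append(f"WORKDIR {stage['work_folder']}")
    for key, value in stage.get("env_list", {}).items():
        # Docker expands $VAR references in ENV values at build time.
        lines.append(f'ENV {key}="{value}"')
    if "entrypoint" in stage:
        # Exec (JSON array) form, matching the list syntax of the config.
        lines.append(f"ENTRYPOINT {json.dumps(stage['entrypoint'])}")
    return lines
```

Because Docker substitutes $PATH inside an ENV value while building, an env_list entry such as PATH: "/opt/nvidia/graph-composer:$PATH" extends the base image's PATH instead of replacing it.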

A Multi-Stage Example

This example shows a multi-stage build. download_stage uses the compile_stage model to download all ONNX models from a private git repo, using a netrc file for permissions. The final image copies one specific file out of download_stage into its final location.

download_stage ends up only in intermediate layers, so the final image stays clean: it keeps minimal dependencies and contains no netrc file.

# use compile_stage to download all models through git
---
unique_stage: download_stage
base_image: "ubuntu:24.04"
stage_model: compile_stage
# copy netrc file into compile stage for git clone
local_copy_files:
- src: "/home/user/.netrc"
  dst: "/root/.netrc"
# download models into folder /downloads/models
git_repo_list:
- repo_folder: /downloads/models
  url: https://privatehost/user/models # a private host requires netrc
  tag: master
# use clean_stage for final image output
---
# Final Stage
unique_stage: final_image
base_image: "ubuntu:24.04"
stage_model: clean_stage
# copy a specific file out of download_stage into final_image
stage_copy_files:
- src_stage: "download_stage"
  src: "/downloads/models/modelA.onnx"
  dst: "/data/modelA.onnx"
# Container builder main control settings
---
# Container Builder Config
container_builder: builder_name # required
docker_build:
  image_name: "cb_multi_stage:cb_test"
  # specify step orders in case multiple stages out of order
  stage_steps: [download_stage, final_image]
  no_cache: true
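In a Docker multi-stage build, a stage name used as a copy source generally has to refer to a stage defined earlier, so the order in stage_steps matters. This Python sketch is a hypothetical helper that takes parsed documents as input. It derives the build order from stage_steps when present and from the order of the stage sections in the file otherwise. It then flags two problems: a stage_copy_files source stage that is not built first, and a final stage that is not a clean_stage.

```python
def build_order(documents):
    """Return (stage names in build order, list of ordering problems).

    documents: the YAML documents of one config file, parsed into dicts.
    The order is docker_build.stage_steps when set, otherwise the order of
    the stage sections in the file.
    """
    stages = {d["unique_stage"]: d for d in documents if "unique_stage" in d}
    control = next((d for d in documents if "container_builder" in d), {})
    order = control.get("docker_build", {}).get("stage_steps") or list(stages)
    problems = [f"unknown stage in stage_steps: {name}" for name in order if name not in stages]
    for position, name in enumerate(order):
        for copy in stages.get(name, {}).get("stage_copy_files", []):
            source = copy.get("src_stage")
            # A src_stage that is not a stage name is treated as an image.
            if source in stages and source not in order[:position]:
                problems.append(f"{name} copies from {source}, which is not built before it")
    final = stages.get(order[-1], {}) if order else {}
    if final.get("stage_model", "clean_stage") != "clean_stage":
        problems.append("the final stage must use the clean_stage model")
    return order, problems
```

Applied to the example above, the stage_steps setting yields the order download_stage, final_image with no problems. Without stage_steps, listing final_image first in the file would trigger both warnings.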

Container builder main control section specification

Note

All *dst fields whose values end with '/' denote a folder path on the target image. The meaning of *src depends on the real source path.

Fields |

Description |

Type and Range |

Example Notes |

container_builder |

The control section name |

string, required |

container_builder: main

|

graph |

A dictionary with graph file, extension and registry setting |

dictionary, optional |

graph:
  graph_files: [test1.yaml]
  manifest_dst: /workspace/test/

|

graph.graph_files |

DeepStream GXF graph files in YAML format, which can be generated by Composer |

list[string], required for graph |

graph:
  graph_files: [test1.yaml, test2.yaml]

|

graph.graph_dst |

Destination in the target image for the graph files. If not provided, users can copy the graph files through a stage build instead |

string, optional |

graph:
  graph_dst: /workspace/test1/

Must specify a folder path if multiple graph_files exist. |

graph.manifest_dst |

Destination in target image for the manifest files |

string, required for graph |

graph:
  manifest_dst: /workspace/test/

Must specify a folder path if multiple graph_files exist. |

graph.ext_install_root |

Destination in target image for graph extensions prefix directory |

string, optional. If not set, the behavior depends on the registry |

graph:
  ext_install_root: /opt/nvidia/graph-composer

Must specify a folder path |

docker_build.image_name |

Target image name with tag |

string, optional. If absent, a random name is used |

docker_build:
  image_name: nvgcb_test:21.04

|

docker_build.no_cache |

Build with or without cache. Cache is disabled by default |

bool, optional |

docker_build:
  no_cache: true

|

docker_build.squash |

Squash image layers to reduce image size. Requires Docker experimental support; see Prerequisites. Note: not all layers support squashing; if squashing some layers fails, disable squash |

bool, optional |

docker_build:
  squash: false

|

docker_build.network |

Network mode for docker build |

string, optional Default value is |

docker_build:
  network: host

|

docker_build.stage_steps |

The sequence of stage build sections, available when multi-stage build is enabled. |

list[string], optional. If not set, the default sequence is the order of the stage sections |

---
unique_stage: final_stage
...
---
unique_stage: download_stage
...
---
docker_build:
  stage_steps: [download_stage, final_stage]

|

docker_push |

A list of remote image repos to push to. Each item has a url for the remote repo with a tag |

list[dict], optional. Required if you intend to use the multiarch feature |

docker_push:

- url: "nvcr.io/user/repo1:cb_test1"

- url: "gitlab/user/repo1:cb_test1"

...

|

docker_push.url |

Each url is a remote image repo with a tag name. |

string, required for docker_push |

docker_push:

- url: "nvcr.io/user/repo1:cb_test1"

|

docker_push.username |

Username to log in to the remote repo server. Note: not required if you have already run $ docker login server:port |

string, optional |

docker_push:
- url: "nvcr.io/user/repo1:cb_test1"
  username: <user>

|

docker_push.password |

Plain-text password to log in to the remote repo server. Note: not required if you have already run $ docker login server:port |

string, optional |

docker_push:
- url: "nvcr.io/user/repo1:cb_test1"
  username: <user>
  password: <password>

|

docker_push.password_env |

The name of a global OS environment variable that stores the password. This avoids exposing a plain-text password in the config file. Note: not required if you have already run $ docker login |

string, optional |

docker_push:
- url: "nvcr.io/user/repo1:cb_test1"
  username: <user>
  password_env: <TOKEN>

|

debug |

Debug settings that preserve some intermediate state |

dictionary, optional |

debug:
  docker_file: /path/to/dockerfile
  docker_folder: /path/to/docker_folder

|

debug.docker_file |

Preserve the generated Dockerfile for debugging |

string, optional |

debug:
  docker_file: /path/to/dockerfile

|

debug.docker_folder |

Preserve the generated docker folder for debugging |

string, optional |

debug:
  docker_folder: /path/to/docker_folder

|
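password_env keeps the secret out of the config file: the file stores only the name of an environment variable, and the value is read from the environment at push time. The following hypothetical Python sketch shows that lookup for one docker_push entry. It assumes password_env takes precedence over password; that precedence is not documented behavior.

```python
import os


def push_credentials(item, environ=None):
    """Resolve login credentials for one docker_push entry.

    Returns (username, password), or None when the entry carries no
    password, in which case an earlier `docker login` is relied on.
    Assumption: password_env is consulted before a plain-text password.
    """
    environ = os.environ if environ is None else environ
    username = item.get("username")
    env_name = item.get("password_env")
    if env_name:
        if env_name not in environ:
            raise KeyError(f"environment variable {env_name} is not set")
        return username, environ[env_name]
    if item.get("password"):
        return username, item["password"]
    return None
```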

Container dockerfile stage section specification

The table below lists the configuration fields for both compile_stage and clean_stage sections. Most fields are common to both stage models. Only clean_stage should be used for the final stage. Also keep in mind that compile_stage stages are not optimized and may contain extra packages and files that the final output does not need.

Note

All *dst fields whose values end with '/' denote a folder path on the target image. The meaning of *src depends on the real source path.

Fields |

Description |

Type and Range |

Example Notes |

Stage compile_stage / clean_stage |

target |

A graph target key, which corresponds to a target configuration used by the registry during graph install |

string, optional. Choose from x86 or aarch64

|

target: x86

|

both |

unique_stage |

A unique name for the current stage. It is also used as the Dockerfile target name |

string, required |

unique_stage: final_image

|

both |

base_image |

A stage name or a remote/local image on which the current target stage is based. |

string, optional |

base_image: "ubuntu:24.04"

For automatic selection based on the specified dependencies: base_image: "auto"

|

both |

platform |

The platform of the base_image, in case the same image name exists for multiple platforms |

string, optional. Default value: linux/amd64 |

platform: linux/amd64

|

both |

stage_model |

The stage model used to build this stage |

string, optional. Choose from clean_stage or compile_stage. Default value: clean_stage |

stage_model: clean_stage

|

both |

build_args |

A dictionary of build arguments added on top of the autogenerated Dockerfile. The arguments can be used in Docker instructions and other command lines |

dict[string, string], optional |

build_args:
  CURL_PACK: "curl"
apt_deps:
- "$CURL_PACK"

|

both |

apt_deps |

A list of Debian package names to install with apt |

list[string], optional |

apt_deps:

- curl

- ca-certificates

- zip=3.0-11build1

|

both |

pip3_deps |

A list of Python package names to install with pip3. Note: python3-pip must be installed, e.g. by listing it in apt_deps in the config file |

list[string], optional |

pip3_deps:

- PyGObject

- PyYAML

- result

|

both |

resources_files |

A list of resource files describing resources to copy into the container |

list[string], optional |

resources_files:

- resources1.yaml

- resources2.yaml

|

both |

local_copy_files |

A list of local files/folders to copy to destination paths in the stage image |

list[dict], optional |

local_copy_files:
- src: "/opt/nvidia/graph-composer/gxe"
  dst: "/opt/nvidia/bin/"
- src: deepstream-test1 # a local folder
  dst: "/workspace/deepstream-test1"

|

both |

local_copy_files.src |

A file/folder name on the local machine. A relative path is relative to the location of the config file |

string, optional |

local_copy_files:
- src: "/opt/nvidia/graph-composer/gxe"
  dst: "/opt/nvidia/bin/"
- src: deepstream-test1
  dst: "/workspace/deepstream-test1"

|

both |

local_copy_files.dst |

An absolute path in the target image stage. If src is an absolute path and dst is empty, dst is the same path as src. See the note at the top of this section about the dst folder policy |

string, optional |

Local file example:
local_copy_files:
- src: "/opt/nvidia/graph-composer/gxe"
  dst: "/opt/nvidia/graph-composer/gxe"

Alternatives for dst: an empty value (dst:), or dst: "/opt/nvidia/graph-composer"

Local folder example:
local_copy_files:
- src: "/opt/nvidia/samplefolder"
  dst: "/data/samplefolder"

|

both |

stage_copy_files |

A list of StageCopyStructure entries to copy files across multiple stages and multiple images |

list[dict], optional |

stage_copy_files:
- src_stage: "nvcr.io/public/image:xxxx"
  src: "/opt/nvidia/samples"

An empty dst is the same as the src path

|

both |

stage_copy_files.src_stage |

A stage name or an image name that the src files come from |

string, optional |

stage_copy_files:
- src_stage: "nvcr.io/public/image:xxxx"
  src: "/opt/nvidia/samples"
- src_stage: "<compile_stage_name>"
  src: "/opt/nvidia/bin"

|

both |

stage_copy_files.src |

A file/folder name in the src_stage image/stage. Note: an absolute path is recommended to avoid conflicts |

string, optional |

stage_copy_files:
- src_stage: "nvcr.io/public/image:xxxx"
  src: "/opt/nvidia/samples"

|

both |

stage_copy_files.dst |

A file/folder name in the target stage. Note: an absolute path is recommended to avoid conflicts; an empty dst is the same path as src |

string, optional. Default: empty, meaning the same path as src |

stage_copy_files:
- src_stage: "nvcr.io/public/image:xxxx"
  src: "/opt/nvidia/samples"
  dst: "/opt/nvidia/samples"

|

both |

http_archives |

A list of HTTP archive structures to download and install with custom commands. Note: the base image/stage should include curl and ca-certificates; otherwise, specify them in apt_deps. Archives are downloaded into a temporary folder and cleaned up automatically later. Specify an absolute path for filename if you don't want an archive to be cleaned up |

list[dict], optional |

http_archives:
- url: https://host/download/installer.sh
  post_cmd: "bash installer.sh"
  post_env:
    key: PATH
    value: "/root/bin:$PATH"
- url: https://host/download/sample.mp4
  filename: /opt/nvidia/video/sample.mp4

|

both |

http_archives.url |

The URL to download the archive from |

string, required for http_archives |

http_archives:

- url: https://host/download/installer.sh

|

both |

http_archives.filename |

Renames the downloaded file. It can be: a. Empty: the last field of the URL path is used as the filename, and the file is downloaded into a temporary folder and recycled later. b. A filename without a path: the archive is downloaded into a temporary folder and recycled later. c. An absolute path: the archive is downloaded to that path in the target image and kept there without being recycled. d. A relative path: not supported; the results are undefined. |

string, optional |

http_archives:
- url: https://host/download/sample.mp4
  filename: /opt/nvidia/video/sample.mp4

is the same as:

http_archives:
- url: https://host/download/sample.mp4
  filename: sample.mp4
  post_cmd: "cp -a sample.mp4 /opt/nvidia/video/sample.mp4"

|

both |

http_archives.post_cmd |

Specifies how to install the archive during the stage build |

string, optional |

http_archives:
- url: https://host/download/installer.sh
  post_cmd: "chmod a+x installer.sh && ./installer.sh"

Since filename is not set, the file is named installer.sh, after the last field of the URL |

both |

http_archives.post_env |

An environment setting applied after this archive is downloaded and installed. The environment variable has a key (string) and a value (string); both must follow Linux shell environment variable rules |

dict[key, value], optional. key: string, value: string |

To set PATH=/root/bin:$PATH, use:
http_archives:
- url: https://host/download/installer.sh
  post_cmd: "bash installer.sh"
  post_env:
    key: PATH
    value: "/root/bin:$PATH"

|

both |

http_archives.curl_option |

Extra curl options (e.g. credentials) used while downloading archives. |

string, optional |

http_archives:
- url: https://host/download/sample.mp4
  curl_option: "-u user:token"
  filename: /data/sample.mp4

An example that copies a netrc file into the image for curl and removes it after the archives are downloaded. Removing the file in a later step does not erase it from the earlier image layer, so prefer doing this in a compile_stage whose results are copied to a clean_stage:
local_copy_files:
- src: "/home/user/.netrc"
  dst: "/root/.netrc"
http_archives:
- url: https://host/download/sample.mp4
  curl_option: "-n"
  filename: /data/sample.mp4
custom_runs:
- "rm -rf /root/.netrc"

|

both |

git_repo_list |

A list of git repos to clone, download, and build from source with custom commands. Use a multi-stage config to build the source code and copy the binaries to the final stage with stage_copy_files |

list[dict], optional |

---
unique_stage: compile_1
stage_model: compile_stage
git_repo_list:
- url: https://github.com/org/project
  repo_folder: /workspace/project
  tag: master
  build_cmd: "./autogen.sh && make && make install"
---
unique_stage: final
stage_copy_files:
- src_stage: compile_1
  src: "/usr/local/bin/binary"
  dst: "/usr/bin/binary"

|

compile_stage only |

git_repo_list.url |

The URL from which to fetch the repo source code with git |

string, required for git_repo_list |

git_repo_list:

- url: https://github.com/org/project

|

compile_stage only |

git_repo_list.tag |

The exact tag/branch/commit ID of the git repo to fetch |

string, required for git_repo_list |

git_repo_list:
- url: https://github.com/org/project
  tag: master

|

compile_stage only |

git_repo_list.repo_folder |

An absolute folder path in the target stage to store the repo files |

string, required for git_repo_list |

git_repo_list:
- url: https://github.com/org/project
  tag: master
  repo_folder: /workspace/project

|

compile_stage only |

git_repo_list.build_cmd |

A custom shell command line that builds and installs the repo from source |

string, optional |

stage_model: compile_stage
git_repo_list:
- url: https://github.com/org/project
  tag: master
  repo_folder: /workspace/project
  build_cmd: "./autogen.sh && make && make install"

|

compile_stage only |

ssh_key_host_copy |

Enables automatically copying the host's SSH key into the compile stage. Note: This is not recommended, but it can be useful for git repos that require SSH keys. Enable it with care, since it might expose the private key in the compile stage. |

bool, optional. Default value: false |

ssh_key_host_copy: true

|

compile_stage only |

work_folder |

The workspace folder (WORKDIR) in the image stage, used as the default folder when the container is launched |

string, optional |

work_folder: /workspace/deepstream/

|

both |

custom_runs |

A list of custom RUN commands executed at the end of the docker build |

list[string], optional |

local_copy_files:
- src: "mypackage.deb"
  dst: "/tmp/"
custom_runs:
- "dpkg -i /tmp/mypackage.deb && rm -rf /tmp/*.deb"

|

both |

disable_run_true |

The auto-generated Dockerfile has a RUN true between consecutive local and stage copies. This works around docker build reporting copy errors in some cases. Keeping the default value is recommended until Docker fixes the issue, but the option remains available for users to change. |

bool, optional Default value: false |

disable_run_true: false

|

both |

env_list |

Custom environment settings applied at the end of the docker build |

dict[string, string], optional |

env_list:
  PATH: "/opt/bin/:$PATH"
  LD_LIBRARY_PATH: "/opt/lib/:$LD_LIBRARY_PATH"
  DISPLAY: ":0"

|

clean_stage only |

entrypoint |

The entrypoint for the image, as a list of strings |

list[string], optional |

entrypoint: ["/opt/bin/entrypoint.sh", "param1"]

|

clean_stage only |
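The path rules for local_copy_files and http_archives.filename described in the table can be summarized in code. This Python sketch is illustrative only, and the function names are hypothetical. Rejecting a relative src with an empty dst is an assumption, since the table defines the empty-dst default only for an absolute src.

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import urlparse


def local_copy_paths(src, dst, config_dir):
    """Resolve one local_copy_files entry to (host source path, image dst)."""
    source = PurePosixPath(src)
    # A relative src is resolved against the config file's folder.
    host_src = source if source.is_absolute() else PurePosixPath(config_dir) / source
    if not dst:
        # Documented default: an empty dst reuses an absolute src path.
        if not source.is_absolute():
            raise ValueError("set dst explicitly when src is a relative path")
        dst = src
    return host_src, dst


def archive_target(url, filename=""):
    """Apply the http_archives.filename rules (a) to (d).

    Returns (name_or_path, kept); kept is False when the download lands in
    a temporary folder that is cleaned up after the stage build.
    """
    if not filename:  # (a) name taken from the last field of the URL path
        return posixpath.basename(urlparse(url).path), False
    if posixpath.isabs(filename):  # (c) stays at this path in the image
        return filename, True
    if "/" not in filename:  # (b) bare name, downloaded to a temporary folder
        return filename, False
    raise ValueError("relative paths are not supported for filename")  # (d)
```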