Gst-nvtracker#

The Gst-nvtracker plugin allows the DS pipeline to use a low-level tracker library to track the detected objects over time persistently with unique IDs. It supports any low-level library that implements NvDsTracker API, including the reference implementations provided by the NvMultiObjectTracker library: IOU, NvSORT, NvDCF, MaskTracker and NvDeepSORT trackers. As part of this API, the plugin queries the low-level library for capabilities and requirements concerning the input format, memory type, and additional feature support. Based on these queries, the plugin then converts the input frame buffers into the format requested by the low-level tracker library. For example, NvDCF, MaskTracker, and NvDeepSORT trackers use NV12 or RGBA, while IOU and NvSORT requires no video frame buffers at all.

Based on the queries, the Gst-nvtracker plugin constructs the input data to the low-level tracker library, which consists of video frames and detected objects across multiple streams that are fed to the low-level library in a single data structure (i.e, a frame batch) through a single API call. This API design allows all the input data from multiple streams in the low-level tracker library processed in a batched processing mode (similar to the batch mode in cuFFT™, cuBLAS™, etc.) for potentially more efficient execution especially when accelerated on GPU. There for, it is required that the low-level tracker supports batch processing when using NvMOT_Process API.

The Gst-nvtracker plugin supports retrieval of the user-defined miscellaneous data from the low-level tracker library through NvMOT_RetrieveMiscData API, which includes useful object tracking information other than the default data for the current frame targets; for example, past-frame object data, targets in shadow tracking mode, full trajectory of terminated targets and re-identification features. More details on the types of miscellaneous data and what they means can be found in Miscellaneous Data Output section. The users are allowed to define other types of miscellaneous data in NvMOTTrackerMiscData.

More details on all these tracker APIs is discussed in NvDsTracker API for Low-Level Tracker Library section.

The plugin accepts NV12- or RGBA-formatted frame data from the upstream component and scales (and/or converts) the input buffer to a buffer in the tracker plugin based on the format required by the low-level library, with the frame resolution specified by tracker-width and tracker-height in the configuration file’s [tracker] section. The path to the low-level tracker library is to be specified via ll-lib-file configuration option in the same section. The low-level library to be used may also require its own configuration file, which can be specified via ll-config-file option. If ll-config-file is not specified, the low-level tracker library may proceed with its default parameter values.

The reference low-level tracker implementations provided by the NvMultiObjectTracker library support various types of multi-object tracking algorithms:

IOU Tracker: The Intersection-Over-Union (IOU) tracker uses the IOU values among the detector’s bounding boxes between the two consecutive frames to perform the association between them or assign a new target ID if no match found. This tracker includes a logic to handle false positives and false negatives from the object detector; however, this can be considered as the bare-minimum object tracker, which may serve as a baseline only.

NvSORT: The NvSORT tracker is the NVIDIA®-enhanced Simple Online and Realtime Tracking (SORT) algorithm. Instead of a simple bipartite matching algorithm, NvSORT uses a cascaded data association based on bounding box (bbox) proximity for associating bboxes over consecutive frames and applies a Kalman filter to update the target states. It is computationally efficient since it does not involve any pixel data processing.

NvDeepSORT: The NvDeepSORT tracker is the NVIDIA®-enhanced Online and Realtime Tracking with a Deep Association Metric (DeepSORT) algorithm, which uses the deep cosine metric learning with a Re-ID neural network for data association of multiple objects over frames. This implementation allows users to use any Re-ID network as long as it is supported by NVIDIA’s TensorRT™ framework. NvDeepSORT also uses a cascaded data association instead of a simple bipartite matching. The implementation is also optimized for efficient processing on GPU.

NvDCF: The NvDCF tracker is an online multi-object tracker that employs a discriminative correlation filter for visual object tracking, which allows independent object tracking even when detection results are not available. It uses the combination of the correlation filter responses and bounding box proximity for data association.

MaskTracker: MaskTracker simultaneously performs multi-object tracking and segmentation using advanced vision foundation models such as Segment Anything Model 2 (SAM2). It maintains high-quality segmentation and robust tracking, while automatically adding and removing targets as needed. MaskTracker can generate bounding boxes around segmentation masks, match them with detections, and fuse this information to improve bounding box accuracy. Additionally, it supports accelerated inference of the entire SAM2 network with TensorRT™.

More details on each algorithm and its implementation details can be found in NvMultiObjectTracker : A Reference Low-Level Tracker Library section.

Note

The source code of the Gst-nvtracker plugin is provided as a part of DeepStream SDK package under sources/gst-plugins/gst-nvtracker/ when installed on a system This is to allow users to make direct changes in the plugin whenever needed for their custom applications and also to show the users as to how the low-level libraries are managed and how the metadata is handled in the plugin.

Sub-batching#

The Gst-nvtracker plugin works in the batch processing mode by default. In this mode, the input frame batch is passed to and processed by a single instance of low-level tracker library. The advantage of batch processing mode is to allow GPUs to work on bigger amount of data at once, potentially increasing the GPU occupancy during execution and reducing the CUDA kernel launch overhead. Depending on the use cases, however, a potential issue is that there is a possibility that GPU could be idling (also referred to as GPU bubble) in some compute stages in the tracker unless the end-to-end operation within the module is carried out solely on the GPU. This is indeed the case if some of the compute modules in the tracker runs on CPU. If there are other components in the DeepStream pipeline that uses GPU (e.g., GPU-based inference in PGIE and SGIE), such CPU blocks in tracker can be hidden behind them, not affecting the overall throughput of the pipeline.

The newly-introduced Sub-batching feature allows the plugin to split the input frame batch into multiple sub-batches (for example, a four-stream pipeline can use two sub-batches in the tracker plugin, each of which takes care of two streams). Each sub-batch is assigned to a separate instance of low-level tracker library, where the input to the corresponding sub-batch is processed separately. Each instance of low-level tracker libraries runs on a dedicated thread running independently, allowing parallel processing of sub-batches and minimizing the GPU idling due to CPU compute blocks, which eventually results in higher resource utilization.

Because sub-batching assigns separate low-level tracker library instances to different sub-batches, it allows the user to configure each individual sub-batch differently with different low-level tracker library configuration files. This can be utilized in multiple ways like setting varied compute backends across sub-batches, using varied tracking algorithms across sub-batches or modifying any other configuration that is supported in low-level tracker configuration file. Each VPI™ backend low-level tracker library supports at most 128 streams, so when running more than 128 streams, users need to configure the sub-batching to run multiple instances of the low-level tracker library. More detailed example use-cases are discussed in Setup and Usage of Sub-batching section.

Inputs and Outputs#

This section summarizes the inputs, outputs, and communication facilities of the Gst-nvtracker plugin.

Input

Gst Buffer

A frame batch from available source streams

NvDsBatchMetaIncludes the detected object info from primary inference module

More details about NvDsBatchMeta can be found in the link.

The color formats supported for the input video frame by the NvTracker plugin are NV12 and RGBA. A separate batch of video frames are created from the input video frames based on the color format and the resolution that is required to the low-level tracker library.

Output

Gst Buffer

Same as the input. Unmodified.

NvDsBatchMetaUpdated with additional data from tracker low-level library

There’s no separate data structure for the output. Instead, the tracker plugin just adds/updates the data in the existing NvDsBatchMeta (and its NvDsObjectMeta) with the output data from the tracker low-level library, including tracked object coordinates, tracker confidence, and object IDs. There are some other miscellaneous data that can be attached as user-meta, which is covered in Miscellaneous Data Output section.

Note

If the tracker algorithm does not generate confidence value, then tracker confidence value will be set to the default value (i.e., 1.0) for tracked objects. For IOU, NvSORT and NvDeepSORT trackers, tracker_confidence is set to 1.0 as these algorithms do not generate confidence values for tracked objects. NvDCF tracker, on the other hand, generates confidence for the tracked objects due to its visual tracking capability, and its value is set in tracker_confidence field in NvDsObjectMeta structure.

Note that there are separate parameters in NvDsObjectMeta for detector’s confidence and tracker’s confidence, which are confidence and tracker_confidence, respectively. MaskTracker also fills the segmentation mask information in mask_params field. More details can be found in New metadata fields

The following table summarizes the features of the plugin.

Feature |

Description |

Release |

|---|---|---|

Configurable tracker width/height |

Frames are internally scaled in NvTracker plugin to the specified resolution for tracking and passed to the low-level lib |

DS 2.0 |

Multi-stream CPU/GPU tracker |

Supports tracking on batched buffers consisting of frames from multiple sources |

DS 2.0 |

NV12 Input |

DS 2.0 |

|

RGBA Input |

DS 3.0 |

|

Configurable GPU device |

User can select GPU for internal scaling/color format conversions and tracking |

DS 2.0 |

Dynamic addition/deletion of sources at runtime |

Supports tracking on new sources added at runtime and cleanup of resources when sources are removed |

DS 3.0 |

Support for user’s choice of low-level library |

Dynamically loads user selected low-level library |

DS 4.0 |

Support for batch processing exclusively |

Supports sending frames from multiple input streams to the low-level library |

DS 4.0 |

Multiple buffer formats as input to low-level library |

Converts input buffer to formats requested by the low-level library, for up to 4 formats per frame |

DS 4.0 |

Enabling tracking-id display |

Supports enabling or disabling display of tracking-id |

DS 5.0 |

Tracking ID reset based on event |

Based on the pipeline event (i.e., GST_NVEVENT_STREAM_EOS and GST_NVEVENT_STREAM_RESET), the tracking IDs on a particular stream can be reset to start from 0 or new IDs. |

DS 6.0 |

Tracker Miscellaneous data output |

Supports outputting user defined miscellaneous data (including object Re-ID features, the past-frame data, a list of terminated tracks, etc. ) if the low-level library supports the capability |

DS 6.3 |

Support for NVIDIA’s VPI™ based Crop-scaler and DCF-Tracker algorithms in NvDCF tracker |

DS 6.4 |

|

PVA-backend for NvDCF via VPI™’s unified API |

Allow PVA-based execution of a significant part of NvDCF on Jetson to achieve lower GPU utilization |

DS 6.4 |

Sub-batching |

Supports splitting of a batch of frames in sub-batches which are internally processed in parallel resulting in higher resource utilization. This feature also enables specification of a different config file for each sub-batch. |

DS 6.4 |

Single-View 3D Tracking |

Allow 3D world coordinate system based object tracking when camera/model info (3x4 projection matrix and 3D human model info) is provided for better handling of partial occlusion |

DS 6.4 |

MaskTracker (Developer Preview) |

A new algorithm for multi-object tracking and segmentation using SAM2 as visual tracking engine |

DS 8.0 |

Gst Properties#

The following table describes the Gst properties of the Gst-nvtracker plugin.

Property |

Meaning |

Type and Range |

Example Notes |

|---|---|---|---|

tracker-width |

Frame width at which the tracker is to operate, in pixels. |

Integer, 0 to 4,294,967,295 |

tracker-width=640 |

tracker-height |

Frame height at which the tracker is to operate, in pixels. |

Integer, 0 to 4,294,967,295 |

tracker-height=384 |

ll-lib-file |

Pathname of the low-level tracker library to be loaded by Gst-nvtracker. |

String |

ll-lib-file=/opt/nvidia/deepstream/deepstream/lib/libnvds_nvmultiobjecttracker.so |

ll-config-file |

Configuration file for the low-level library if needed. A list of configuration files can be specified when the property sub-batches is configured. |

Path to configuration file OR A list of paths to configuration files delimited by semicolon |

ll-config-file=config_tracker_NvDCF_perf.yml ll-config-file=config_tracker_NvDCF_perf1.yml; config_tracker_NvDCF_perf2.yml |

gpu-id |

ID of the GPU on which device/unified memory is to be allocated, and with which buffer copy/scaling is to be done. (dGPU only.) |

Integer, 0 to 4,294,967,295 |

gpu-id=0 |

tracking-surface-type |

Set surface stream type for tracking. (default value is 0) |

Integer, ≥0 |

tracking-surface-type=0 |

display-tracking-id |

Enables tracking ID display on OSD. |

Boolean |

display-tracking-id=1 |

compute-hw |

Compute engine to use for scaling. 0 - Default 1 - GPU 2 - VIC (Jetson only) |

Integer, 0 to 2 |

compute-hw=1 |

tracking-id-reset-mode |

Allow force-reset of tracking ID based on pipeline event. Once tracking ID reset is enabled and such event happens, the lower 32-bit of the tracking ID will be reset to 0 0: Not reset tracking ID when stream reset or EOS event happens 1: Terminate all existing trackers and assign new IDs for a stream when the stream reset happens (i.e., GST_NVEVENT_STREAM_RESET) 2: Let tracking ID start from 0 after receiving EOS event (i.e., GST_NVEVENT_STREAM_EOS) (Note: Only the lower 32-bit of tracking ID to start from 0) 3: Enable both option 1 and 2 |

Integer, 0 to 3 |

tracking-id-reset-mode=0 |

input-tensor-meta |

Use the tensor-meta from Gst-nvdspreprocess if available for tensor-meta-gie-id |

Boolean |

input-tensor-meta=1 |

tensor-meta-gie-id |

Tensor Meta GIE ID to be used, property valid only if input-tensor-meta is TRUE |

Unsigned Integer, ≥0 |

tensor-meta-gie-id=5 |

sub-batches |

Configures splitting of a batch of frames in sub-batches. There are two ways to configure sub-batches. First option allows static mapping of each source id to individual sub-batch. Second option lets the user configure the sub-batch sizes. Mapping of individual streams to sub-batch happens dynamically at runtime. |

Option 1 : Semicolon delimited integer array where each number corresponds to source id. Must include all values from 0 to (batch-size -1) where batch-size is configured in Option 2 : Colon delimited integer array where each number corresponds to size of a sub-batch (i.e. max number of stream a sub-batch can accommodate) |

Option 1 : sub-batches=0,1;2,3 In this example, a batch size of 4 is split into two sub-batches where the first sub-batch consists of source ids 0 & 1 and second sub-batch consists of source ids 2 & 3 Option 2 : sub-batches=2:1 The above example indicates that there are two sub-batches, first one can accommodate 2 streams and second one can accommodate 1. |

sub-batch-err-recovery-trial-cnt (Alpha feature) |

Configure the number of times the plugin can try to recover when the low level tracker in a sub-batch returns with a fatal error. To recover from the error, the plugin reinitializes the low level tracker library. |

Integer,≥-1 where, -1 corresponds to infinite trials |

sub-batch-err-recovery-trial-cnt=3 |

user-meta-pool-size |

The size of tracker miscellaneous data buffer pool |

Unsigned Integer, >0 |

user-meta-pool-size=32 |

NvDsTracker API for Low-Level Tracker Library#

A low-level tracker library can be implemented using the API defined in sources/includes/nvdstracker.h. Parts of the API refer to sources/includes/nvbufsurface.h. The names of API functions and data structures are prefixed with NvMOT, which stands for NVIDIA Multi-Object Tracker. Below is the general flow of the API from a low-level library’s perspective:

The first required function is:

NvMOTStatus NvMOT_Query ( uint16_t customConfigFilePathSize, char* pCustomConfigFilePath, NvMOTQuery *pQuery );

The plugin uses this function to query the low-level library’s capabilities and requirements before it starts any processing sessions (i.e., contexts) with the library. Queried properties include the input frame’s color format (e.g., RGBA or NV12) and memory type (e.g., NVIDIA® CUDA® device or CPU-mapped NVMM).

The plugin performs this query once during initialization stage, and its results are applied to all contexts established with the low-level library. If a low-level library configuration file is specified, it is provided in the query for the library to consult. The query reply structure,

NvMOTQuery, contains the following fields:NvMOTCompute computeConfig: Report compute targets supported by the library. The plugin currently only echoes the reported value when initiating a context.uint8_t numTransforms: The number of color formats required by the low-level library. The valid range for this field is0toNVMOT_MAX_TRANSFORMS. Set this to0if the library does not require any visual data.Note

0does not mean that untransformed data will be passed to the library.NvBufSurfaceColorFormat colorFormats[NVMOT_MAX_TRANSFORMS]: The list of color formats required by the low-level library. Only the firstnumTransformsentries are valid.NvBufSurfaceMemType memType: Memory type for the transform buffers. The plugin allocates buffers of this type to store color- and scale-converted frames, and the buffers are passed to the low-level library for each frame. The support is currently limited to the following types:dGPU:

NVBUF_MEM_CUDA_PINNED NVBUF_MEM_CUDA_UNIFIED

Jetson:

NVBUF_MEM_SURFACE_ARRAYbool supportBatchProcessing: True if the low-level library supports the batch processing across multiple streams; otherwise false.bool supportPastFrame: True if the low-level library supports outputting the past-frame data; otherwise false.

After the query, and before any frames arrive, the plugin must initialize a context with the low-level library by calling:

NvMOTStatus NvMOT_Init ( NvMOTConfig *pConfigIn, NvMOTContextHandle *pContextHandle, NvMOTConfigResponse *pConfigResponse );

The context handle is opaque outside the low-level library. In the batch processing mode, the plugin requests a single context for all input streams. In per-stream processing mode, on the other hand, the plugin makes this call for each input stream so that each stream has its own context. This call includes a configuration request for the context. The low-level library has an opportunity to:

Review the configuration and create a context only if the request is accepted. If any part of the configuration request is rejected, no context is created, and the return status must be set to

NvMOTStatus_Error. ThepConfigResponsefield can optionally contain status for specific configuration items.Pre-allocate resources based on the configuration.

Note

In the

NvMOTMiscConfigstructure, thelogMsgfield is currently unsupported and uninitialized.The

customConfigFilePathpointer is only valid during the call.

Once a context is initialized, the plugin sends frame data along with detected object bounding boxes to the low-level library whenever it receives such data from upstream. It always presents the data as a batch of frames, although the batch can contain only a single frame in per-stream processing contexts. Note that depending on the frame arrival timings to the tracker plugin, the composition of frame batches could either be a full batch (that contains a frame from every stream) or a partial batch (that contains a frame from only a subset of the streams). In either case, each batch is guaranteed to contain at most one frame from each stream.

The function call for this processing is:

NvMOTStatus NvMOT_Process ( NvMOTContextHandle contextHandle, NvMOTProcessParams *pParams, NvMOTTrackedObjBatch *pTrackedObjectsBatch );, where:

pParamsis a pointer to the input batch of frames to process. The structure contains a list of one or more frames, with at most one frame from each stream. Thus, no two frame entries have the samestreamID. Each entry of frame data contains a list of one or more buffers in the color formats required by the low-level library, as well as a list of object attribute data for the frame. Most libraries require at most one-color format.

pTrackedObjectsBatchis a pointer to the output batch of object attribute data. It is pre-populated with a value fornumFilled, which is the same as the number of frames included in the input parameters.If a frame has no output object attribute data, it is still counted in

numFilledand is represented with an empty list entry (NvMOTTrackedObjList). An empty list entry has the correctstreamIDset and numFilled set to0.Note

The output object attribute data

NvMOTTrackedObjcontains a pointer to the detector object (provied in the input) that is associated with a tracked object, which is stored inassociatedObjectIn. You must set this to the associated input object only for the frame where the input object is passed in. For a pipeline with PGIEinterval=1, for example:

Frame 0:

NvMOTObjToTrackXis passed in. The tracker assigns it ID 1, and the output object’sassociatedObjectInpoints toX.Frame 1: Inference is skipped, so there is no input object from detector to be associated with. The tracker finds Object 1, and the output object’s

associatedObjectInpoints toNULL.Frame 2:

NvMOTObjToTrackYis passed in. The tracker identifies it as Object 1. The output Object 1 hasassociatedObjectInpointing toY.

Depending on the capability of the low-level tracker, there could be some user-defined miscellaneous data to report to tracker plugin.

batch_user_meta_listinNvDsBatchMetaas a user-meta:NvMOTStatus NvMOT_RetrieveMiscData ( NvMOTContextHandle contextHandle, NvMOTProcessParams *pParams, NvMOTTrackerMiscData *pTrackerMiscData );

where:

pParamsis a pointer to the input batch of frames to process. This structure is needed to check the list of stream ID in the batch.

pTrackerMiscDatais a pointer to the output miscellaneous data for the current batch to be filled by the low-level tracker. The data structureNvMOTTrackerMiscDatais defined innvdstracker.h.

In case that a video stream source is removed on the fly, the plugin calls the following function so that the low-level tracker library can remove it as well. Note that this API is optional and valid only when the batch processing mode is enabled, meaning that it will be executed only when the low-level tracker library has an actual implementation for the API. If called, the low-level tracker library can release any per-stream resource that it may be allocated:

void NvMOT_RemoveStreams ( NvMOTContextHandle contextHandle, NvMOTStreamId streamIdMask );

When all processing is complete, the plugin calls this function to clean up the context and deallocate its resources:

void NvMOT_DeInit (NvMOTContextHandle contextHandle);

NvMultiObjectTracker : A Reference Low-Level Tracker Library#

Multi-object tracking (MOT) is a key building block for a large number of intelligent video analytics (IVA) applications that requires spatio-temporal analysis of objects of interest to draw further insights about the objects’ behaviors in long term. Given a set of detected objects from the Primary GIE (PGIE) module on a single or multiple streams and with the APIs defined to work with the tracker plugin, the low-level tracker library is expected to carry out actual multi-object tracking operations to keep persistent IDs to the same objects over time.

DeepStream SDK provides a single reference low-level tracker library, called NvMultiObjectTracker, that implements all four low-level tracking algorithms (i.e., IOU, NvSORT, NvDeepSORT, and NvDCF) in a unified architecture. It supports multi-stream, multi-object tracking in the batch processing mode for efficient processing on CPU and GPU (and PVA for Jetson). The following sections will cover the unified tracker architecture and the details of each reference tracker implementation.

Unified Tracker Architecture for Composable Multi-Object Tracker#

In NvMultiObjectTracker low-level tracker library, different types of multi-object trackers share common modules when it comes to basic functionalities (e.g., data association, target management, state estimation, etc.), while differing in other core functionalities (e.g., visual tracking for NvDCF and deep association metric for NvDeepSORT). The NvMultiObjectTracker library employs a unified architecture to allow the composition of a multi-object tracker through configuration by enabling only the modules required for a particular object tracker. The IOU tracker, for example, requires a minimum set of modules that consist of data association and target management modules. On top of that, NvSORT adds a state estimator for more accurate motion estimation & prediction, and NvDeepSORT further introduces a deep Re-ID network to integrate appearance information into data association. Instead of the deep neural network-based Re-ID features in NvDeepSORT, NvDCF employs a Discriminative Correlation Filter (DCF)-based visual tracking module that uses conventional feature descriptors for more efficient tracking. However, NvDCF can still allow the use of Re-ID module for target re-association for longer-term robustness. MaskTracker utilizes SAM2 as visual tracking module and generates bounding boxes around segmentation masks for data association with detections.

The table below summarizes what modules are used to compose each object tracker, showing what modules are shared across different object trackers and how each object tracker differs in module composition:

Tracker Type |

State Estimator |

Target Management |

Visual Tracker |

Target Re-Association |

Data Association Metric |

|||

|---|---|---|---|---|---|---|---|---|

Spatio- temporal |

Re-ID |

Proximity & Size |

Visual Similarity |

Re-ID |

||||

IOU |

O |

O |

||||||

NvSORT |

O |

O |

O |

|||||

NvDeepSORT |

O |

O |

O |

O |

||||

NvDCF |

O |

O |

O |

O |

O |

O |

O |

|

MaskTracker |

O |

O |

O (SAM2) |

O (SAM2) |

||||

In the following sections, we will first discuss the general work flow of the NvMultiObjectTracker library and its core modules, and then each type of object trackers in more details with explanations on the config params in each module.

Workflow and Core Modules in The NvMultiObjectTracker Library#

The input to a low-level tracker library consists of (1) a batch of video frames from a single or multiple streams and (2) a list of detector objects for each video frame. If the detection interval (i.e., interval in Primary GIE section) is set larger than 0, the input data to the low-level tracker would have the detector object data only when the inferencing for object detection is performed for a video frame batch (i.e., the inferenced frame batch). For the frame batches where the inference is skipped (i.e., the uninferenced frame batch), the input data would include only the video frames.

Note

A detector object refers to an object that is detected by the detector in PGIE module, which is provided to the multi-object tracker module as an input.

A target refers to an object that is being tracked by the object tracker.

An inferenced frame is a video frame where an inference is carried out for object detection. Since the inference interval can be configured in setting for PGIE and can be larger than zero, the

frameNumof two consecutive inferenced frames may not be contiguous.

For carrying out multi-object tracking operations with the given input data, below are the essential functionalities to be performed. Multithreading is deployed to optimize their performance on CPU.

Data association between the detector objects from a new video frame and the existing targets for the same video stream

Target management based on the data association results, including the target state update and the creation and termination of targets

Depending on the tracker types, there could be some addition processing before data association. For example, NvDeepSORT extracts Re-ID features from all the detector objects and computes the similarity, while NvDCF performs the visual tracker based localization so the targets’ predicted locations in a new frame can be used for data association. More details will be covered in each tracker’s section.

Data Association#

For data association, various types of similarity metrics are used to calculate the matching score between the detector objects and the existing targets, including:

Location similarity (i.e., proximity)

Bounding box size similarity

Re-ID feature similarity (specific to NvDeepSORT tracker)

Visual appearance similarity (specific to NvDCF tracker)

For the proximity between detector objects and targets, IOU is a typical metric that is widely used, but it also depends on the size similarity between them. The similarity of the box size between two objects can be used explicitly, which is calculated as the ratio of the size of the smaller box over the larger one.

The total association score for a pair of detector object and target is the weighted sum of all the metrics:

\[totalScore=w_1*IOU+w_2*sizeSimilarity+w_3*reidSimilarity+w_4*visualSimilarity\]

where \(w_i\) is the weight for each metric set in config file. Users can also set a minimum threshold for each similarity and the total score.

During the matching, a detector object is associated with a target that belongs to the same class by default to minimize the false matching. However, this can be disabled by setting checkClassMatch: 0, allowing objects can be associated regardless of their object class IDs. This can be useful when employing a detector like YOLO, which can detect many classes of objects, where there could be false classification on the same object over time.

Regarding the matching algorithm, users can set associationMatcherType as 0 to employ an efficient greedy algorithm for optimal bipartite matching with similarity metrics defined above, or 1 for a newly introduced method named cascaded data association for higher accuracy.

The cascaded data association consists of multi-stage matching, assigning different priorities and similarity metrics based on detection and target confidence. Detector objects are split into two sets, confirmed (confidence between [tentativeDetectorConfidence, 1.0]) and tentative (confidence between [minDetectorConfidence, tentativeDetectorConfidence]). Then three stage matching are performed sequentially:

Confirmed detections and validated (both active and inactive) targets

Tentative detections and active targets left

Confirmed detections left and tentative targets

The first stage uses the joint-similarity metrics defined above, while the later two stages only considers the IOU similarity, because proximity can be a more reliable metric than visual similarity or Re-ID when the detection confidence is low due to, say, partial occlusions or noise. Each stage takes different sets of bboxes as candidates and uses the efficient greedy algorithm for matching. The matched pairs are produced from each stage and combined together.

The output of the data association module consists of three sets of objects/targets:

The unmatched detector objects

The matched pairs of the detector objects and the existing targets

The unmatched targets

The unmatched detector objects are among the objects detected by a PGIE detector, yet not associated with any of the existing targets. An unmatched detector object is considered as a newly observed object that needs to be tracked, unless they are determined to be duplicates to any of the existing target. If the maximum IOU score of a new detector object to any of the existing targets is lower than minIouDiff4NewTarget, a new target tracker would be created to track the object since it is not a duplicate to an existing target.

Target Management and Error Handling#

Although a new object is detected by the detector (i.e., a detector object), there is a possibility that this may be a false positive. To suppress such noise in detection, the NvMultiObjectTracker tracker library employs a technique called Late Activation, where a newly detected object is examined for a period of time and activated for long-term tracking only if it survives such a period. To be more specific, whenever a new object is detected, a new tracker is created to track the object, but the target is initially put into the Tentative mode, which is a probationary period, whose length is defined by probationAge under TargetManagement section of the config file. During this probationary period, the tracker output will not be reported to the downstream, since the target is not validated yet; however, those unreported tracker output data (i.e., the past-frame data) are stored within the low-level tracker for later report.

The same target may be detected for the next frame; however, there could be false negative by the detector (i.e., missed detection), resulting in a unsuccessful data association to the target. The NvMultiObjectTracker library employs another technique called Shadow Tracking, where a target is still being tracked in the background for a period of time even when the target is not associated with a detector object. Whenever a target is not associated with a detector object for a given time frame, an internal variable of the target called shadowTrackingAge is incremented. Once the target is associated with a detector object, then shadowTrackingAge will be reset to zero.

If the target is in the Tentative mode and the shadowTrackingAge reaches earlyTerminationAge specified in the config file, the target will be terminated prematurely (which is referred to as Early Termination). If the target is not terminated during the Tentative mode and successfully assocciated with a detector object, the target is activated and put into the Active mode, starting to report the tracker outputs to the downstream. If the past-frame data is enabled, the tracked data during the Tentative mode will be reported as well, since they were not reported yet. Once a target is activated (i.e., in Active mode), if the target is not associated for a given time frame (or the tracker confidence gets lower than a threshold), it will be put into the Inactive mode, and its shadowTrackingAge will be incremented, yet still be tracked in the background. However, the target will be terminated if the shadowTrackingAge exceeds maxShadowTrackingAge.

The state transitions of a target tracker are summarized in the following diagram:

The NvMultiObjectTracker library can generate a unique ID to some extent. If enabled by setting useUniqueID: 1, each video stream will be assigned a 32-bit long random number during the initialization stage. All the targets created from the same video stream will have the same upper 32-bit of the uint64_t-type target ID set by the per-stream random number. In the meantime, the lower 32-bit of the target ID starts from 0. The randomly generated upper 32-bit number allows the target IDs from a particular video stream to increment from a random position in the possible ID space. If disabled (i.e., useUniqueID: 0, which is the default value), both the upper and lower 32-bit will start from 0, resulting in the target ID to be incremented from 0 for every run.

Note that the incrementation of the lower 32-bit of the target ID is done across the whole video streams in the same NvMultiObjectTracker library instantiation. Thus, even if the unique ID generation is disabled, the tracker IDs will be unique for the same pipeline run. If the unique ID generation is disabled, and if there are three objects for Stream 1 and two objects for Stream 2, for example, the target IDs will be assigned from 0 to 4 (instead of 0 to 2 for Stream 1 and 0 to 1 for Stream 2) as long as the two streams are being processed by the same library instantiation.

preserveStreamUpdateOrder controls whether to use single or multiple threads to update targets. If it is enabled, new IDs are generated sequentially following input stream ID order in each batch using a single thread, i.e. the objects for Stream 1 and 2 will have IDs from 0 to 2 and 3 to 4 respectively. By default, this option is disabled so target management is done with multi-threads to enable better performance but the ID order is not preserved. If the user needs consistent IDs over multiple runs for the same video source, please set preserveStreamUpdateOrder: 1 and batched-push-timeout=-1 in deepstream-app config.

The NvMultiObjectTracker library pre-allocates all the GPU memories during initialization based on:

The number of streams to be processed

The maximum number of objects to be tracked per stream (denoted as

maxTargetsPerStream)

Thus, the CPU/GPU memory usage by the NvMultiObjectTracker library is almost linearly proportional to the total number of objects being tracked, which is (number of video streams) × (maxTargetsPerStream), except the scratch memory space used by dependent libraries (such as cuFFT™, TensorRT™, etc.). Thanks to the pre-allocation of all the necessary memory, the NvMultiObjectTracker library is not expected to have memory growth during long-term run even when the number of objects increases over time.

Once the number of objects being tracked reaches the configured maximum value (i.e., maxTargetsPerStream), any new objects will be discarded until some of the existing targets are terminated. Note that the number of objects being tracked includes the targets that are being tracked in the shadow tracking mode. Therefore, NVIDIA recommends that users set maxTargetsPerStream large enough to accommodate the maximum number of objects of interest that may appear in a frame, as well as the objects that may have been tracked from the past frames in the shadow tracking mode.

The minDetectorConfidence property under BaseConfig section in a low-level tracker config file sets the confidence level below which the detector objects are filtered out.

State Estimation#

The NvMultiObjectTracker library employs two types of state estimators, both of which are based on Kalman Filter (KF): Simple-bbox KF, Regular-bbox KF, and Simple-location KF. The Simple-bbox KF has 6 states defined, which are {x, y, w, h, dx, dy}, where x and y indicate the coordinates of the top-left corner of a target bbox, while w and h the width and the height of the bbox, respectively. dx and dy denote the velocity of x and y states. The Regular-bbox KF, on the other hand, have 8 states defined, which are {x, y, w, h, dx, dy, dw, dh}, where dw and dh are the velocity of w and h states and the rest is the same as the Simple-bbox KF. The Simple-location KF has 4 states only, which are {x, y, dx, dy}. Unlike the the two KFs that estimate the bbox attributes, note that the Simple-location KF is meant to estimate the object location in either 2D camera image plane or 3D world ground plane.

All the Kalman Filter types employ a constant velocity model for generic use. The measurement vector for the Simple-bbox and Regular-bbox KF is defined as {x, y, w, h}, which represents the bbox attributes, while that for the Simple-location KF is defined as {x, y}. There is an option to use bbox aspect ratio a and its velocity da instead of w and dw when useAspectRatio is enabled, which is specially used by NvDeepSORT. In case the state estimator is used for a generic use case (like in the NvDCF tracker), the process noise variance for {x, y}, {w, h}, and {dx, dy, dw, dh} can be configured by processNoiseVar4Loc, processNoiseVar4Size, and processNoiseVar4Vel, respectively.

When a visual tracker module is enabled (like in the NvDCF tracker), there could be two different measurements from the state estimator’s point of view: (1) the bbox (or location) from the detector at PGIE and (2) the bbox (or location) from the tracker’s localization. This is because the NvDCF tracker module is capable of localizing targets using its own learned filter. The measurement noise variance for these two different types of measurements can be configured by measurementNoiseVar4Detector and measurementNoiseVar4Tracker. These parameters are expected to be tuned or optimized based on the detector’s and the tracker’s characteristics for better measurement fusion.

The usage of the state estimator in the NvDeepSORT tracker slightly differs from that for the aforementioned generic use case in that it is basically a Regular KF, yet with a couple of differences as per the original paper and the implementation (Check the references in NvDeepSORT Tracker section):

Use of the aspect ratio

aand the heighth(instead ofwandh) to estimate the bbox sizeThe process and measurement noises that are proportional to the bounding box height (instead of constant values)

To allow these differences, the state estimator module in the NvMultiObjectTracker library has a set of additional config parameters:

useAspectRatioto enable the use ofa(instead ofw)noiseWeightVar4LocandnoiseWeightVar4Velas the proportion coefficients for the measurement and velocity noise, respectively

Note that if these two parameters are set, the fixed process noise and measurement noise parameters for the generic use cases will be ignored.

Model Inference#

NvMultiObjectTracker supports different kinds of neural network models to improve the tracking accuracy, which are supported by a unified inference module using NVIDIA TensorRT™ backend for acceleration. Currently, below types of models are supported:

Re-identification: Given each target’s cropped image, extract a unique feature vector that is robust to spatial-temporal variance and occlusion. This is used in NvDeepSORT and target re-association, and the parameters are defined in

ReIDsection in the tracker config.Pose Estimation: Given each target’s cropped image, estimate the 2D and 3D key-points. These key-points are used in single (SV3DT) and multi-view 3D tracking (MV3DT) to estimate the 3D height and target foot location in the world coordinate system, and the parameters are defined in

PoseEstimatorsection in the tracker config.Target Segmentation: Given the whole frame image, prompt and past frame memory, segmentation models like SAM2 generate a segmentation mask for each target. The segmentation mask is used in MaskTracker to match targets with detections and fuse this information to improve bounding box accuracy, where the parameters are defined in

config_tracker_module_Segmenter.ymlas a separate config.

Note

Both NVIDIA TAO toolkit or third-party ONNX models are supported. Users can also setup their own models.

The setup instructions for all different types of models can be found in

/opt/nvidia/deepstream/deepstream/sources/tracker_ReID/README.Sample applications to use the latest models are also provided on GitHub:

To perform inference, the input image is first resized to the network input dimension inferDims and converted to the required colorFormat, which is either RGB or BGR. Then each pixel value is pre-processed by y = netScaleFactor*(x-offsets) where x is the initial pixel value with range [0,255], y is the corresponding output float value. This preprocessing is the same as the one used in Gst-nvinfer and Gst-nvinferserver plugins. The input is also transposed to the network’s inputOrder, which is either NCHW or NHWC. useVPICropScaler: 1 can be used to enable the VPI crop scaler, which is a hardware-accelerated crop scaler that performs above operations on GPU or PVA.

The TensorRT™ inference engine configuration is controlled through several key parameters: batchSize specifies the number of inputs processed simultaneously for optimal GPU utilization, while networkMode determines the inference precision (0=FP32, 1=FP16, 2=INT8). Model files are specified via onnxFile for ONNX format models, tltEncodedModel for TAO Toolkit encoded models, or modelEngineFile for pre-built TensorRT engines. For INT8 quantization, calibrationTableFile provides the calibration data required to maintain accuracy while achieving maximum performance. The workspaceSize parameter allocates GPU memory for TensorRT optimization and inference operations.

Target Re-Identification#

Re-identification (Re-ID) uses TensorRT™-accelerated deep neural networks to extract unique feature vectors from detected objects that are robust to spatial-temporal variance and occlusion. It has two use-cases in NvMultiObjectTracker: (1) In NvDeepSORT, the Re-ID similarity is used for data association of objects over consecutive frames.; (2) In target re-association (which will be described in more detail in the following section), the Re-ID features of targets are extracted and kept, so that they can be used for re-association with the same target if they are seemingly lost. reidType selects the mode for each aforementioned use-case.

In the Re-ID module, the detector objects are cropped and resized into the configured input size of the Re-ID network. The parameter keepAspc controls whether the object’s aspect ratio is preserved after cropping. Then NVIDIA TensorRT™ creates an engine from the network, which processes the input in batches and outputs a fixed-dimensional vector for each detector object as the Re-ID feature. The cosine similarity function requires each feature’s L2 norm normalized to 1. Check Re-ID Feature Output on how to retrieve these features in the tracker plugin and downstream modules. For each target, a gallery of its Re-ID features in most recent frames are kept internally. The size of the feature gallery can be set by reidHistorySize.

config_tracker_NvDeepSORT.ymlandconfig_tracker_NvDCF_accuracy.ymlconfigs use ReIdentificationNet by default, which is a ResNet-50 Re-ID network in NVIDIA TAO toolkit on NGC. Users need to follow instructions in Setup Sample Re-ID Models to setup, or check Customize Re-ID Model for more information on adding a custom Re-ID model for object tracking with different architectures and datasets.

The Re-ID similarity between a detector object and a target is the cosine similarity between the detector object’s Re-ID feature and its nearest neighbor in the target’s featue gallery, whose value is in range [0.0, 1.0]. Specifically, each Re-ID feature in the target’s gallery takes the dot product with the detector object’s Re-ID feature. The maximum of all the dot products is the similarity score, i.e.

\[score_{ij}=\max_{k}(feature\_det_{i}\cdot feature\_track_{jk})\]

where:

\(\cdot\) denotes the dot product.

\(feature\_det_{i}\) denotes the i-th detector object’s feature.

\(feature\_track_{jk}\) denotes the k-th Re-ID feature in the j-th target’s feature gallery. \(k\) =[1,

reidHistorySize].

The Re-ID has a spatial-temporal constraint. If an object moves out of frame or gets occluded beyond maxShadowTrackingAge, it will be assigned a new ID even if it returns into the frame.

The extracted Re-ID features (i.e., embeddings) can be exported to the metadata, which is explained in a separate section in Re-ID Feature Output.

Target Re-Association#

The target re-association algorithm enhances the long-term robustness of multi-object tracking by jointly using the Re-ID and spatio-temporal (i.e., motion) features. It addresses one of the major tracking failure cases that occurs in the situation where objects undergo partial- or full-occlusions in a gradual or abrupt manner. During this course of action, the detector at PGIE module may capture only some part of the objects (due to partial visibility), resulting in ill-sized, ill-centered boxes on the target. Later, the target cannot be associated with the object appearing again due to the size and location prediction errors, potentially causing tracking failures and ID switches. Such a re-association problem can typically be handled as a post-processing; however, for real-time analytics applications, this is often expected to be handled seamlessly as a part of the real-time multi-object tracking.

The target re-association takes advantage of the Late Activation and Shadow Tracking in target management module. It tries to associate the newly-appeared targets with previously lost targets based on motion and Re-ID similarity in a seamless, real-time manner by the following steps:

Tracklet Prediction: Whenever an existing target is not associated with a detector object for a prolonged period (same as probationAge), it is considered that the target is lost. While the visual tracker module keeps track of the target in the shadow tracking mode, a length of the predicted tracklet (configured by trajectoryProjectionLength) is generated using some of the recently matched tracklet points (whose length is set by prepLength4TrajectoryProjection) and stored into an internal database until it is matched again with a detector object or re-associated with another target.

Re-ID Feature Extraction: Before a target is lost, the Re-ID network extracts its Re-ID feature with the frame interval of reidExtractionInterval and stores them in the feature gallery. These features will be used to identify target re-appearance in the tracklet matching stage.

Target ID Acquisition: When a new target is instantiated, its validity is examined for a few frames (i.e., probationAge) and a target ID is assigned only if validated (i.e., Late Activation), after which the target state report starts. During the target ID acquisition, the new target is examined if it matches with one of the predicted tracklets from the existing targets in the internal database where the aforementioned predicted tracklets are stored. If matched, it would mean that the new target is actually the re-appearance of a disappeared target in the past. Then, the new target is re-associated with the existing target and its tracklet is fused into that as well. Otherwise, a new target ID is assigned.

Tracklet Matching: During the tracklet matching process in the previous step, the valid candidate tracklets are queried from the database based on the feasible time window configured by maxTrackletMatchingTimeSearchRange. For the new target and each candidate, both the motion and Re-ID similarity are taken into account for tracklet matching. The motion similarity is the average IOU along the tracklet with various criteria including the minimum average IOU score (i.e., minTrackletMatchingScore), maximum angular difference in motion (i.e., maxAngle4TrackletMatching), minimum speed similarity (i.e., minSpeedSimilarity4TrackletMatching), and minimum bbox size similarity (i.e., minBboxSizeSimilarity4TrackletMatching) computed by a Dynamic Time Warping (DTW)-like algorithm. The Re-ID similarity is the cosine distance between the new target’s Re-ID feature and its nearest neighbor in the candidate’s feature gallery. The total similarity score is the weighted sum of both metrics:

\[totalScore=w_1*IOU+w_2*reidSimilarity\]

where \(w_i\) is the weight for each metric set in config file. Users can also set a minimum threshold for each similarity and the total score.

Tracklet Fusion: Once two tracklets are associated, they are fused together to generate one smooth tracklet based on the matching status with detector and the confidence at each point.

config_tracker_NvDCF_accuracy.yml provides an example to enable this feature. Since Re-ID is computationally expensive, users may choose to increase reidExtractionInterval to improve performance or set the parameters like below (i.e., disabling Re-ID feature extraction) to use motion-only target re-association without Re-ID.

TrajectoryManagement: useUniqueID: 0 # Use 64-bit long Unique ID when assignining tracker ID. Default is [true] enableReAssoc: 1 # Enable Re-Assoc minMatchingScore4Overall: 0 # min matching score for overall minTrackletMatchingScore: 0.5644 # min tracklet similarity score for re-assoc matchingScoreWeight4TrackletSimilarity: 1.0 # weight for tracklet similarity score minTrajectoryLength4Projection: 36 # min trajectory length required to make projected trajectory prepLength4TrajectoryProjection: 50 # the length of the trajectory during which the state estimator is updated to make projections trajectoryProjectionLength: 94 # the length of the projected trajectory maxAngle4TrackletMatching: 106 # max angle difference for tracklet matching [degree] minSpeedSimilarity4TrackletMatching: 0.0967 # min speed similarity for tracklet matching minBboxSizeSimilarity4TrackletMatching: 0.5577 # min bbox size similarity for tracklet matching maxTrackletMatchingTimeSearchRange: 20 # the search space in time for max tracklet similarity trajectoryProjectionProcessNoiseScale: 0.0100 # trajectory projector's process noise scale w.r.t. state estimator trajectoryProjectionMeasurementNoiseScale: 100 # trajectory projector's measurement noise scale w.r.t. state estimator trackletSpacialSearchRegionScale: 0.2598 # the search region scale for peer tracklet ReID: reidType: 0 # The type of reid among { DUMMY=0, NvDEEPSORT=1, Reid based reassoc=2, both NvDEEPSORT and reid based reassoc=3}Note

Target re-association can be effective only when the state estimator is enabled, otherwise the tracklet prediction will not be made properly. The parameters provided above is tuned for PeopleNet v2.6.2, and it may not work as expected for other types of detectors.

Bounding-box Unclipping#

Another small experimental feature is the bounding box unclipping. If a target is fully visible within the field-of-view (FOV) of the camera but starts going out of the FOV, the target would be partially visible and the bounding box (i.e., bbox) may capture only a part of the target (i.e., clipped by the FOV) until it fully exits the scene. If it is expected that the size of the bbox doesn’t change much around the border of the video frame, the full bbox can be estimated beyond the FOV limit using the bbox size estimated when the target was fully visible. This feature can be enabled by setting enableBboxUnClipping: 1 under TargetManagement module in the low-level config file.

Single-View 3D Tracking#

Note

To allow users to easily try out and experience SV3DT, a sample usecase for SV3DT with pose estimator has been hosted on GitHub. So, users can just clone and run it with the sample data provided.

As mentioned earlier, partial occlusion is one of the most challenging problems that object trackers have to deal with and often lead to tracking failures. If the object detectors capture only the visible part of the object (which is often the case), the partial occlusion would cause the detection bboxes to have abrupt or gradual changes in attributes in terms of bbox location, size, aspect ratio, confidence, and most importantly the visual appearance within the bbox. Considering the object trackers rely on the bbox attributes as spatio-temporal measure and the visual appearance (e.g., ReID embedding) extracted within the bbox as visual similarity measure, such changes in bbox attributes is a major source of tracking failures, resulting in more frequent ID switches.

To tackle these challenging problems, DeepStream SDK introduced a new feature called the Single-View 3D Tracking (SV3DT) that allows the object tracking to be carried out in a 3D world coordinate system (instead of the 2D camera image plane) when (1) a 3x4 projection matrix and (2) a 3D model info are provided for a video stream in a camera info file like below.

# camInfo-01.yml # The 3x4 camera projection matrix (in row-major): # 996.229 -202.405 -9.121 -1.185 # 105.309 478.174 890.944 1.743 # -0.170 -0.859 0.481 -1085.484 projectionMatrix_3x4: - 996.229 - -202.405 - -9.121 - -1.185 - 105.309 - 478.174 - 890.944 - 1.743 - -0.170 - -0.859 - 0.481 - -1085.484 # The human model. modelInfo: height: 250.0 radius: 30.0 # "radius" represents the acual radius of the 3D cylinder, or half of a cuboid's bottom edge

There are two options (projectionMatrix_3x4 and projectionMatrix_3x4_w2p) in which users can provide corresponding 3x4 camera projection matrices to support different usecase. Please refer to The 3x4 Camera Projection Matrix section for more details.

Note that there are a few assumptions that this algorithm requires:

A human is modeled as a cylinder or cuboid with height and radius in the 3D world coordinate system. The cuboid’s bottom surface is assumed to be a square with edge length equal to twice the radius. The default height and radius are provided in 3D model info, which is the same for all the people. Additionally, a pose estimation model can be provided to estimate the 3D height of different people on the fly for better 3D accuracy.

A 3x4 projection matrix (that transforms a 3D world coordinate point to a 2D camera image coordinate point) is provided for a video stream or a camera.

Video streams are captured from cameras that are mounted higher than the human height. This ensures that when a human is partially-occluded, the upper body is still visible.

SV3DT algorithm can be used with an optional 2D pose estimator, which estimates human key-points on the 2D image plane. If the pose estimator is enabled, the algorithm uses upper body key-points as 2D anchor to achieve a higher 3D accuracy. Otherwise, the algorithm uses 2D detection bounding boxes, especially the top edge as 2D anchor, which we will touch upon shortly.

2D Key-Points as Anchors: If the 2D pose estimator is enabled, SV3DT can estimate each person’s different 3D height and refine it over frames. It will use the head and waist key-points as 2D anchors. It assumes the 3D line between these two key-points is perpendicular to the ground plane. By minimizing the projection error, it jointly solves the 3D distance between them and the world coordinate on the ground plane. Assuming body proportions are the same for all the people, which can be configured as waistHeightRatio, the 3D height can be estimated as headHeight - waistHeight * waistHeightRatio. Then the 3D human model is updated for each target with different 3D height. The radius is also adjusted proportionally to the 3D height. More information about the 2D pose estimator can be found in Pose Estimation section.

Detection Boxes as Anchors: When 2D pose esimator is disabled, for each person, SV3DT algorithm tries to fit the 3D human model to the detection bbox in such a way that the bounding box of the projected 3D human model from the world coordinate system to the camera image plane matches with the detection bbox.

In the cases where a person is partially occluded, the head and waist key-points, or the top edge of the detection bbox is used as an anchor to align the bbox of the projected 3D human model. Once aligned, we can recover the full-body bbox using the projected 3D human model, as if the person is not occluded. Therefore, if SV3DT is enabled, the input detection bboxes are always first recovered to the full-body bboxes based on the provided 3D model info especially when the input detection bboxes capture only the visible part of the person due to partial occlusions. This greatly enhances the multi-object tracking accuracy and robustness, since the bbox attributes are not altered during the course of partial occlusions.

An animated image below shows how 3D human models can be fitted into the input detection bboxes when the persons are partially occluded. The thin, gray bboxes on the persons indicate the input detection bboxes, which capture only the visible part of the objects. The figure demonstrates that the SV3DT algorithm is still able to estimate the accurate foot location of each person. The person trajectories are drawn based on the estimated foot locations, allowing robust spatio-temporal behavior analytics of persons in the scene despite varying degree of occlusions. Some of the persons in this example are barely seen only on the head and shoulders, but they are being successfully tracked as if not occluded at all.

As a derived metric, the ratio between the bbox for the visible part and the bbox for the projected 3D human model can be considered as an approximated visibility of the object, which could be a useful information.

Users can still get access to the corresponding detection bboxes by checking out detector_bbox_info in NvDsObjectMeta.

To enable SV3DT feature, we introduced a new section in tracker config files, ObjectModelProjection, like below:

ObjectModelProjection: cameraModelFilepath: # In order of the source streams - 'camInfo-01.yml' - 'camInfo_02.yml' ...

Every camera view is different, so the 3x4 projection matrix is supposed to be unique to each camera. Therefore, the camera info file (e.g., camInfo-01.yml) is to be provided for each stream, which include the 3x4 projection matrix and the model info that are shown at the beginning of the section.

Once a 3D human model corresponding to an input detection bbox is estimated and located in the world coordinate system, the foot location (i.e., the center of the base of the human model) of a person on the world ground plane is what we want to keep estimating because it is a physical state that better follows the motion dynamics modeling than the motion of the object on 2D camera image plane. To perform the state estimation of the foot location of the objects on a 3D world ground plane, users need to set the state estimator type as stateEstimatorType: 3 like below:

StateEstimator: stateEstimatorType: 3 # the type of state estimator among { DUMMY_ESTIMATOR=0, SIMPLE_BBOX_KF=1, REGULAR_BBOX_KF=2, SIMPLE_LOCATION_KF=3 } # [Dynamics Modeling] processNoiseVar4Loc: 6810.866 # Process noise variance for location processNoiseVar4Vel: 1348.487 # Process noise variance for velocity measurementNoiseVar4Detector: 100.000 # Measurement noise variance for detector's detection measurementNoiseVar4Tracker: 293.323 # Measurement noise variance for tracker's localization

Meta Data for 3D Tracking#

The additional miscellaneous data that is generated when SV3DT is enabled include (1) visibility, (2) foot location in both world plane and 2D image, (3) convex hull (human cylinders projected on 2D image), and (4) 3D bounding box information. These data can be saved in text files and/or outputted to object meta for downstream usage. To do that, users would need to set outputVisibility: 1, outputFootLocation: 1, outputConvexHull: 1 in ObjectModelProjection section respectively. Note that 3D bounding box information is controlled by the same setting as foot location (outputFootLocation: 1). The sample use cases includes saving in terminated track dump for low level tracker, attaching in KITTI track dump for deepstream-app, and converting them in schema through Gst-nvmsgconv.

NvDsObj3DBbox User Meta: When SV3DT is enabled, each tracked object’s 3D bounding box information is stored in the NvDsObj3DBbox user meta structure. This structure contains comprehensive 3D spatial information about the tracked object including centroid coordinates, dimensions, rotation angles, and velocity in world coordinates. The NvDsObj3DBbox is retrieved and added in updateObjectProjectionMeta() in nvtracker_proc.cpp. The complete structure definition can be found in nvds_tracker_meta.h.

NvDs3DTracking Schema: The NvDs3DTracking structure is used in the DeepStream schema for event messages and contains key 3D tracking metadata including foot locations in both world and image coordinates, visibility information, convex hull data, and 3D bounding box information. The complete structure definition can be found in nvdsmeta_schema.h.

A figure below shows how the 3D human models can be fitted to the input detection bboxes. Note the current DeepStream OSD only supports 3D cuboid visualization as in right figure. The left figure uses the convex hull of the projected 3D cylinder model on 2D camera image plane. Users can refer to Miscellaneous Data Output section on how to retrieve the convex hull and create their own script to plot the convex hull.

The 3x4 Camera Projection Matrix#

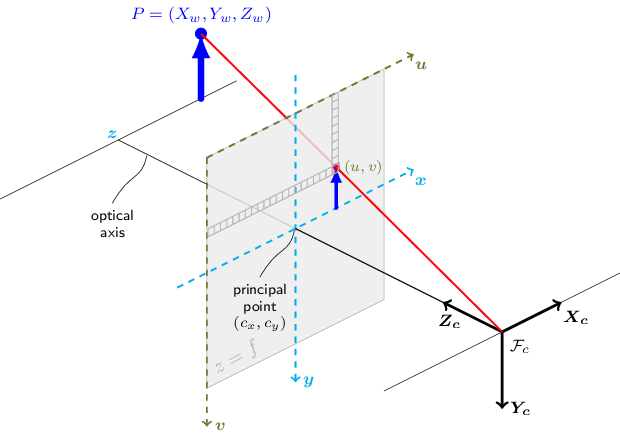

The 3x4 Camera Projection Matrix is also called as simply the camera matrix, which is a 3x4 matrix that converts a 3D world point to a 2D point on camera image plane based on a pinhole camera model like shown in the figure below:

Pinhole Camera Model. Source: OpenCV Doc and License#

More detailed and general information about the camera matrix can be found in various sources that deal with the computer vision geometries and camera calibration, including OpenCV’s documentation on Camera Calibration.

For projectionMatrix_3x4 in a camera model file (e.g., camInfo-01.yml), the principal point (i.e., (Cx, Cy)) in the camera matrix is assumed to be at (0, 0) as image coordinates. But, the optical center (i.e., (Cx, Cy)) is located at the image center (i.e., (img_width/2, img_height/2)). Thus, to move the origin to the left-top of the camera image (i.e., the pixel coordinates), SV3DT internally adds (img_width/2, img_height/2) after the transformation using the camera matrix provided in projectionMatrix_3x4.

In case that the 3x4 camera projection matrix already accounts for such translation of the principal point, users can provide the camera matrix in projectionMatrix_3x4_w2p instead. This assumes that the 3x4 camera projection matrix transforms a 3D world point directly into an actual pixel point whose origin is at the left-top corner of the image, so it does not require any further translation of the principal point.

Pose Estimation#

Pose estimation is a technique that estimates the key-points of a target, such as human or vehicle. It is used in SV3DT to estimate the 3D height in real world. Currently, SV3DT supports NVIDIA’s BodyPose3DNet with TensorRT acceleration. BodyPose3DNet is a 3D human pose estimation network based on HRNet architecture that predicts 34 keypoints in 3D space for a person in an image, including joints from head to toe such as pelvis, shoulders, elbows, wrists, hips, knees, and ankles. The model outputs both 2D and 3D keypoint coordinates, enabling accurate human pose tracking and analysis for applications like action understanding, surveillance, and human-robot interaction. It can be downloaded and used with command:

mkdir -p /opt/nvidia/deepstream/deepstream/samples/models/Tracker/

wget --content-disposition 'https://api.ngc.nvidia.com/v2/models/org/nvidia/team/tao/bodypose3dnet/deployable_accuracy_v1.0/files?redirect=true&path=bodypose3dnet_accuracy.etlt' -P /opt/nvidia/deepstream/deepstream/samples/models/Tracker/

Add PoseEstimator section in the tracker config like below to enable the network. Note the direct output of this network consists of both 2D and 3D key-points, but the 3D key-points are in each target’s local coordinate system. Currently, SV3DT only uses the 2D key-points to estimate the 3D height and target foot location in the world coordinate system.

Pose Estimator Parameters

PoseEstimator:

poseEstimatorType: 1 # Type of pose estimator used

useVPICropScaler: 1 # Use VPI backend for cropping and scaling

batchSize: 1 # Batch size for pose estimation

workspaceSize: 1000 # Workspace size in MB for the pose estimator engine

inferDims: [3, 256, 192] # Input dimensions for the pose estimator network (C, H, W)

networkMode: 1 # Inference precision mode (fp32=0, fp16=1, int8=2)

inputOrder: 0 # Input order for the network (NCHW=0, NHWC=1)

colorFormat: 0 # Input color format (RGB=0, BGR=1)

offsets: [123.6750, 116.2800, 103.5300] # Channel-wise mean subtraction values

netScaleFactor: 0.00392156 # Scaling factor for input normalization

onnxFile: "/opt/nvidia/deepstream/deepstream/samples/models/Tracker/bodypose3dnet_accuracy.onnx" # Path to the ONNX model file

modelEngineFile: "/opt/nvidia/deepstream/deepstream/samples/models/Tracker/bodypose3dnet_accuracy.onnx_b1_gpu0_fp16.engine" # Path to the engine file

poseInferenceInterval: -1 # Pose inference frame interval. -1 means only for the first frame of each target and use it to determine the target height.

Configuration Parameters#

The following table summarizes the configuration parameters for the common modules in the NvMultiObjectTracker low-level tracker library.

Module |

Property |

Meaning |

Type and Range |

Default value |

Support Dynamic Updates |

|---|---|---|---|---|---|

Base Config |

minDetectorConfidence |

Minimum detector confidence for a valid object |

Float, -inf to inf |

minDetectorConfidence: 0.0 |

True |

Control |

tracker-reset |

soft reset the tracker removing all tracks and track history |

Boolean |

tracker-reset:0 |

True |

Target Management |

preserveStreamUpdateOrder |

Whether to ensure target ID update order the same as input stream ID order |

Boolean |

preserveStreamUpdateOrder: 0 |

False |

maxTargetsPerStream |

Max number of targets to track per stream |

Integer, 0 to 65535 |

maxTargetsPerStream: 30 |

False |

|

minIouDiff4NewTarget |

Min IOU to existing targets for discarding new target |

Float, 0 to 1 |

minIouDiff4NewTarget: 0.5 |

True |

|

enableBboxUnClipping |

Enable bounding-box unclipping |

Boolean |

enableBboxUnClipping: 0 |

True |

|

probationAge |

Length of probationary period in #of frames |

Integer, ≥0 |

probationAge: 5 |

True |

|

maxShadowTrackingAge |

Maximum length of shadow tracking |

Integer, ≥0 |

maxShadowTrackingAge: 38 |

True |

|

earlyTerminationAge |

Early termination age |

Integer, ≥0 |

earlyTerminationAge: 2 |

True |

|

outputTerminatedTracks |

Output total frame history for terminated tracks to the tracker plugin for downstream usage |

Boolean |

outputTerminatedTracks: 0 |

False |

|

outputShadowTracks |

Output shadow track state information to the tracker plugin for downstream usage |

Boolean |

outputShadowTracks: 0 |

False |

|

terminatedTrackFilename |

File name prefix to save terminated tracks |

String |

terminatedTrackFilename: “” |

False |

|

Trajectory Management |

useUniqueID |

Enable unique ID generation scheme |

Boolean |

useUniqueID: 0 |

False |

enableReAssoc |

Enable motion-based target re-association |

Boolean |

enableReAssoc: 0 |

False |

|

minMatchingScore4Overall |

Min total score for re-association |

Float, 0.0 to 1.0 |

minMatchingScore4Overall: 0.4 |

True |

|

minTrackletMatchingScore |

Min tracklet similarity score for matching in terms of average IOU between tracklets |

Float, 0.0 to 1.0 |

minTrackletMatchingScore: 0.4 |

False |

|

minMatchingScore4ReidSimilarity |

Min ReID score for re-association |

Float, 0.0 to 1.0 |

minMatchingScore4ReidSimilarity: 0.8 |

True |

|

matchingScoreWeight4TrackletSimilarity |

Weight for tracklet similarity term in re-assoc cost function |

Float, 0.0 to 1.0 |

matchingScoreWeight4TrackletSimilarity: 1.0 |

True |

|

matchingScoreWeight4ReidSimilarity |

Weight for ReID similarity term in re-assoc cost function |

Float, 0.0 to 1.0 |

matchingScoreWeight4ReidSimilarity: 0.0 |

True |

|

minTrajectoryLength4Projection |

Min tracklet length of a target (i.e., age) to perform trajectory projection [frames] |

Integer, >=0 |

minTrajectoryLength4Projection: 20 |

True |

|

prepLength4TrajectoryProjection |

Length of the trajectory during which the state estimator is updated to make projections [frames] |

Integer, >=0 |

prepLength4TrajectoryProjection: 10 |

True |

|

trajectoryProjectionLength |

Length of the projected trajectory [frames] |

Integer, >=0 |

trajectoryProjectionLength: 90 |

True |

|

maxAngle4TrackletMatching |

Max angle difference for tracklet matching [degree] |

Integer, [0, 180] |

maxAngle4TrackletMatching: 40 |

True |

|

minSpeedSimilarity4TrackletMatching |

Min speed similarity for tracklet matching |

Float, 0.0 to 1.0 |

minSpeedSimilarity4TrackletMatching: 0.3 |

True |

|

minBboxSizeSimilarity4TrackletMatching |

Min bbox size similarity for tracklet matching |

Float, 0.0 to 1.0 |

minBboxSizeSimilarity4TrackletMatching: 0.6 |

True |

|

maxTrackletMatchingTimeSearchRange |

Search space in time for max tracklet similarity |

Integer, >=0 |

maxTrackletMatchingTimeSearchRange: 20 |

True |

|

trajectoryProjectionProcessNoiseScale |

Trajectory state estimator’s process noise scale |

Float, 0.0 to inf |

trajectoryProjectionProcessNoiseScale: 1.0 |

True |

|

trajectoryProjectionMeasurement NoiseScale |

Trajectory state estimator’s measurement noise scale |

Float, 0.0 to inf |

trajectoryProjectionMeasurement NoiseScale: 1.0 |

True |

|

trackletSpacialSearchRegionScale |

Re-association peer tracklet search region scale |

Float, 0.0 to inf |

trackletSpacialSearchRegionScale: 0.0 |

True |

|

reidExtractionInterval |

Frame interval to extract ReID features per target for re-association; -1 means only extracting the beginning frame per target |

Integer, ≥-1 |

reidExtractionInterval: 0 |

True |

|

Data Associator |

associationMatcherType |

Type of matching algorithm { GREEDY=0, CASCADED=1 } |

Integer, [0, 1] |

associationMatcherType: 0 |

False |

checkClassMatch |

Enable associating only the same-class objects |

Boolean |

False |

||

minMatchingScore4Overall |

Min total score for valid matching |

Float, 0.0 to 1.0 |

minMatchingScore4Overall: 0.0 |

True |

|

minMatchingScore4SizeSimilarity |

Min bbox size similarity score for valid matching |

Float, 0.0 to 1.0 |

minMatchingScore4SizeSimilarity: 0.0 |

True |

|

minMatchingScore4Iou |

Min IOU score for valid matching |

Float, 0.0 to 1.0 |

minMatchingScore4Iou: 0.0 |

True |

|

matchingScoreWeight4SizeSimilarity |

Weight for size similarity term in matching cost function |

Float, 0.0 to 1.0 |

matchingScoreWeight4SizeSimilarity: 0.0 |

True |

|

matchingScoreWeight4Iou |

Weight for IOU term in matching cost function |

Float, 0.0 to 1.0 |

matchingScoreWeight4Iou: 1.0 |

True |

|

tentativeDetectorConfidence |

If a detection’s confidence is lower than this but higher than minDetectorConfidence, then it’s considered as a tentative detection |

Float, 0.0 to 1.0 |

tentativeDetectorConfidence: 0.5 |

True |

|

minMatchingScore4TentativeIou |

Min iou threshold to match targets and tentative detection |

Float, 0.0 to 1.0 |

minMatchingScore4TentativeIou: 0.0 |

True |

|

State Estimator |

stateEstimatorType |

Type of state estimator among { DUMMY=0, SIMPLE=1, REGULAR=2, SIMPLE_LOC=3 } |

Integer, [0,3] |

stateEstimatorType: 0 |

False |

processNoiseVar4Loc |

Process noise variance for bbox center |

Float, 0.0 to inf |

processNoiseVar4Loc: 2.0 |

False |

|

processNoiseVar4Size |

Process noise variance for bbox size |

Float, 0.0 to inf |

processNoiseVar4Size: 1.0 |

False |

|

processNoiseVar4Vel |

Process noise variance for velocity |

Float, 0.0 to inf |

processNoiseVar4Vel: 0.1 |

False |

|

measurementNoiseVar4Detector |

Measurement noise variance for detector’s detection |

Float, 0.0 to inf |

measurementNoiseVar4Detector: 4.0 |

False |

|

measurementNoiseVar4Tracker |

Measurement noise variance for tracker’s localization |

Float, 0.0 to inf |

measurementNoiseVar4Tracker: 16.0 |

False |

|

noiseWeightVar4Loc |

Noise covariance weight for bbox location; if set, location noise will be proportional to box height |

Float, >0.0 considered as set |

noiseWeightVar4Loc: -0.1 |

False |

|

noiseWeightVar4Vel |