Gst-nvdsxfer#

The Gst-nvdsxfer plugin performs data transfer between discrete GPUs. It is currently supported on the x86 platform only.

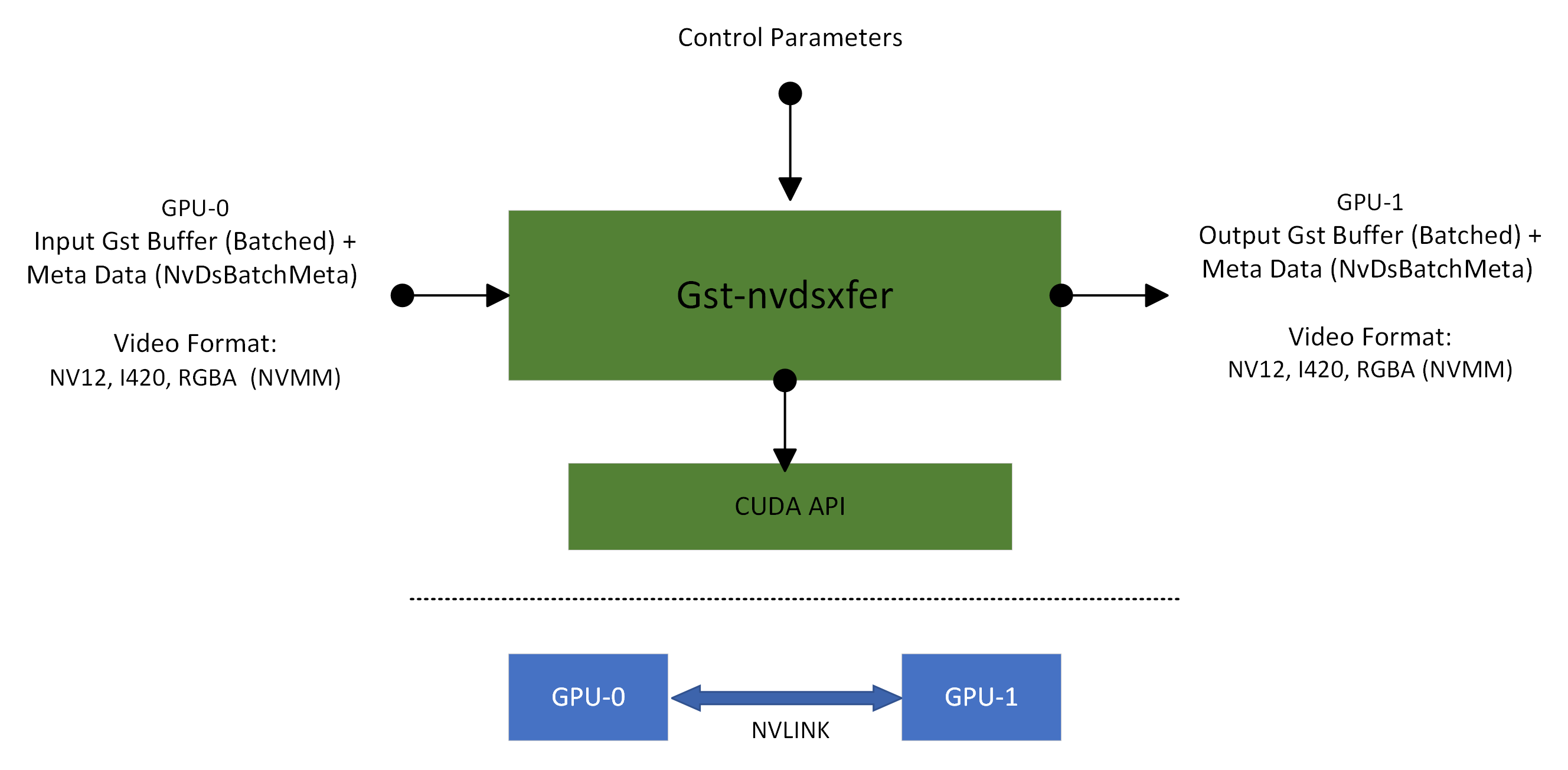

It uses CUDA APIs over NVIDIA NVLINK, a high-speed, direct GPU-to-GPU interconnect, to optimize data transfer between discrete GPUs.

The plugin accepts video Gst Buffers backed by CUDA memory (NvBufSurface allocated) from the upstream component. It transfers the input data to a video output Gst Buffer, also backed by CUDA memory (NvBufSurface allocated), using the optimized NVLINK-based data copy.

Note

The Gst-nvdsxfer plugin currently supports pipelines for the Single Node, Single Application, Multi-dGPU use case. Video format conversion and scaling are not supported during the data copy between two discrete GPUs. The dGPUs are connected using an NVLINK Bridge Connector. Before using the nvdsxfer plugin in a gst-pipeline, confirm the NVLINK state (active/inactive) between the two discrete GPUs with the command “nvidia-smi nvlink -s”.

As shown in the diagram above, input video data is copied to the output across NVLINK-connected discrete GPUs.

Inputs and Outputs#

This section summarizes the inputs and outputs of the Gst-nvdsxfer plugin.

Inputs

- Gst Buffer (batched buffer)
- NvDsBatchMeta
- Raw Video Format: NV12, I420, RGBA (NVMM)

Control parameters

- gpu-id
- p2p-gpu-id
- batch-size
- buffer-pool-size
- nvbuf-memory-type

Output

- Gst Buffer (batched buffer)
- NvDsBatchMeta
- Raw Video Format: NV12, I420, RGBA (NVMM)

Gst Properties#

The following table describes the Gst properties of the Gst-nvdsxfer plugin.

| Property | Meaning | Type and Range | Example notes |
|---|---|---|---|
| batch-size | Maximum number of buffers in a batch | Unsigned Integer. Range: 0 - 4294967295. Default: 1 | batch-size=2 |
| buffer-pool-size | Maximum number of buffers in muxer’s internal pool | Unsigned Integer. Range: 2 - 16. Default: 4 | buffer-pool-size=2 |
| gpu-id | Set GPU Device ID | Unsigned Integer. Range: 0 - 4294967295. Default: 0 | gpu-id=1 |
| p2p-gpu-id | Set P2P GPU ID to enable P2P access. By default, P2P access between GPUs is disabled. | Integer. Range: -1 - 1024. Default: -1 | p2p-gpu-id=0 |
| nvbuf-memory-type | Type of NvBufSurface memory to be allocated for output buffers | Enum “GstNvBufMemoryType”. Default: 0, “nvbuf-mem-default”. Values: (0) nvbuf-mem-default: default memory allocated, specific to the particular platform; (1) nvbuf-mem-cuda-pinned: allocate pinned/host CUDA memory; (2) nvbuf-mem-cuda-device: allocate device CUDA memory; (3) nvbuf-mem-cuda-unified: allocate unified CUDA memory | nvbuf-memory-type=2 |
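To illustrate how these properties combine on a command line, the following shell sketch assembles an nvdsxfer element description and rejects a buffer-pool-size outside the documented 2 - 16 range. The function name `nvdsxfer_element` and its argument order are assumptions made for this example and are not part of the plugin. Property names are spelled as in the table; the reference pipelines later on this page write `p2p_gpu_id`.

```shell
# Hypothetical helper: print an nvdsxfer element description for gst-launch-1.0.
# Usage: nvdsxfer_element GPU_ID [P2P_GPU_ID] [BUFFER_POOL_SIZE]
nvdsxfer_element() {
  local gpu_id="$1" p2p_gpu_id="${2:--1}" pool_size="${3:-4}"

  # buffer-pool-size is documented with the range 2 - 16 (default 4).
  if [ "$pool_size" -lt 2 ] || [ "$pool_size" -gt 16 ]; then
    echo "buffer-pool-size must be between 2 and 16" >&2
    return 1
  fi

  local element="nvdsxfer gpu-id=${gpu_id} buffer-pool-size=${pool_size}"

  # -1 is the documented default and leaves P2P access disabled,
  # so the property is only emitted for a real peer GPU ID.
  if [ "$p2p_gpu_id" -ge 0 ]; then
    element="${element} p2p-gpu-id=${p2p_gpu_id}"
  fi

  echo "$element"
}
```

Calling `nvdsxfer_element 1 0` prints an element that runs on GPU 1 with P2P access to GPU 0; the output can be spliced between two `queue` elements of a pipeline.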

How to test#

nvdsxfer is currently supported on x86 only. Support for “Jetson + dGPU” is not yet enabled.

The dGPUs are connected using an NVLINK Bridge Connector. Use the command below to confirm whether NVLINK is active (rather than inactive) and ready to use.

    nvidia-smi nvlink -s
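The check can also be scripted before a pipeline is launched. The sketch below reads the command's output on standard input and succeeds only when at least one link is listed and none is reported as inactive. The function name `nvlink_all_active` is hypothetical, and the parsing rests on an assumption about the output format: each link appears on a line containing `Link <n>` and inactive links contain the word `inactive`. Verify this against the output of the installed driver before relying on it.

```shell
# Hypothetical helper: decide from `nvidia-smi nvlink -s` output whether NVLINK is usable.
# Assumed format: one "Link <n>: ..." line per link, with "inactive" marking unusable links.
nvlink_all_active() {
  local status
  status="$(cat)"

  # No link lines at all means no NVLINK connection was reported.
  printf '%s\n' "$status" | grep -q 'Link [0-9]' || return 1

  # Any link flagged as inactive fails the check.
  if printf '%s\n' "$status" | grep -qi 'inactive'; then
    return 1
  fi
  return 0
}

# Usage on a machine with the NVIDIA driver installed:
#   nvidia-smi nvlink -s | nvlink_all_active && echo "NVLINK ready"
```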

The nvdsxfer plugin is currently verified using 2 separate dGPUs (discrete GPUs) only.

The gst-launch-1.0 pipelines listed below simulate some reference use cases using 2 separate dGPUs.

Set the property p2p_gpu_id=0 if Peer to Peer (P2P) access between the discrete GPUs is permitted.

If P2P access is not possible, the pipeline fails; remove the p2p_gpu_id=0 property to run it without P2P access.

The reference gst-launch-1.0 pipelines below use the legacy streammux by default. The new nvstreammux can also be used by setting the USE_NEW_NVSTREAMMUX=yes environment variable, with the appropriate properties set for the new streammux plugin.
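One way to handle the P2P case in a script is to keep the pipeline description in a variable and strip the P2P property when a retry without P2P access is needed. The helper below is a minimal sketch; the name `strip_p2p` is an assumption for this example, and it accepts both the `p2p_gpu_id` spelling used in the reference pipelines and the `p2p-gpu-id` spelling used in the properties table.

```shell
# Hypothetical helper: remove every P2P property assignment from a pipeline description.
# $1: pipeline description as a single string
strip_p2p() {
  printf '%s\n' "$1" | sed -E 's/ p2p[_-]gpu[_-]id=-?[0-9]+//g'
}

# Usage sketch (the pipeline variable is deliberately left unquoted so that the
# shell splits it into separate arguments for gst-launch-1.0):
#   gst-launch-1.0 $pipeline || gst-launch-1.0 $(strip_p2p "$pipeline")
#
# The same pipeline with the new nvstreammux enabled:
#   USE_NEW_NVSTREAMMUX=yes gst-launch-1.0 $pipeline
```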

Note

The gst-launch-1.0 pipelines in the Use cases section are not optimal, but they demonstrate how the nvdsxfer plugin can be used in various use cases to achieve better performance and GPU utilization.

Note

The deepstream-multigpu-nvlink-test sample application demonstrates an nvdsxfer-based GStreamer pipeline.

Refer to /opt/nvidia/deepstream/deepstream/sources/apps/sample_apps/deepstream-multigpu-nvlink-test/README for usage and the features the application supports.

Use cases#

The reference gst-launch-1.0 commands below use an h265 elementary stream as input. To use the sample mp4 stream provided with the DeepStream SDK, convert it from the mp4 container to an h265 elementary stream with the following commands.

    cd /opt/nvidia/deepstream/deepstream/
    gst-launch-1.0 filesrc location=samples/streams/sample_1080p_h265.mp4 ! qtdemux ! h265parse ! 'video/x-h265,stream-format=byte-stream' ! filesink location=samples/streams/sample_1080p.h265

Single Stream + Multi-dGPUs Setup#

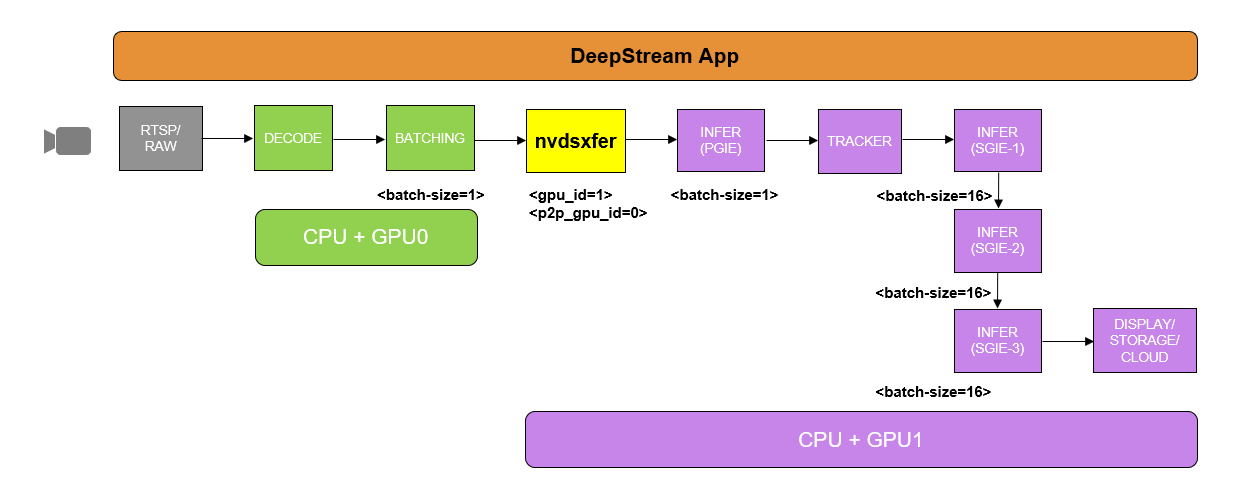

Running “Decode + StreamMux + PGIE” and “Tracker + SGIE (Multiple)” on separate dGPUs

    gst-launch-1.0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_0 nvstreammux name=m batch-size=1 gpu-id=0 \
        width=1920 height=1080 nvbuf-memory-type=2 ! queue ! nvinfer gpu-id=0 batch-size=1 \
        config-file-path=samples/configs/deepstream-app/config_infer_primary.txt ! queue ! \
        nvdsxfer gpu-id=1 p2p_gpu_id=0 ! queue ! nvtracker gpu-id=1 enable-batch-process=1 \
        ll-lib-file=lib/libnvds_nvmultiobjecttracker.so ll-config-file=samples/configs/deepstream-app/config_tracker_NvDCF_perf.yml ! \
        queue ! nvinfer gpu-id=1 batch-size=16 unique-id=2 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehiclemake.txt ! \
        queue ! nvinfer gpu-id=1 batch-size=16 unique-id=3 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehicletypes.txt ! \
        queue ! fpsdisplaysink video-sink=fakesink sync=0 -e -v

Running “Decode + StreamMux” and “PGIE + Tracker + SGIE (Multiple)” on separate dGPUs

    gst-launch-1.0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_0 nvstreammux name=m batch-size=1 gpu-id=0 \
        width=1920 height=1080 nvbuf-memory-type=2 ! queue ! nvdsxfer gpu-id=1 p2p_gpu_id=0 ! queue ! \
        nvinfer gpu-id=1 batch-size=1 config-file-path=samples/configs/deepstream-app/config_infer_primary.txt ! queue ! \
        nvtracker gpu-id=1 enable-batch-process=1 ll-lib-file=lib/libnvds_nvmultiobjecttracker.so \
        ll-config-file=samples/configs/deepstream-app/config_tracker_NvDCF_perf.yml ! queue ! nvinfer gpu-id=1 batch-size=16 unique-id=2 \
        config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehiclemake.txt ! queue ! nvinfer gpu-id=1 batch-size=16 unique-id=3 \
        config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehicletypes.txt ! queue ! fpsdisplaysink video-sink=fakesink sync=0 -e -v


Multiple Streams + Multi-dGPU Setup#

Running “Multi-instance (4) decode + StreamMux + PGIE” on a single dGPU and “Tracker + SGIE (multiple models)” on a separate dGPU

    gst-launch-1.0 nvstreammux name=m batch-size=4 gpu-id=0 width=1920 height=1080 nvbuf-memory-type=2 ! queue ! \
        nvinfer gpu-id=0 batch-size=4 config-file-path=samples/configs/deepstream-app/config_infer_primary.txt ! queue ! \
        nvdsxfer gpu-id=1 p2p_gpu_id=0 ! queue ! nvtracker gpu-id=1 enable-batch-process=1 \
        ll-lib-file=lib/libnvds_nvmultiobjecttracker.so ll-config-file=samples/configs/deepstream-app/config_tracker_NvDCF_perf.yml ! queue ! \
        nvinfer gpu-id=1 batch-size=16 unique-id=2 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehiclemake.txt ! queue ! \
        nvinfer gpu-id=1 batch-size=16 unique-id=3 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehicletypes.txt ! queue ! \
        fpsdisplaysink video-sink=fakesink sync=0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_1 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_2 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_3 -e -v
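The four decode branches in the pipeline above differ only in the index of the `m.sink_` pad they link to, so they can be generated with a loop instead of being written out by hand. The following is a sketch under that observation; the variable `SOURCE_BRANCH` and the function `decode_branches` are names made up for this example.

```shell
# Branch text copied from the reference pipeline, up to the pad index.
SOURCE_BRANCH='multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_'

# Hypothetical helper: print COUNT decode branches linked to m.sink_0 .. m.sink_<COUNT-1>.
# $1: number of input streams
decode_branches() {
  local count="$1" i=0 out=""
  while [ "$i" -lt "$count" ]; do
    out="${out}${SOURCE_BRANCH}${i} "
    i=$((i + 1))
  done
  # Drop the trailing separator before printing.
  printf '%s\n' "${out% }"
}

# Usage sketch: append the generated branches after the sink element, e.g.
#   gst-launch-1.0 <mux-to-sink part of the pipeline> $(decode_branches 4) -e -v
```

The stream count passed to `decode_branches` should match the `batch-size` set on nvstreammux and the primary nvinfer, as it does in the reference pipeline.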

Running “Multi-instance(4) decode + StreamMux” on single-dGPU and “PGIE + Tracker + SGIE(Multiple)” on separate dGPU

    gst-launch-1.0 nvstreammux name=m batch-size=4 gpu-id=0 width=1920 height=1080 nvbuf-memory-type=2 ! queue ! \
        nvdsxfer gpu-id=1 p2p_gpu_id=0 ! queue ! nvinfer gpu-id=1 batch-size=4 config-file-path=samples/configs/deepstream-app/config_infer_primary.txt ! queue ! \
        nvtracker gpu-id=1 enable-batch-process=1 ll-lib-file=lib/libnvds_nvmultiobjecttracker.so \
        ll-config-file=samples/configs/deepstream-app/config_tracker_NvDCF_perf.yml ! queue ! nvinfer gpu-id=1 batch-size=16 unique-id=2 \
        config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehiclemake.txt ! queue ! nvinfer gpu-id=1 batch-size=16 unique-id=3 \
        config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehicletypes.txt ! queue ! fpsdisplaysink video-sink=fakesink sync=0 \
        multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_0 \
        multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_1 \
        multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_2 \
        multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_3 -e -v

Running “Multi-instance (8) decode” on multi-dGPU and “StreamMux + PGIE + Tracker + SGIE (Multiple)” on any one of the dGPUs

    gst-launch-1.0 nvstreammux name=m batch-size=8 gpu-id=0 width=1920 height=1080 nvbuf-memory-type=2 ! queue ! nvinfer gpu-id=0 batch-size=8 \
        config-file-path=samples/configs/deepstream-app/config_infer_primary.txt ! queue ! nvtracker gpu-id=0 enable-batch-process=1 \
        ll-lib-file=lib/libnvds_nvmultiobjecttracker.so ll-config-file=samples/configs/deepstream-app/config_tracker_NvDCF_perf.yml ! queue ! \
        nvinfer gpu-id=0 batch-size=16 unique-id=2 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehiclemake.txt ! queue ! \
        nvinfer gpu-id=0 batch-size=16 unique-id=3 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehicletypes.txt ! queue ! \
        fpsdisplaysink video-sink=fakesink sync=0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_1 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_2 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_3 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_4 multifilesrc \
        location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! \
        nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_5 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_6 multifilesrc \
        location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! \
        nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_7 -e -v
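In the pipeline above, only the streams decoded on GPU 1 pass through nvdsxfer before reaching the muxer on GPU 0; streams decoded on GPU 0 link to the muxer directly. That rule can be sketched as a small helper. The function name `xfer_hop` is an assumption for this example, and the property values mirror the reference pipeline: `gpu-id` names the destination GPU and `p2p_gpu_id` names the GPU the data comes from.

```shell
# Hypothetical helper: print the nvdsxfer hop needed between a decoder and the muxer, if any.
# $1: GPU that decodes the stream
# $2: GPU that runs nvstreammux and the downstream elements
xfer_hop() {
  local decode_gpu="$1" mux_gpu="$2"
  if [ "$decode_gpu" -ne "$mux_gpu" ]; then
    # Different GPUs: copy the decoded buffers to the muxer's GPU.
    printf 'nvdsxfer gpu-id=%s p2p_gpu_id=%s ! queue ! ' "$mux_gpu" "$decode_gpu"
  fi
  # Same GPU: print nothing, so the decoder links straight to the muxer pad.
}

# Usage sketch for one branch decoded on GPU 1 and muxed on GPU 0:
#   ... ! nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! $(xfer_hop 1 0)m.sink_4
```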

Running “Multi-instance(8) decode” on multi-dGPU, “StreamMux + PGIE” and “Tracker + SGIE(Multiple)” on separate dGPU

    gst-launch-1.0 nvstreammux name=m batch-size=8 gpu-id=0 width=1920 height=1080 nvbuf-memory-type=2 ! queue ! nvinfer gpu-id=0 batch-size=8 \
        config-file-path=samples/configs/deepstream-app/config_infer_primary.txt ! queue ! nvdsxfer gpu-id=1 p2p_gpu_id=0 ! queue ! nvtracker gpu-id=1 \
        enable-batch-process=1 ll-lib-file=lib/libnvds_nvmultiobjecttracker.so ll-config-file=samples/configs/deepstream-app/config_tracker_NvDCF_perf.yml ! \
        queue ! nvinfer gpu-id=1 batch-size=16 unique-id=2 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehiclemake.txt ! \
        queue ! nvinfer gpu-id=1 batch-size=16 unique-id=3 config-file-path=samples/configs/deepstream-app/config_infer_secondary_vehicletypes.txt ! \
        queue ! fpsdisplaysink video-sink=fakesink sync=0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! \
        queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_0 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! \
        queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_1 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! \
        queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_2 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! \
        queue ! nvv4l2decoder gpu-id=0 cudadec-memtype=0 ! queue ! m.sink_3 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! \
        queue ! nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_4 multifilesrc \
        location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! \
        nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_5 multifilesrc location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! \
        nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_6 multifilesrc \
        location=samples/streams/sample_1080p.h265 loop=true ! h265parse ! queue ! nvv4l2decoder gpu-id=1 cudadec-memtype=0 ! queue ! \
        nvdsxfer gpu-id=0 p2p_gpu_id=1 ! queue ! m.sink_7 -e -v